This paper changed my life: ‘Spontaneous cortical activity reveals hallmarks of an optimal internal model of the environment,’ from the Fiser Lab

Fiser’s work taught me how to think about grounding computational models in biologically plausible implementations.

Answers have been edited for length and clarity.

What paper changed your life?

Spontaneous cortical activity reveals hallmarks of an optimal internal model of the environment. Berkes P., Orbán G., Lengyel M. and Fiser J. Science (2011)

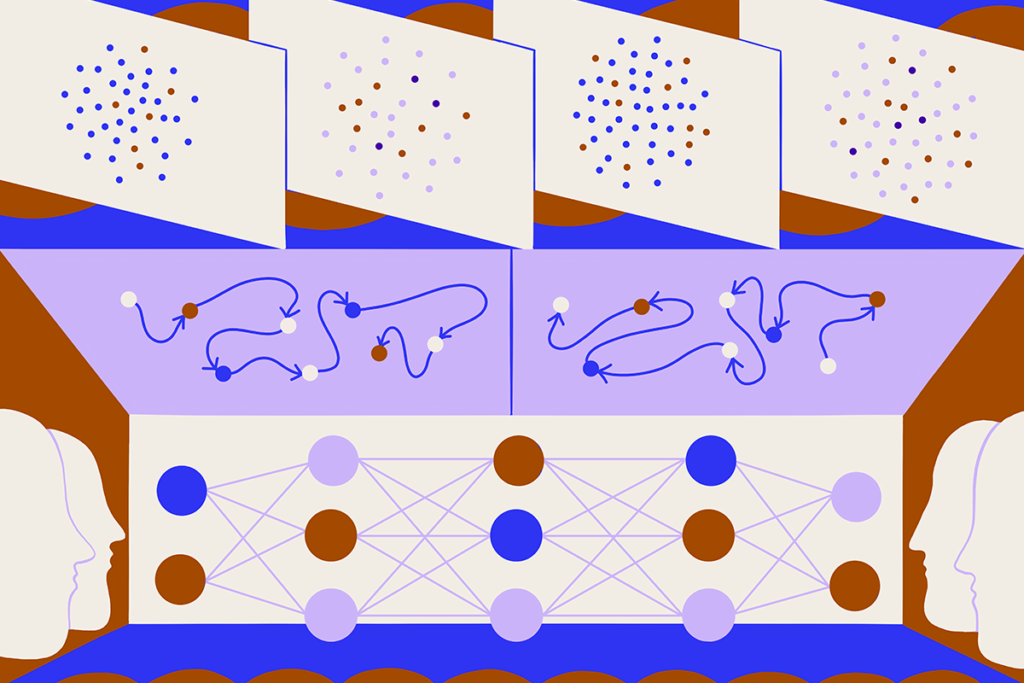

A dominant view in neuroscience holds that, when it comes to perception, the brain performs something that looks like Bayesian inference. The brain somehow develops and instantiates a prior expectation about what is likely to occur in the environment (the distribution of edge and contour orientations, for example) and then combines that expectation with incoming sensory information to form a posterior estimate that drives the percept, such as the orientation of a line within the visual field. How brains represent prior expectations and incoming sensory information probabilistically, and how they combine these representations to create percepts, is still debated.
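The combination step described above can be made concrete with a minimal sketch. It assumes, purely for illustration, that both the prior and the sensory evidence are Gaussian over a line's orientation, and it treats orientation as an ordinary number rather than a circular quantity. The function name and the numbers are hypothetical and are not taken from the paper.

```python
def combine_gaussian(prior_mean, prior_var, obs_mean, obs_var):
    """Posterior mean and variance for a Gaussian prior and a Gaussian likelihood.

    Each source is weighted by its precision (1 / variance), so the more
    reliable source pulls the posterior estimate toward itself.
    """
    prior_precision = 1.0 / prior_var
    obs_precision = 1.0 / obs_var
    post_var = 1.0 / (prior_precision + obs_precision)
    post_mean = post_var * (prior_precision * prior_mean + obs_precision * obs_mean)
    return post_mean, post_var


# Hypothetical numbers: a broad prior centered on 0 degrees (variance 100)
# and a more reliable sensory measurement of 20 degrees (variance 25).
post_mean, post_var = combine_gaussian(0.0, 100.0, 20.0, 25.0)
print(post_mean, post_var)
```

With these made-up values, the posterior estimate lands much closer to the measurement than to the prior, and its variance is smaller than that of either source alone.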

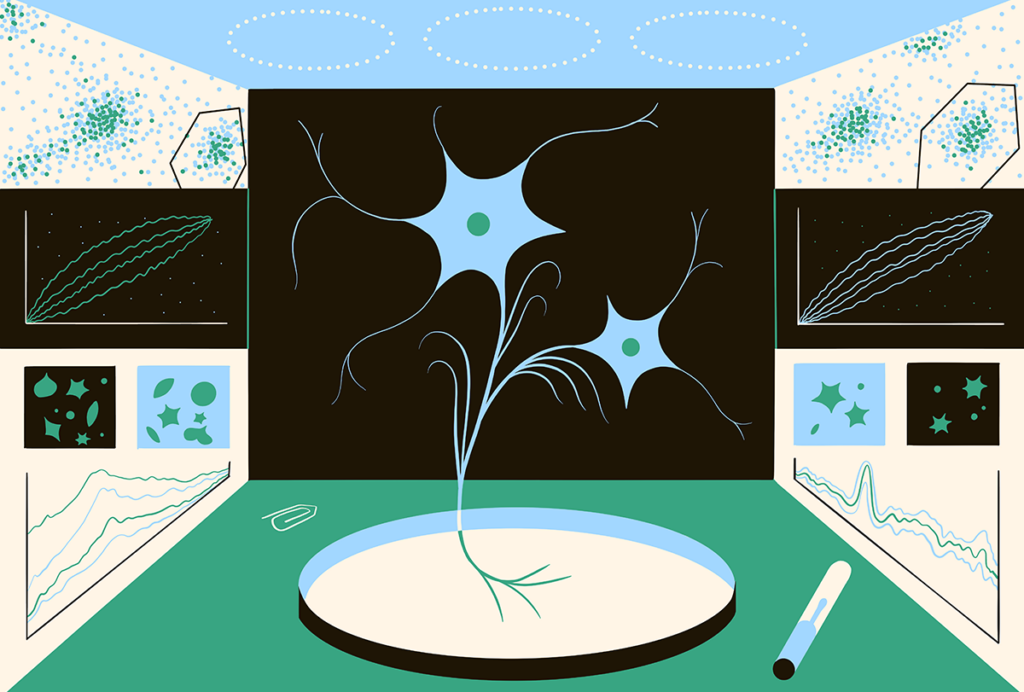

In this paper, Jozsef Fiser and his colleagues ask how components of a Bayesian model of perception might be neurally instantiated, specifically with respect to visual perception and visual cortex activity in ferrets. Their elegant electrophysiological work shows that expectations about environmental variables are represented in the ongoing, population-level spontaneous activity of neurons in the visual cortex, and that this spontaneous activity optimally converges to resemble evoked activity in response to naturalistic sensory stimuli as the animal develops and learns through experience.
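One way to put a number on how closely spontaneous activity “resembles” evoked activity is to compare the distributions of population activity patterns under each condition. The sketch below does this with a Kullback-Leibler divergence on toy data. It is an illustrative choice under stated assumptions, not a reproduction of the paper's analysis: the binary encoding of patterns, the smoothing constant and every data value are hypothetical.

```python
from collections import Counter
import math


def pattern_distribution(patterns, n_units, eps=1e-6):
    """Empirical probability of each binary population pattern.

    `patterns` is a list of integers, each encoding which of `n_units`
    units were active in one time bin (bit i set = unit i active).
    A small constant `eps` keeps unseen patterns from having zero probability.
    """
    counts = Counter(patterns)
    n_states = 2 ** n_units
    total = len(patterns) + eps * n_states
    return [(counts.get(state, 0) + eps) / total for state in range(n_states)]


def kl_divergence(p, q):
    """KL divergence D(p || q) in nats between two discrete distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


# Toy two-unit recordings (hypothetical values).
evoked = [0b00, 0b01, 0b01, 0b11]
spontaneous_young = [0b00, 0b00, 0b00, 0b10]
spontaneous_adult = [0b00, 0b01, 0b01, 0b11]

p_evoked = pattern_distribution(evoked, n_units=2)
kl_young = kl_divergence(p_evoked, pattern_distribution(spontaneous_young, n_units=2))
kl_adult = kl_divergence(p_evoked, pattern_distribution(spontaneous_adult, n_units=2))
print(kl_young, kl_adult)
```

In this toy setup, a smaller divergence for the “adult” data than for the “young” data would correspond to spontaneous activity moving closer to evoked activity with experience.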

When did you first encounter this paper?

Sometime around when it was published, in late 2011 or early 2012.

Why is this paper meaningful to you?

I was about halfway through my Ph.D. and just starting to appreciate the extreme difficulty of studying how probabilistic perceptual information is represented in brains.

This was the first paper I read on the topic where the explanation and evidence presented were immediately “grokkable” to me. It provided a succinct and holistic view of how the brain might represent probabilistic perceptual information, and one that explicitly answered the question of how prior expectations and incoming information combine in overlapping regions of the brain, and indeed in overlapping neuronal populations! Reading this paper and feeling like I understood it made me more confident that I could grasp other literature in the space, and maybe even contribute some of my own insights to the conversation eventually.

How did this research change how you think about neuroscience or challenge your previous assumptions?

During my Ph.D., I studied the computations underlying multisensory perception, primarily in humans. My goal was to contribute an explanation of how the brain integrates incoming sensory evidence from multiple sources (visual and haptic) with prior expectations about objects’ sizes and densities to produce the percept of heaviness.

My colleagues and I had developed some hierarchical Bayesian causal-inference models that seemed to match human behavior, but I was struggling to conceptually link those models back to brains. Bayesian inference is all very nice, but I felt that if it wasn’t related to what the brain is actually doing, something crucial was missing from the explanations I was so painstakingly seeking.

I had read other papers that purported to have made this link and was working on developing an understanding, but I still felt unsatisfied. When I read this paper, though, things started to make more sense.

It was the first time that I saw a tangible example of the link I was searching for. It gave a real grounding to the modeling work I was doing then and that I still do in my research today. This grounding returns again and again even now, compelling me to always consider how the “software” of perception, metacognition and subjective experience that I’m trying to reverse engineer is translated to human wetware.

How did this research influence your career path?

This paper was the catalyst for what became a fascination with finding ways to intervene in specific populations of cells identified not by their location but by their function as part of the dynamic whole. I started thinking about more direct links between computational models and biologically plausible implementations, and—importantly—how I could possibly get at the level of granularity typical of electrophysiology in model organisms through noninvasive methods in humans.

As a postdoctoral researcher, I worked with monkey electrophysiology data and human electrocorticography data for the first time and was so excited to get my hands dirty with custom-written code to look at the dynamics of small windows into the brain. The influence of this particular paper eventually drove me to link my models to specifics of neural representations and dynamics, and it pushed me to suggest novel ways to use real-time functional MRI to test computational models of perceptual metacognition.

Many of the craziest ideas and most exciting projects I pursued in my career probably stem in some way from reading this paper in graduate school.

Is there an underappreciated aspect of this paper you think other neuroscientists should know about?

It isn’t about this paper per se, but I think anybody interested in these concepts should be aware that the debate about how the brain represents probabilistic information and performs “Bayes-like” computations is still very much a hot topic. For example, the conversation forms the basis of one of the ongoing “Generative Adversarial Collaborations” to have come out of the Cognitive Computational Neuroscience conference. I encourage folks to keep an eye on this work.
