This paper changed my life: ‘A massively parallel architecture for a self-organizing neural pattern recognition machine,’ by Carpenter and Grossberg

This paper taught me that we can use mathematical modeling to understand how neural networks are organized—and led me to a doctoral program in the department led by its authors.

Answers have been edited for length and clarity.

What paper changed your life?

A massively parallel architecture for a self-organizing neural pattern recognition machine. G.A. Carpenter and S. Grossberg. Computer Vision, Graphics, and Image Processing (1987)

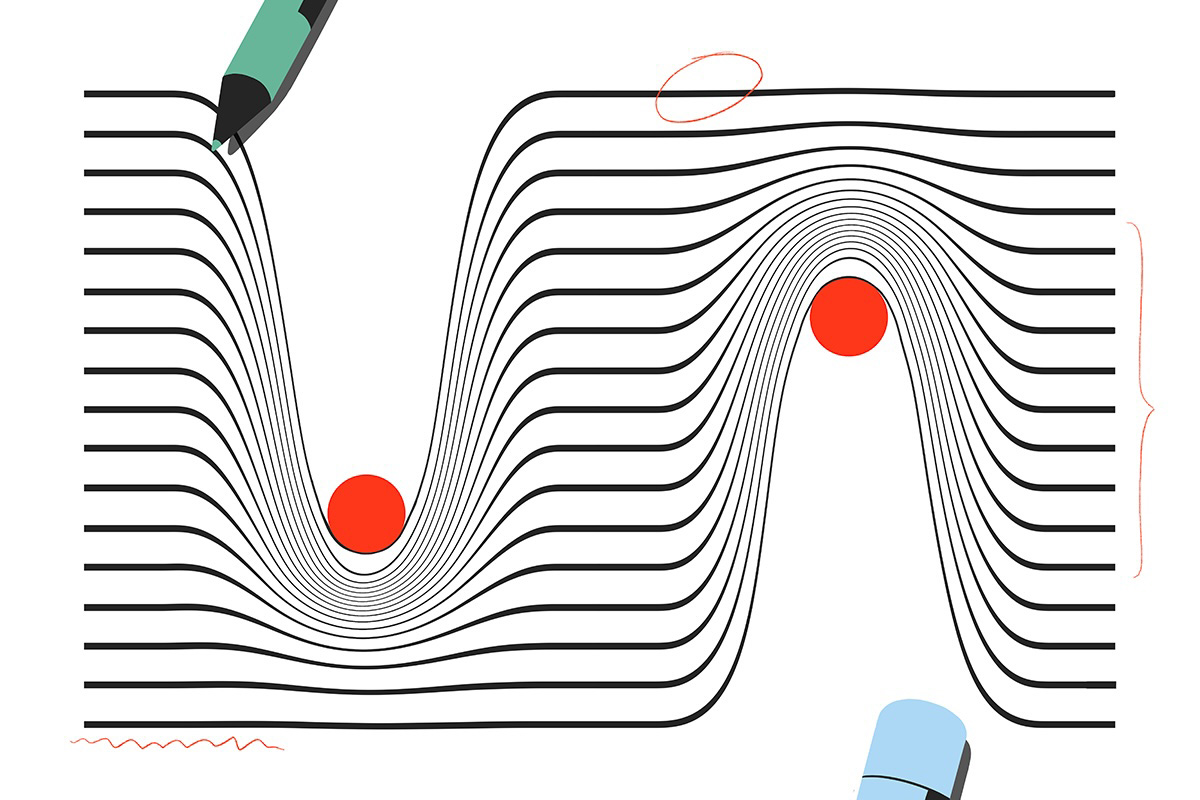

The paper describes a neural network architecture called adaptive resonance theory (ART) that learns in an unsupervised manner (i.e., without a “teaching signal”). A key insight of the paper was how networks can keep learning new things without losing what they already knew—like how we can learn new facts without forgetting old ones—thus addressing what is called the “stability-plasticity” dilemma.

The architecture works by combining two types of information flow: one that brings in new information (bottom-up) and another that checks this against existing knowledge (feedback, or top-down), creating a back-and-forth process that produces complex dynamics.
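For readers who think in code, the back-and-forth described above can be sketched roughly as follows. This is a minimal illustration, not the model from the paper: it assumes binary input patterns and a simplified, "fast-learning" clustering rule in the spirit of ART, whereas the paper itself specifies the network with differential equations. The function name `art1_cluster` and the parameters `vigilance` and `beta` are names chosen for this sketch.

```python
def art1_cluster(patterns, vigilance=0.7, beta=1e-6):
    """Simplified ART-style clustering of binary patterns (illustrative only)."""
    prototypes = []  # one learned binary template per category
    labels = []      # category index assigned to each input
    for pattern in patterns:
        x = [int(bool(v)) for v in pattern]
        size_x = sum(x)

        # Bottom-up pass: score every existing category against the input.
        overlaps = [sum(a & b for a, b in zip(x, w)) for w in prototypes]
        scores = [ov / (beta + sum(w)) for ov, w in zip(overlaps, prototypes)]
        order = sorted(range(len(prototypes)), key=lambda j: scores[j], reverse=True)

        chosen = None
        for j in order:
            # Top-down check: does the stored template match enough of the input?
            if size_x > 0 and overlaps[j] / size_x >= vigilance:
                # Match is good enough: refine the template (here, by intersection).
                prototypes[j] = [a & b for a, b in zip(x, prototypes[j])]
                chosen = j
                break
            # Otherwise this category is rejected and the next best one is tried.

        if chosen is None:
            # No stored category matches: recruit a new one, leaving old ones untouched.
            prototypes.append(x)
            chosen = len(prototypes) - 1
        labels.append(chosen)
    return prototypes, labels


A = [1, 1, 1, 0, 0, 0]
B = [0, 0, 0, 1, 1, 1]
A_noisy = [1, 1, 1, 1, 0, 0]

prototypes, labels = art1_cluster([A, B, A_noisy, A])
print(labels)  # [0, 1, 0, 0]
```

In this toy run, learning the new pattern `B` creates a second category without disturbing the first, so `A` is still recognized afterward. A higher `vigilance` value would demand closer matches and produce more, finer-grained categories.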

Overall, ART describes how the brain might self-organize representations while remaining adaptive to new information in an ever-changing environment.

When did you first encounter this paper?

I first read the paper as an undergraduate student in computer science at the Federal University of Rio de Janeiro in Brazil.

Why is this paper meaningful to you?

As a computer science student, I had not taken courses in biology or neuroscience at this point in my training. The paper shifted my interest from traditional computer science to the biological realm.

One of the reasons for the shift was the realization that one could try to understand biological neural networks by using mathematical modeling in ways that were completely novel to me. As someone who was familiar with the field of biology only as a “collection of facts”—my impression based on high-school biology—this notion was quite an epiphany.

How did this paper change how you think about neuroscience or challenge your previous assumptions?

This paper introduced me to the idea that the brain could be understood in terms of mathematical formalisms based on dynamical systems, parallel and distributed computation, and emergent properties. It also inspired me to think of the brain in terms of autonomous systems, which are related to properties such as self-organization, adaptive learning, attentional control and continuous real-time processing.

How did this research influence your career path?

The impact on my career path could not have been greater. I went on to do my Ph.D. research at Boston University in the Department of Cognitive and Neural Systems, which was headed by the two authors of the paper, Stephen Grossberg and Gail Carpenter. Although I switched to experimental human neuroscience focused on functional MRI sometime after my Ph.D., the computational framework developed by Grossberg, Carpenter and their collaborators has always been extremely influential to my way of thinking about the brain.

Is there an underappreciated aspect of this paper you think other neuroscientists should know about?

The ideas developed in the paper remain largely underappreciated by neuroscientists, perhaps even more so given the success of deep-learning architectures and transformers. Yet those ideas (dynamics, self-organization, continual learning and autonomy) remain as relevant today as they were when the work was first published.
