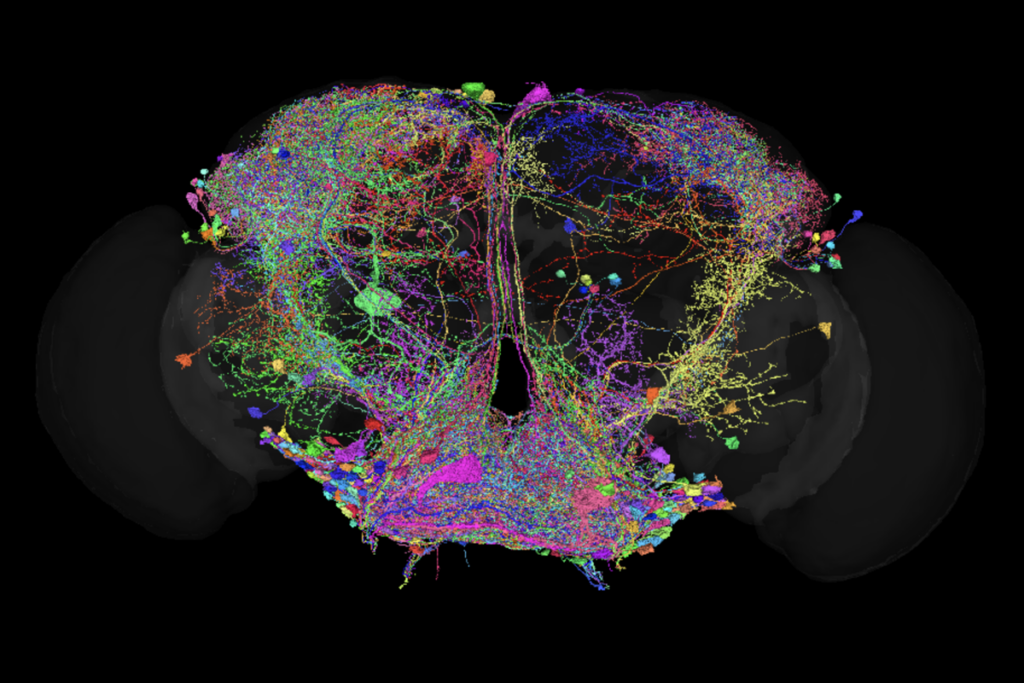

Neuroscience is at a crossroads. The latest advances in electrophysiology and optophysiology, such as Neuropixels probes and light-sheet microscopy, have pushed the boundaries of what we can record from the brain. These technologies are generating vast amounts of data, far more than we have ever dealt with before; single experiments can produce petabytes’ worth. That volume has sparked a critical discussion: How do we store and access all this information? Should we be keeping all raw data or focusing on processed datasets? And if we can’t keep everything, how do we decide what to discard?

Raw data are the most complete and unfiltered record of an experiment, capturing every detail, including those that might seem irrelevant at first. They are indispensable for certain types of research, particularly when it comes to developing new methodologies or uncovering novel insights. Refined spike-sorting algorithms, for example, might extract meaningful patterns from what currently appears to be background activity.

Keeping raw data also enhances transparency and reproducibility, two pillars of rigorous scientific research. By preserving the original data, we enable other researchers to validate our findings and even uncover new insights that might not have been apparent initially. More recently, raw data have become an important training ground for artificial-intelligence models, an increasingly widespread tool in neuroscience research.

On the other hand, though raw data are incredibly valuable, processed data play an equally important role in the research ecosystem. Data that have undergone some type of preprocessing, such as spike sorting, filtering or deconvolution, are often much easier to share and work with.

Sharing processed data can also reduce the burden on those looking to reuse datasets. Rather than redoing all the preprocessing steps, researchers can build on the work of others and focus their efforts on new analyses or interpretations. This efficiency is particularly valuable in collaborative fields such as neuroscience, in which different experts may contribute to different stages of the research process, and for researchers who may not be experts in the nuances of data preprocessing, such as theorists focused on modeling rather than data acquisition.

Both processed and raw data have their unique advantages and challenges. Understanding the trade-offs between the two is crucial for determining what to keep and how to make the most of the data we generate. Though the ideal scenario might involve retaining both, storage costs and access limitations mean that many labs are forced to make difficult choices.

Storing raw data can be costly, both in terms of physical storage infrastructure and the complexity of managing such large datasets. Cloud storage solutions may eventually grow with our data needs, but the expense and challenges of ensuring data integrity over time are not trivial. On the access front, the sheer size of these datasets can make it difficult for researchers to download and analyze data efficiently. This barrier has led to the development of strategies such as “lazy loading,” in which only the parts of the data necessary for a specific analysis are accessed at any given time. This approach, though effective, requires sophisticated data management infrastructure and presents a learning curve for researchers who are used to more traditional methods of data access.
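For readers unfamiliar with lazy loading, the idea can be illustrated with a minimal sketch in Python using NumPy's memory mapping. This is one possible approach under stated assumptions, not a description of any particular lab's or archive's tooling: the recording is assumed to be a flat binary file of 16-bit samples stored sample by sample across channels, and the file name, channel count and helper names (`open_recording`, `load_window`) are hypothetical.

```python
import os
import tempfile

import numpy as np


def open_recording(path, n_channels, dtype=np.int16):
    """Memory-map a flat binary recording as (n_samples, n_channels).

    Nothing is read into memory here; the operating system pages data in
    only when a slice of the returned array is actually accessed.
    """
    itemsize = np.dtype(dtype).itemsize
    n_samples = os.path.getsize(path) // (itemsize * n_channels)
    return np.memmap(path, dtype=dtype, mode="r", shape=(n_samples, n_channels))


def load_window(recording, start, stop, channels):
    """Copy only the requested samples and channels into an in-memory array."""
    return np.array(recording[start:stop, channels])


# Stand-in for a large raw recording: a small synthetic file (assumption).
tmp_dir = tempfile.mkdtemp()
path = os.path.join(tmp_dir, "recording.bin")
full = (np.arange(10_000 * 8) % 1000).astype(np.int16).reshape(10_000, 8)
full.tofile(path)

# Open lazily, then pull out 500 samples from two channels.
recording = open_recording(path, n_channels=8)
window = load_window(recording, start=2_000, stop=2_500, channels=[0, 3])
print(window.shape)  # (500, 2)
```

The same pattern carries over to remote or chunked storage, where only the requested chunks are transferred, though the specifics depend on the file format and the infrastructure behind it.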