Over the past few decades, neuroscience has become so broad and technically sophisticated that individual researchers can no longer fully understand the technical foundations of their experiments. The average systems neuroscience project, for example, requires in-depth knowledge of animal surgery; mechanical, optical and electrical engineering; statistics and computer science. Neuroscientists very rarely have deep proficiency in all of these domains. Research involving custom tools and procedures, such as surgeries and electrophysiology, as well as novel methods, including viruses, algorithms and custom-designed microscopes, can be especially challenging. Unlike with large commercial devices, such as MRI scanners, appropriate use of these tools requires in-depth knowledge of many technical details.

We underestimate the price of the suboptimal experiments that result from this lack of expertise. Small mistakes can have large consequences: The wrong grounding scheme on an electrophysiology implant can mask reward responses. Improperly soldered connectors can lead to missing weeks of data. A math error in a laser controller can destroy months’ worth of samples. In addition, fear of such mistakes incentivizes researchers to stick to methods they know well, which slows innovation. Even more seriously, applying invalid statistical methods; forgetting to include key controls; or failing to correct systematic artifacts produced by the wrong virus serotype, buffer, microscope drift or inappropriate data pre-processing can lead to incorrect results and wrong scientific inferences.

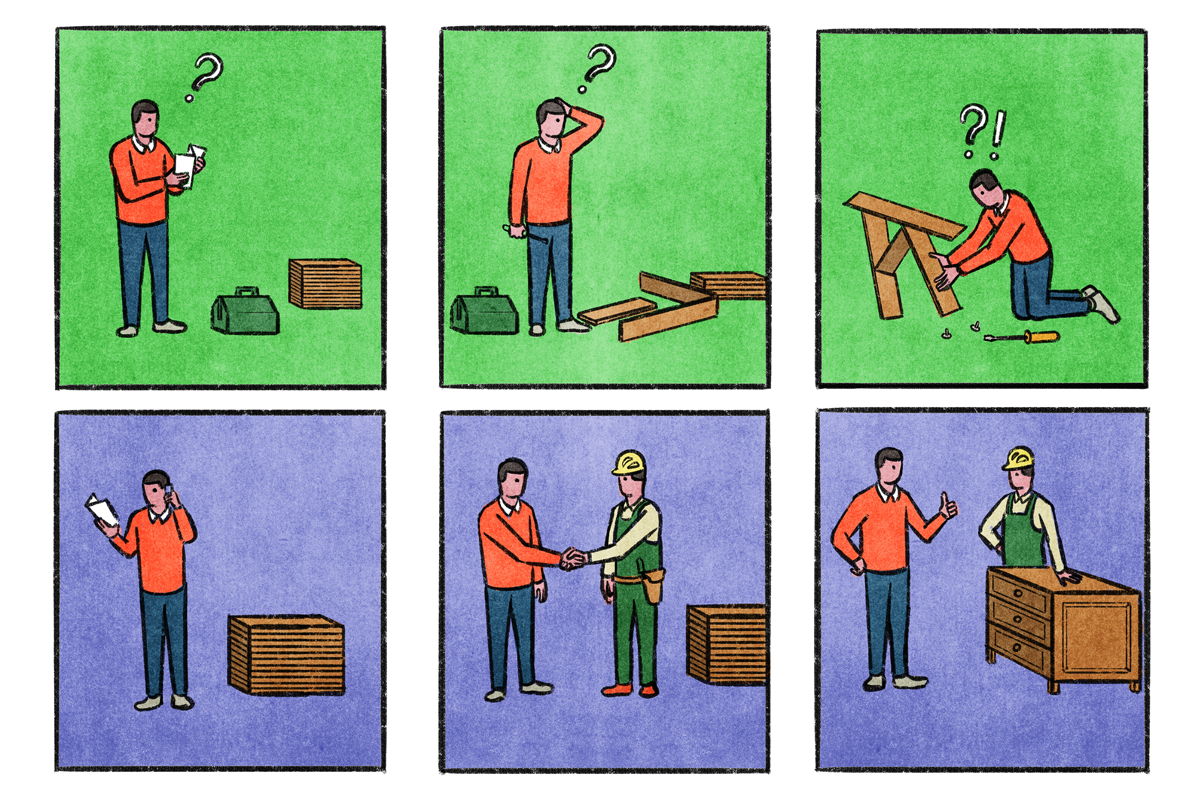

For all these examples, there are experts who could spot and fix the problems if only they were involved in the projects, whether to help plan at the start, to troubleshoot when an issue arises, or to take on specific outsourced tasks.

Neuroscientists have been reluctant to relinquish their DIY ethos for a variety of reasons. One is the belief that tinkering with methods and pushing techniques beyond known limits or intended purposes is an important method of discovery. Learning about the technical details of our work, and even learning from mistakes, can make us better scientists, particularly for students and postdocs who are explicitly expected to pick up new skills. But not all technical training makes one a better scientist. The most useful types of in-depth technical training are different for everyone. Training electrophysiologists to understand electrical engineering concepts is useful. Expecting them to also become mechanical engineers will not add to their ability to reason about the input impedance of neurons but will instead lead to wasted months trying to fix an incorrectly home-built recording rig.

Second, expert help can seem expensive. But it’s often cheaper in the long run than having a trainee spend months solving a simple problem, such as a faulty injector or the wrong glue. Beyond the wasted resources — salary, facility costs, supplies — the opportunity cost, missed deadlines and compounded career implications of such delays are bigger still. Economically speaking, avoiding such delays should then be worth a lot of money.

Scientists also often overestimate their ability to become and remain experts across too many domains, and underestimate the amount of time required to solve technical problems. This results in a belief that asking a colleague with relevant expertise for help is enough, when in fact many problems require multi-day visits to diagnose issues, write software or train lab staff.

Putting the reluctance of individual scientists aside, adopting a culture of expertise will also require some shifts in the field. The current funding and publishing system punishes specialization and undervalues technical expertise. For example, most published papers have one (or at best a few) first and last authors. As long as we hang on to the idea of singular intellectual ownership as the main currency in neuroscience, people are incentivized to shoulder as much of their own project as they can rather than spending significant time helping someone else’s. A more granular means for giving credit and attribution — one that acknowledges that neuroscience is a team sport — would improve scientific progress.

The field also needs to expand access to expert help. In some domains, institutes such as the Howard Hughes Medical Institute’s Janelia Research Campus in Ashburn, Virginia, where I work; the Allen Institute in Seattle, Washington; and the Sainsbury Wellcome Centre in London, England, demonstrate the power of in-house technical expertise. But at most universities, core facilities serve far too few labs to specialize in narrow areas and are permanently either over- or undersubscribed, which often leads to them getting shut down as too costly for the work they provide. This problem would be solved by opening them up to external work, allowing more specialization and evening out the workload, as well as charging sustainable rates for their work, effectively turning them into companies.

Currently, the main source of outsourced technical expertise in neuroscience is tool manufacturers, which provide support and, in some cases, training to scientists. But this is usually tied to purchases, and such companies are often unclear on how to engage with technical work beyond their own tools. To remedy this, we need more flexibility: consulting contracts for bespoke, project-specific services should become more common. Technical consulting is already commonplace in some areas, such as server administration, vector cloning, mouse transgenics or air table installation. In systems neuroscience, some small, domain-specific consulting companies already offer such services, including Open Ephys and Aquineuro for freely moving imaging and electrophysiology, and Independent NeuroScience Services for microscope design.

The evolution of Open Ephys, of which I am co-founder, reflects the need for these kinds of services. The organization launched in 2010 as a nonprofit that disseminated scientist-built, open-source devices and software for systems neuroscience, with the aim of reducing the amount of time trainees had to spend getting their experimental rigs up and running. It has recently expanded its offerings, beginning to provide consulting services ranging from custom design work to lab-specific training modules. (I receive no financial compensation for my role at Open Ephys.)

These companies show that this general approach is viable, but we need many more. For the majority of technical issues, paying for expertise is not yet an option.

To expand the market, funding agencies will need to include a means of paying for expertise. Currently, grants typically include funds only for equipment and scientist salaries. If they allow consulting fees, budgets are often tailored to student and postdoc salary levels. Professional technical consulting will be more costly — people with the relevant expertise need career paths that offer stability, salaries and work environments that are competitive with industry. However, these costs would be offset by removing needless delays, reducing the cost of the project overall and increasing the quality and robustness of scientific results.

In sum, a cultural shift that increases the role of technically demanding scientific work as a career path — in academic labs, companies or publicly funded organizations, or as consultants — would be good for science, good for trainees and good for funding agencies seeking to increase the impact of each dollar they grant.