Imagine the ultimate systems neuroscience paper. What it looks like could tell us much about where neuroscience, and how we communicate it, is going. Extrapolating from the evolution of systems neuroscience over the past century, we might envisage something like this:

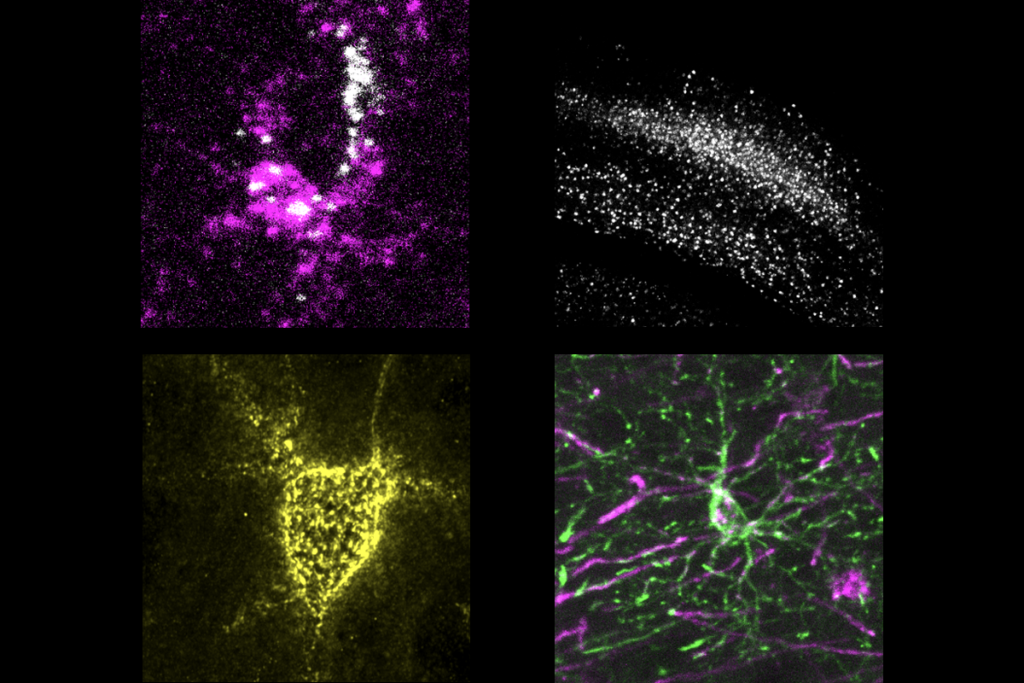

It begins by reporting the activity of every neuron in the brain of a human across their lifespan, from birth to death. The authors have perfected the molecular ticker tape, on which every spike of a neuron adds a new fluorescent molecule to a protein strand inside it. Sequencing the proteins gives a complete history of neural activity over the lifespan; sequencing each neuron’s own messenger RNA tells us which of the then-known 104,000 types of neuron it is.

The researchers report every nuance of the person’s behavior. Molecular sensors in muscle predict their movements: Eye movements deduced from the contraction patterns of the three pairs of muscles controlling each eyeball; speech inferred from the pattern of muscular contractions of the jaw, throat, lips and tongue. Retina-mounted cameras, nano-sized cochlear implants and flexible 2D camera sheets on their clothing capture everything they see and hear.

With this unprecedented dataset, the authors train a deep network of 1,000 layers and 2 trillion weights, dubbed the Transformer Generative Deep Dynamics network (TraGeDy Net), to predict half a lifespan’s worth of behavior from the neural activity. This network then predicts the person’s moment-to-moment behavior in the other half of their lifespan—chasing their hat blowing away in a gale, or stumbling in a hole in the grass and twisting their ankle—from the neural activity with an R2 value of 0.99.

And that’s it. We’re done. The ultimate paper has shown we can successfully map a human’s entire lifetime of neural activity at the single-neuron level to their behavior.

W

hat do we learn from imagining this ultimate paper? We learn that our data will only ever get more complex. Every spike from every one of the 86 billion neurons in the human brain creates forbiddingly high-dimensional data; in my book “The Spike: An Epic Journey Through the Brain in 2.1 Seconds,” I made a back-of-the-envelope calculation of roughly 34 billion spikes in the cortex alone over the average human lifespan. That’s an eye-watering raster plot. The behavioral data would be far more complex, starting with a frame of pixels for every few milliseconds of a life.Such a paper would be remarkable. But no one would ever want to read it. The main text would have the density of a singularity or be thousands of pages long—or both—and convey but a sip of the lake of data, with valuable insights obscured in hundreds of supplementary figures. Tucked away in supplementary figure 131 is the comparison of the TraGeDy Net with control benchmark models, showing that regularized regression could achieve an R2 value of 0.92 on the same data, but no one will notice. There is just too much data.

Indeed, one might argue we’re already there: A growing body of papers on systems neuroscience and on giant simulations of neural circuits are beyond the point that anyone can reasonably understand them end to end. Some research papers are already more than 100 pages long, with tens of supplementary figures—an entire Ph.D. thesis in disguise.

A PDF is not how to convey data this complex. Nor is a webpage of the same text, no matter how clever the design, nor whether it can be updated “live” or not. Our publishing model must, inevitably, change, and preferably long before we reach that ultimate paper.

Some hints of what that might look like come from the data-intensive studies we already have, such as FlyWire, a full connectome of the adult female Drosophila brain. This publishing model releases full data via a portal, provides descriptions of how the data were gathered and offers tools for accessing and querying it. For Flywire, one of the main thrills of the recent splurge of papers across the Nature journals was not the connectome itself (impressive as it was) but the studies that built on it. Researchers combined the wiring diagram with recent inferences of whether each connection was inhibitory or excitatory, and they used that combination to predict the dynamics of responses to visual input or to predict visual computations of specific cell types.

The Allen Brain Institute has pursued this model for more than a decade, releasing interfaces to their data collections on neuron types and neuron wiring across species. The Blue Brain Project, to its credit, released an interface for their syntheses of cell types and numbers in the mouse brain. But the dense, fixed text of papers announcing these resources remain the ultimate output. Science is done on top of these, generating more papers, describing ever more complex data.

S

o how do we take the next step and remove the paper itself? Replace it with a bot.We’re now all familiar with the power of large-language models (LLMs). They can already plot graphs from data, answer queries on specific sources, give syntheses of and even critique a paper’s text. The logical next step is to use that power directly on the data—to have the LLM sit on top of the data portal.

It’s easy to envision the scientists conducting the hypothetical study of the human lifespan harnessing LLMs to tame the complexity of their data. Now imagine yourself tackling the human-lifespan study armed with this paper-bot interface. You can run the authors’ pre-configured prompts—“Summarize the main results” or “Show the evidence for predicting behavior”—to get a basic understanding of what was done and why. But you are free to prompt however you like, asking what data were collected from retina-mounted cameras, how exactly they were collected and the results of specific analyses—whatever questions you need to ask to understand the scientific insights from the study (rather than what the authors thought people needed to know).

Querying the paper-bot solves the problem that papers are linear but science is not. It will mean no longer having to deal with the fragmentary results scattered across a paper’s text and supplemental material. For our imagined human lifespan paper, simply asking the paper-bot how well TraGeDy Net performed compared with its regularized-regression controls will immediately deliver both text and a figure summarizing their predictive power without having to dig through hundreds of supplemental figures with no context and poorly drafted legends. Then simply asking whether TraGeDy Net did meaningfully better than the controls will give the proper comparison, with statistics, that the authors neglected to do.

Rather than static text, a paper becomes a release of data with its bot. Each new release of data, with updated paper-bot, is the token of scientific output we currently call a “paper.” The job of researchers becomes that of gathering and releasing their data, analyses and preconfigured prompts for the paper-bot that gives their view of the context, the results and their meaning. Author credit? Ask the paper-bot who did what.

It’s not hard to imagine how powerful these bots would become. You could use them to ask your own questions of the human lifespan data, looking for correlations between variables in the behavior, for example. In this way, new science would arise directly from the data through the paper-bot itself, rather than via the laborious cycle of further analysis, paper writing and review. There’s no reason to stop at a bot for each data release. Meta-bots could synthesize data across studies, ready to field your queries as you try to understand what we know. No doubt there would be artificial-intelligence scientists standing ready to prompt each paper-bot with new queries and piece together new knowledge.

Would this really mean the end of papers? That’s for the field to decide. Perhaps you’ll be satisfied reading an AI bot’s report of the latest research; perhaps you might prefer a return to the original model of Nature, in which authors publish a summary of the serious work elsewhere—in this case, on a data portal. Whatever it may contain, there’s one thing we can say for sure: If there is an ultimate paper, it won’t be a paper.