Andrew Whitehouse never expected his work as an autism researcher to put him in danger. But that’s exactly what happened soon after he and his colleagues reported in 2020 that few autism interventions used in the clinic are backed by solid evidence.

Within weeks, a range of clinicians, therapy providers and professional organizations had threatened to sue Whitehouse or had issued complaints about him to his employer. Some harassed his family, too, putting their safety at risk, he says.

For Whitehouse, professor of autism research at the Telethon Kids Institute and the University of Western Australia in Perth, the experience came as a shock. “It’s so absurd that just a true and faithful reading of science leads to this,” he says. “It’s an untold story.”

In fact, Whitehouse’s findings were not outliers. Another 2020 study — the Autism Intervention Meta-Analysis, or Project AIM for short — plus a string of reviews over the past decade also highlight the lack of evidence for most forms of autism therapy. Yet clinical guidelines and funding organizations have continued to emphasize the efficacy of practices such as applied behavior analysis (ABA). And early intervention remains a near-universal recommendation for autistic children at diagnosis.

The field urgently needs to reassess those claims and guidelines, says Kristen Bottema-Beutel, associate professor of special education at Boston College in Massachusetts, who worked on Project AIM. “We need to understand that our threshold of evidence for declaring something evidence-based is rock-bottom low,” she says. “It is very unlikely that those practices actually produce the changes that we’re telling people they do.”

How this dearth of high-quality data on autism intervention has persisted despite decades of dedicated research is murky. Part of the problem may be that autism researchers can’t seem to agree on what threshold of evidence is sufficient to say a therapy works. A system of entrenched conflicts of interest has also artificially kept this bar low, experts say.

In the meantime, clinicians have to make daily decisions to try to support autistic children and their families, says Brian Boyd, professor of applied behavioral science at the University of Kansas, who studies classroom-based interventions. “They can’t always wait for science to catch up.”

But clinicians also have an ethical responsibility to consider the safety and costs of interventions, Whitehouse says. This is especially true given that many autistic people have reported experiencing physical or emotional harm from practices such as ABA — adverse events that are rarely tracked.

“Evidence has to drive that conversation,” says Whitehouse, who is hopeful for the field’s future despite its persistent problems. Several teams are tracing out the paths the field needs to follow — toward more sophisticated trials that compare different therapies and adapt to participants’ needs.

“The field is just starting to get the high-quality evidence it needs,” Whitehouse says.

The problems facing autism intervention science date back to the field’s foundation in the 1970s and ’80s. Some initial studies, though groundbreaking at the time, had small sample sizes and statistical shortcomings. Ole Ivar Lovaas’ seminal 1987 ABA study, for example, was ‘quasi-experimental,’ in that participants weren’t assigned to groups randomly. And other studies from this era followed a ‘single-case’ design, in which participants served as their own controls.

Even as researchers in other disciplines began to prioritize randomized controlled trials, widely considered the gold-standard design for treatment studies, autism intervention struggled to keep up, says Jonathan Green, professor of child and adolescent psychiatry at the University of Manchester in the United Kingdom. From the start, some researchers deemed randomized controlled trials neither ethical nor feasible for a condition as complex as autism. And that resistance fed into a culture of accepting a lower standard of evidence within the field, says Green, who developed the parent-training intervention PACT.

“These are legacy ideas, but they persist,” he says, and probably keep the field from advancing toward more effective interventions. “The real disappointment of this is what we’re missing by not doing this well.”

Less than a third of the studies that test ABA-related interventions are randomized controlled trials, according to Project AIM. And single-case designs make up the bulk of studies included in national reports issued to U.S. clinicians. For example, the 2021 National Clearinghouse on Autism Evidence and Practice (NCAEP) report deemed 28 practices evidence-based, including many behavioral interventions, yet 85 percent of the studies it reviewed used a single-case design. Similarly, the 2015 National Standards Project (NSP) report identified 14 effective interventions for autistic children, adolescents and young adults but drew on a set of studies of which 73 percent were single-case.

To exclude single-case studies would be to ignore important information, says Samuel Odom, senior research scientist at the University of North Carolina at Chapel Hill, who co-directed the NCAEP review and contributed to the 2015 NSP report. Researchers need alternatives to randomized controlled trials, he says. “If you drill down so far, in terms of rigor of methodology, at least in developmental psychology, one does find that nothing works.”

But single-case designs are not suited to track long-term developmental changes, which are often the focus of intensive interventions, says Micheal Sandbank, assistant professor of special education at the University of Texas at Austin. Sandbank led Project AIM, in which the team chose to omit single-case design studies entirely. These types of studies can help researchers detect changes in specific skills, such as learning classroom routines in school, she says, but “we can’t make recommendations based on a whole literature of single-case design work.”

A

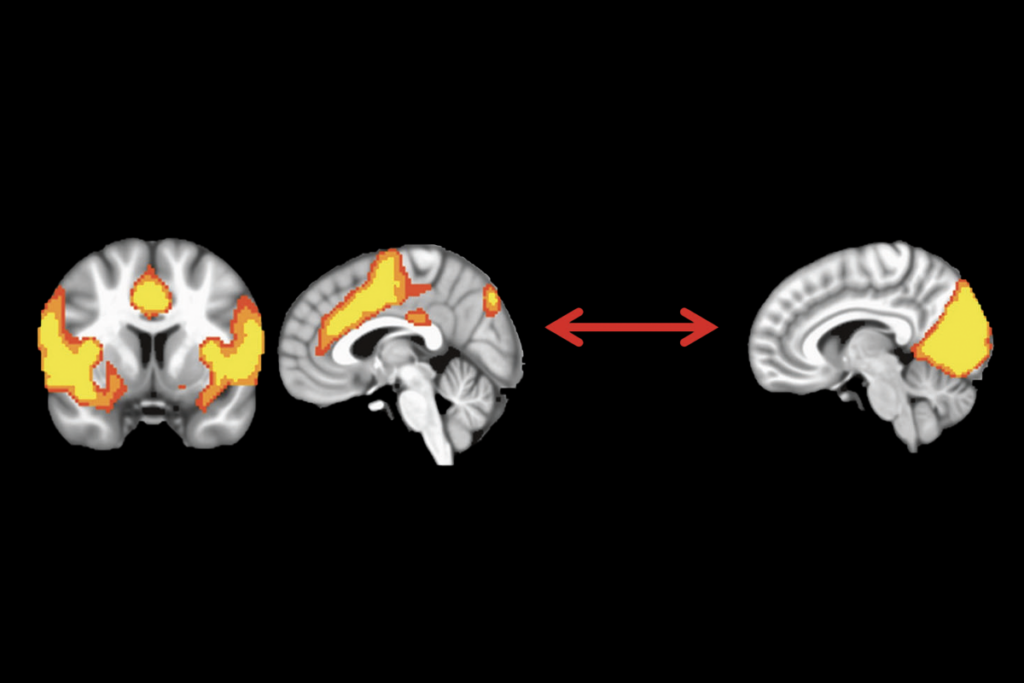

midst this ongoing debate lurks a more menacing influence over the field’s evidence problem: a system of intertwined conflicts of interest. It’s these forces that pushed back so strongly when Whitehouse and his team exposed holes in the intervention literature, he says.“There is a real sinister edge to enforcing the status quo,” says Whitehouse, who has conducted randomized controlled trials to explore preemptive therapy for infants showing signs of autism.

Autism therapies make up a multibillion-dollar industry, at least in the United States, thanks in large part to state-wide insurance mandates and financial firms backing some ABA providers. Some say this investment increases access to care, but deeper monetization of autism treatment may also compromise the field’s commitment to high-quality evidence, Whitehouse says. Private equity demands profits, and “in a tension between profits and good clinical practice, profits will always win out,” he says.

Financial concerns also fuel several potential conflicts of interest in the field, Bottema-Beutel says. These competing interests may prevent progress in critically evaluating evidence, she says, because “there’s just so many conflicts of interest that are layered on top of each other that make it really difficult for people to switch course and say this is not going well.”

For example, the editorial boards of journals that publish behavioral intervention research, such as the Journal of Applied Behavior Analysis, often include many board-certified behavior analysts (BCBAs), who are trained to provide ABA, Bottema-Beutel says.

Many BCBAs also contributed to the NSP report, which included behavioral therapies among its list of ‘established interventions.’ And the report was funded in part by the May Institute, a nonprofit organization that provides ABA services throughout the U.S. The involvement of BCBAs and the May Institute appears in the report, but the potential therein for conflicting interests is not disclosed.

It’s not that practicing BCBAs should be barred from doing this research, Bottema-Beutel says, but their conflicts need to be stated clearly so others can read their work with appropriate scrutiny.

Rigorously interrogating potential competing interests within research agendas was not common when the NSP report was published, says Cynthia Anderson, senior vice president of ABA at the May Institute and director of the institute’s National Autism Center. “I don’t think that was even on anyone’s radar as something as to be thinking about,” she says. Anderson and her team are working on a new report, in which they are exploring questions such as whom autism interventions are designed to help, and they plan to disclose its funding from the May Institute, she says.

Researchers with a background in ABA also worked on the NCAEP report, which listed several behavioral interventions as evidence-based practices, but no one on the team stood to gain financially from its results, Odom says. The key to avoiding the influence of bias in evaluating the literature is to be open to any type of intervention, behavioral or not, that passes muster, he says. “We tried to really follow the data.”

Avoiding bias can also be difficult when autism interventions are tested by the same researchers who create them — a common overlap that is rarely reported in published work.

Researchers aren’t often motivated to move out of their silos and test interventions independently or in combination with others, says Connie Kasari, professor of human development and psychology at the University of California, Los Angeles, who developed a play-based intervention called JASPER. “It’s insane, but it all comes down to money.”

Even so, Kasari says she’s optimistic about the field’s prospects. “We have a ways to go, but I feel like we have a direction. We just need to do it.”

To that end, the number of randomized controlled trials in the field has jumped from just 2 in 2000 to 48 in 2018, and most of these occurred after 2010, according to a 2018 review. Yet the same review revealed that only 12.5 percent of those randomized trials had a low risk of bias.

Researchers need to break the pattern of testing their own interventions and prioritize independent replications, Sandbank says. It’s possible that these studies may turn up less promising results than the original work, she says, but “we have to be unafraid to find that out.”

To move forward, the field also needs to move beyond trials testing single interventions against a control toward studies that compare multiple interventions, says Tony Charman, professor of clinical child psychology at King’s College London in the U.K. The goal should be to provide families with the pros and cons of different treatments side by side so they can make informed choices, he says. “We’re definitely quite a way from that.”

Only a handful of studies have explored the relative effects of different treatments. For example, a 2021 study found that neither an ABA-based intervention nor the Early Start Denver Model (ESDM), a naturalistic intervention that harnesses a child’s interests to teach new skills, outperformed the other. More trials like these could help to reveal which interventions provide the most benefits for the least amount of time and cost.

Researchers are also testing sequences of interventions. For instance, Kasari and her team are testing a form of JASPER both before and after a version of ABA. Some children may do better starting with a structured approach such as ABA, whereas others may benefit from beginning with a naturalistic approach such as JASPER, Kasari says. These sequential multiple assignment randomized trials, or SMART studies, will help identify how to personalize treatment strategies for individuals, she says.

To truly advance autism interventions, top-down changes in the regulation of science are needed, Green says. “The outlets for trial reporting have a lot to answer for.” Many autism journals need to tighten their criteria for publishing autism intervention studies, he says. Similarly, researchers need funding that incentivizes them to pursue complex, costly study designs, such as SMART studies, as well as independent replications.

Ultimately, moving the field forward will require individual investigators owning their obligation to do high-quality science instead of outsourcing blame, Whitehouse says. “Culture change is hard, but it is critical to deliver on our clinical promise to children and families to deliver safe and effective therapies.”