Virtual reality yields clues to social difficulties in autism

Assessing social ability in adults with autism requires controlled tests involving real-time social interactions. Virtual reality makes this possible.

You’re walking down a narrow corridor. Someone is walking toward you, so you step to one side. But in that moment, they step to the same side. You make eye contact, grin awkwardly and then, without a word, negotiate a way around each other.

Our lives are full of these delicate social dances. Whether we’re having a conversation, playing a game or trying to avoid collisions with passersby, our social interactions are reciprocal. My behavior affects your behavior, which in turn affects my behavior.

But until the past few years, research into social cognition — the psychology of human interaction — has been decidedly non-interactive. Participants looked at images of faces, read short stories about social scenarios, or watched videos of other people interacting. They didn’t actually interact with another person.

Take the Sally-Anne task, which is widely used in studies of autism to test ‘theory of mind,’ the ability to understand other people’s beliefs, intentions and emotions. The participant watches an interaction between two dolls and is asked to predict the behavior of one of the dolls based on an understanding of what the doll ‘believes.’

When children with autism answer incorrectly, the assumption is that they have failed to read the doll’s mental state and that similar failures explain their difficulties interacting with other people. However, many adults with autism pass this test, and even others that are more challenging, yet still experience severe social difficulties.

These observations indicate that traditional tests of social cognition fail to capture key aspects of social interactions, particularly in adults, that are essential to understanding autism.

We need tests that allow us to precisely measure behavior in complex, reciprocal social interactions. To achieve this goal, we and others are investigating the use of virtual-reality technology as a tool for research and, potentially, therapy.

Using these technologies, we have confirmed that problems with joint attention — the ability to coordinate with someone else so that you are both paying attention to the same thing — persist into adulthood. We have also gained important insights about the roots of these problems, and we hope that adults with autism can one day practice their social skills within specially designed virtual environments.

Engaging others:

In 2013, a team led by Leonhard Schilbach, now at the Max Planck Institute of Psychiatry in Munich, Germany, published a new manifesto for social cognition research. These researchers argued that social cognition should be investigated using a ‘second-person neuroscience’ approach, in which behavior and brain responses are measured while people engage in reciprocal interactions.

This emerging field offers exciting possibilities for understanding autism. But it also presents serious challenges: Experimental investigations require precise control of the conditions so they can be repeated and manipulated consistently. Achieving this control in the context of a realistic social interaction is far from straightforward.

In a review article published earlier this year, we addressed these issues [1]. We focused on studies of joint attention, which involves both responding to your partner's attempts to attract your attention and initiating joint attention by guiding that person to an object or location of interest. Joint attention is important in the development of language and social skills, and a delay in its development is one of the most reliable early signs of autism.

One approach used in a number of studies has been to measure brain responses while participants are engaged in a joint-attention game with another person, either face to face or through a live video feed. However, this relies on the partner behaving consistently for all participants.

Differences in the brain responses of people with and without autism may reflect differences in the neural mechanisms of joint attention. But they could just as well reflect variation in the behavior of the partner, or in the participants’ sensitivity to other social cues, such as smiles or eyebrow raises, from the partner.

Search party:

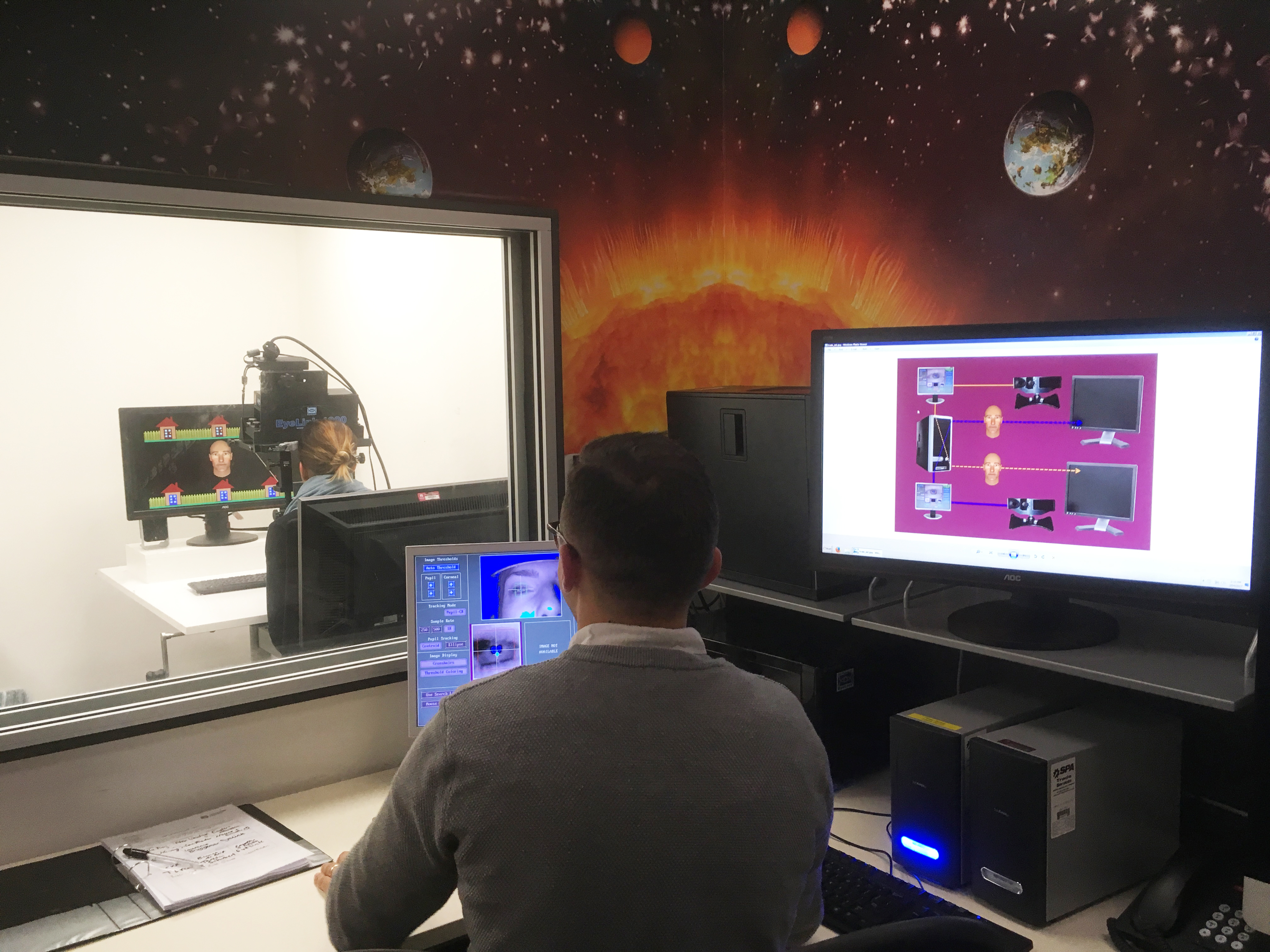

To address these issues, we and other researchers have replaced the human partner with a virtual partner, or avatar, whose behavior is controlled by a computer [2].

In our own studies, participants interact with an animated virtual character called ‘Alan’ whose face appears in the center of a computer screen [3]. We use an eye tracker to see where on the screen the participant is looking, and program Alan to respond to her eye movements. This gives us complete control over the interaction.

The participant works with Alan to catch a burglar who is hiding in one of six houses on the screen. Each trial begins with both Alan and the participant searching the houses. If the participant finds the burglar, she initiates joint attention, guiding Alan to the burglar by making eye contact and then looking at the correct house. If, on the other hand, Alan finds the burglar, he initiates joint attention and the participant responds.
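
The participant-initiated case can be pictured as a simple gaze-contingent rule. The following is a minimal sketch, not the authors' software: it assumes the eye tracker has already been reduced to an ordered list of fixated regions, and the labels (`"face"`, `"house_4"`) and function names are hypothetical.

```python
from typing import List, Optional

FACE = "face"  # hypothetical label for a fixation on Alan's face (eye contact)


def signalled_house(fixations: List[str]) -> Optional[str]:
    """Return the house the participant points out with her gaze, if any.

    Assumption: a bid for joint attention is a fixation on Alan's face
    followed immediately by a fixation on a house.
    """
    for previous, current in zip(fixations, fixations[1:]):
        if previous == FACE and current != FACE:
            return current
    return None


def alan_is_guided_correctly(fixations: List[str], burglar_house: str) -> bool:
    """True if the signalled house is the one hiding the burglar."""
    return signalled_house(fixations) == burglar_house
```

For example, the sequence `["house_2", "house_4", "face", "house_4"]` signals `"house_4"`: the earlier looks at houses belong to the search, and only the look that follows eye contact counts as a bid.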

The game requires participants to coordinate their behavior with Alan, make use of eye contact and flexibly assume different roles in the joint-attention process.

The search phase of our task also adds a complexity that is absent from other studies. Because Alan makes many eye movements during the trial, the participant has to decide whether a particular eye movement is intended to guide her to the burglar or is simply part of Alan’s ongoing search.

We have found that participants respond much faster if we remove the search phase so Alan’s eye movements always indicate the burglar’s location [4]. This suggests that what we call ‘intention monitoring’ — working out whether a cue such as an eye movement is intended to be communicative — is an important part of joint attention.
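
Response speed in a task like this can be read from the eye-tracking record. The sketch below is one plausible way to do so, not the published analysis: it assumes time-ordered gaze samples given as `(timestamp in milliseconds, fixated region)` pairs, and the function name and region labels are hypothetical.

```python
from typing import List, Optional, Tuple


def response_latency_ms(
    cue_onset_ms: float,
    samples: List[Tuple[float, str]],
    cued_house: str,
) -> Optional[float]:
    """Time from Alan's cue to the participant's first look at the cued house.

    Assumption: samples are sorted by timestamp. Returns None if the
    participant never looks at the cued house after the cue.
    """
    for timestamp_ms, region in samples:
        if timestamp_ms >= cue_onset_ms and region == cued_house:
            return timestamp_ms - cue_onset_ms
    return None
```

Comparing these latencies between trials with and without a search phase is the kind of contrast described above. Any values fed to the function here would be illustrative, not data from the studies.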

In research published in April, we used this task with a group of adults with autism [5]. Overall, they made slightly more errors than controls did. They were also slower to respond to the avatar’s eye-gaze cue, but were just as fast as controls when we replaced Alan’s eye-gaze cues with an arrow pointing to the burglar’s location.

This finding suggests that the difficulties of adults with autism are specific to the social interaction involved in the task, and cannot be explained by other factors that might affect performance — such as the ability to orient attention or control eye movements.

Our findings suggest that subtle joint-attention difficulties continue into adulthood, at least for some people with autism. This contrasts with evidence from other studies suggesting that children and adults with autism have no difficulty responding to eye-gaze cues on a computer screen [6].

We think this may reflect the intention-monitoring component of our task, which makes it more akin to a real-life interaction.

Immersive interactions:

In our research so far, participants have interacted with a virtual character on a computer screen. The next step is to use fully immersive virtual-reality headsets to recreate more realistic social interactions, in which individuals must evaluate multiple social cues at once, including eye gaze, head orientation, hand gestures, speech and facial expressions.

We, among others, are also considering clinical applications of new immersive virtual-reality technologies. Virtual simulations could perhaps be used for social-skills training in which elements of a social interaction are introduced gradually. Virtual meeting spaces could also allow people with and without autism to interact in a safe and controlled environment that reduces anxiety and sensory overload.

Many of the insights in our research have come from adults with autism. They’ve told us how to make our task easier to understand, and they’ve described the strategies they’ve used to complete the task. Many have told us that although the virtual interaction is cognitively challenging, it is less intimidating and anxiety-provoking than real-life interactions.

Involving people with autism in research is key to its success. As virtual-reality technology improves and becomes increasingly affordable, the possibilities may be limited only by our collective imagination.

Nathan Caruana and Jon Brock are research fellows at Macquarie University in Sydney, Australia.

References:

1. Caruana N. et al. Neurosci. Biobehav. Rev. 74, 115-125 (2017)

2. Schilbach L. et al. J. Cogn. Neurosci. 22, 2702-2715 (2010)

3. Caruana N. et al. Neuroimage 108, 34-46 (2015)

4. Caruana N. et al. PeerJ 5, e2899 (2017)

5. Caruana N. et al. Autism Epub ahead of print (2017)

6. Leekam S. Philos. Trans. R. Soc. Lond. B Biol. Sci. 371, 20150082 (2016)
