Technology from ‘Harry Potter’ movies brings magic of brain into focus

The same techniques that generate images of smoke, clouds and fantastic beasts in movies can render neurons and brain structures in fine-grained detail.

Two projects presented yesterday at the 2017 Society for Neuroscience annual meeting in Washington, D.C., gave attendees a sampling of what these powerful technologies can do.

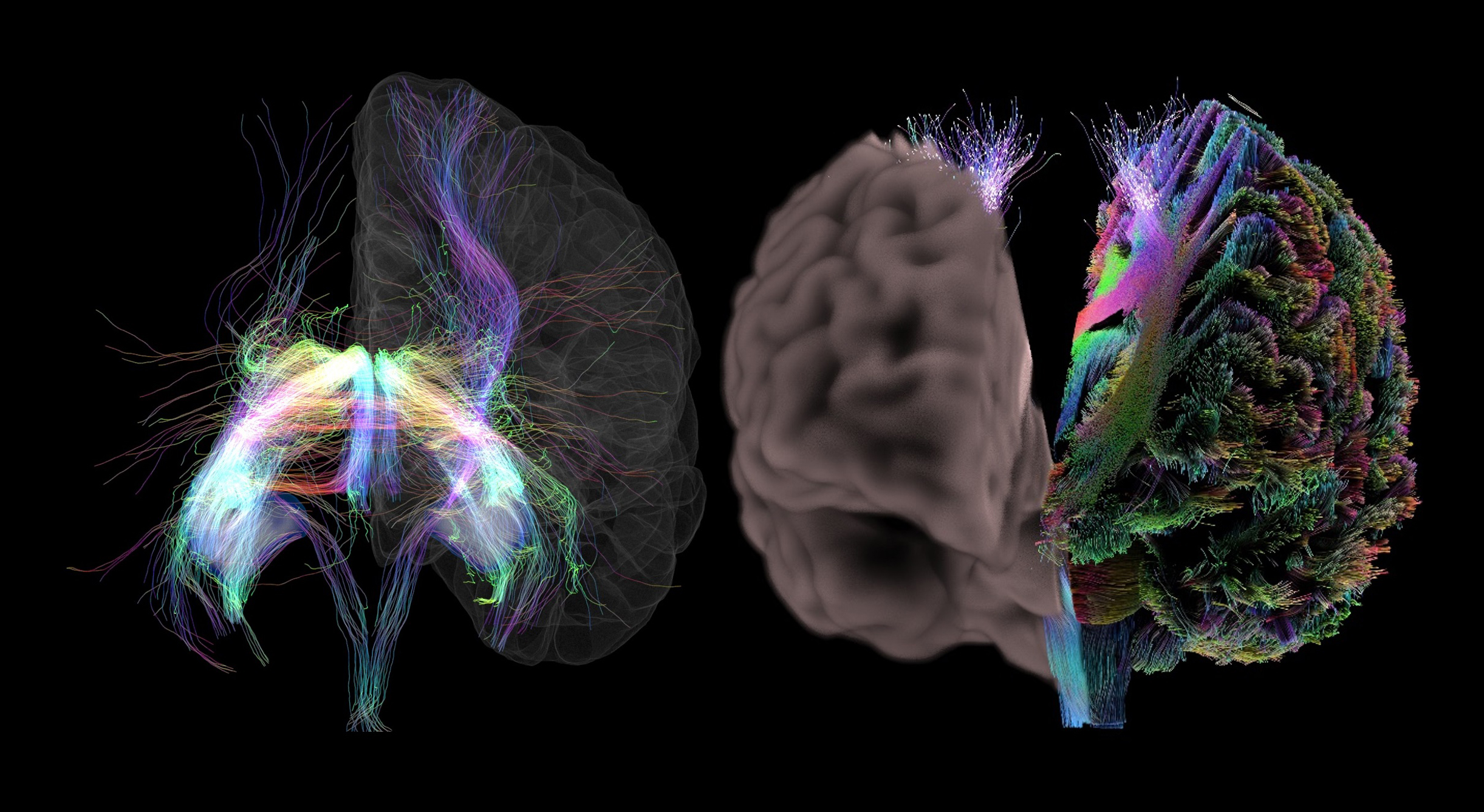

“These are the same rendering techniques that are used to make graphics for ‘Harry Potter’ movies,” says Tyler Ard, a neuroscientist in Arthur Toga’s lab at the University of Southern California in Los Angeles. Ard presented the results of applying these techniques to magnetic resonance imaging (MRI) scans.

The methods can turn massive amounts of data into images, which makes them well suited to rendering brain scans. Ard and his colleagues developed code that lets them easily feed data into the software. They plan to make the code freely available to other researchers.

The team is also combining the visualization software with virtual reality so that scientists can explore the brain in three dimensions and even perform virtual dissections. In one demo, users can pick apart a color-coded, segmented brain as if it were built from Lego pieces.

“This can be useful when learning neuroanatomy,” Ard says. “The way that I learned it, we had to look at slices, and that’s real hard. This is a way that allows you to understand 3-D structure better.” The team plans to release the program, called Neuro Imaging in Virtual Reality, online next year.

Tracing neurons

Virtual reality can also be used to trace neurons: Scientists can don the headsets and step into their microscopic scans of neurons.

Just 1 cubic millimeter of human brain tissue packs about 50,000 neurons, each of which forms some 6,000 connections with its neighbors. Traditional techniques require researchers to go through 2-D computer images of stained neurons, constantly rotating the view to see what lies behind a neuron's branches.

New virtual reality software lets scientists walk around their images and trace neurons using handheld controllers. This is a more natural and direct way to interact with 3-D information, says Will Usher, a graduate student in Valerio Pascucci's lab at the University of Utah in Salt Lake City, who demonstrated the program.

To test the program, four neuroanatomists with professional tracing skills mapped a series of labeled neuron image stacks from the monkey visual cortex, both in the virtual reality setup and on a conventional desktop computer.

The scientists’ tracings were as accurate in virtual reality as with the desktop software. But they were able to trace the neurons about 1.7 times faster using the virtual reality tool.

The team plans to release the program for commercial virtual reality devices in a few months to a year, Usher says.

Syndication

This article was republished in Scientific American.
