Tech grant takes aim at autism diagnosis, treatment

A $10 million grant from the U.S. National Science Foundation is funding a five-year project to develop new technologies that can help clinicians diagnose and treat autism.


U. Miami & Carnegie Mellon U.

Face time: A new technique allows researchers to measure the emotional synchrony between a mother and infant by tracking the movements of facial muscles.

A simple game of peek-a-boo is dense with information: Facial and vocal expressions of surprise and delight, hand and body movements and the mirroring of emotion between parent and child all provide clues to social development and may signal potential problems early in childhood.

There has been no way to capture the wealth of data from such interactions other than by simple observation. However, one year into a new $10 million Expeditions in Computing grant from the U.S. National Science Foundation, a consortium of computer and behavioral scientists is developing technologies to precisely record and analyze these types of fine-grained behavioral data.

“The idea is to get unobtrusive quantitative data on behavior, communication and social development in kids on and off the spectrum,” says Matthew Goodwin, assistant professor of computer and information science at Northeastern University in Boston and the project’s associate director.

Launched in August 2010, the five-year project includes more than 50 researchers at the Georgia Institute of Technology, Emory University, Carnegie Mellon University, the University of Pittsburgh, the University of Illinois at Urbana-Champaign, the University of Southern California and the Massachusetts Institute of Technology.

Together, the researchers aim to develop behavioral imaging technologies that can help identify new ways to diagnose and treat autism and other developmental disorders.

The project will pair autism experts with experts in computer vision, audio speech analysis and wireless physiology. The researchers plan to combine wearable sensors that capture and quantify physiological measures such as body temperature, heart rate and respiration with specialized cameras and microphones to measure eye gaze, facial and body expressions and vocal expressions.

Algorithms that can identify patterns in vast amounts of data and synchronize the various ‘channels’ of information will produce a nuanced snapshot of an individual’s behavior and responses in specific situations over time.
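The article does not describe how the channels are synchronized, but one basic step is putting streams recorded at different rates onto a common clock. The Python sketch below assumes each channel arrives as sorted timestamps (in seconds) plus readings, and linearly interpolates every channel onto a shared time grid. The channel names, sampling rates and values are hypothetical, and this is not the project's own software.

```python
from bisect import bisect_right


def resample(times, values, grid):
    """Linearly interpolate a (times, values) stream onto the timestamps in grid.

    times must be sorted ascending; grid points outside the recorded range
    are clamped to the first or last sample.
    """
    out = []
    for t in grid:
        i = bisect_right(times, t)
        if i == 0:
            out.append(values[0])
        elif i == len(times):
            out.append(values[-1])
        else:
            t0, t1 = times[i - 1], times[i]
            v0, v1 = values[i - 1], values[i]
            frac = (t - t0) / (t1 - t0)
            out.append(v0 + frac * (v1 - v0))
    return out


def align_channels(channels, start, stop, step):
    """Put every channel on one shared clock from start to stop (inclusive)."""
    n = int(round((stop - start) / step)) + 1
    grid = [start + k * step for k in range(n)]
    aligned = {name: resample(t, v, grid) for name, (t, v) in channels.items()}
    return grid, aligned


# Hypothetical example: heart rate sampled once per second (beats per minute)
# and skin conductance sampled every half second (microsiemens).
channels = {
    "heart_rate": ([0.0, 1.0, 2.0], [90.0, 96.0, 93.0]),
    "skin_conductance": ([0.0, 0.5, 1.0, 1.5, 2.0], [2.0, 2.1, 2.4, 2.3, 2.2]),
}
grid, aligned = align_channels(channels, 0.0, 2.0, 0.5)
# aligned["heart_rate"] -> [90.0, 93.0, 96.0, 94.5, 93.0]
```

Once every channel shares the same timestamps, readings taken at the same moment can be compared side by side, which is the precondition for looking for patterns across channels.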

The project will require fundamental advances in computing, says James Rehg, the project’s lead investigator and professor of interactive computing at the Georgia Institute of Technology. “It’s a tremendous modeling challenge to represent all this complexity in a way that an algorithm can analyze.”

Signal analysis:

The researchers plan to collect these data as children interact with parents, therapists, teachers and peers. The data will not only provide new insights into the behavior of children with autism but may also help standardize aspects of diagnosis and treatment, they say.

“We won’t be able to replace human beings with technology,” says Catherine Lord, director of the Institute for Brain Development at New York-Presbyterian Hospital in New York City, who is providing clinical guidance to the project team. “But we need a way of measuring progress that is more objective [than observation alone].”

Goodwin points out that an expert clinician draws upon years of experience to diagnose a child with autism. Similarly, an expert teacher has a vast knowledge of which techniques work with certain children when trying to teach a new skill or correct a problem behavior. “But it’s difficult for them to convey exactly what they are doing,” he says.

The new technologies aim to provide a moment-by-moment record of these types of interactions that can be used to train parents, clinicians, teachers and aides in the same techniques.

The project does not include a clinical component, however. “It’s basic science,” says Rehg. “Clinical application is a long way off.” But collaborators have already stepped forward, offering to use their own funding to implement some of the technologies.

For example, administrators at the Center for Discovery, a residential educational program for people with disabilities in Harris, New York, approached the researchers soon after the award was announced. “They said, ‘We don’t want to wait five years for results. We have the money to wire the classroom, sign us up,’” recalls Goodwin.

Six months ago, Goodwin and his colleagues began outfitting a classroom for children with autism at the center with eight cameras embedded in the ceiling and three ceiling-mounted microphones.

Each teacher and student is wired with a lapel microphone and an adhesive sensor that records body temperature, heart rate, respiration and skin conductivity. The children also wear wireless accelerometers that capture and quantify their repetitive motor movements. “They are a living lab for us,” says Goodwin.
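As a rough illustration of how accelerometer data might quantify a repetitive movement such as rocking, the sketch below looks for the time shift at which a motion signal best matches a delayed copy of itself (its autocorrelation). It assumes a single motion signal sampled at a fixed, hypothetical rate and uses a synthetic sine wave in place of real recordings. It is a generic technique, not the method used at the Center for Discovery.

```python
import math


def autocorrelation(x, lag):
    """Normalized autocorrelation of x at the given lag, with the mean removed."""
    n = len(x)
    mean = sum(x) / n
    d = [v - mean for v in x]
    denom = sum(v * v for v in d)
    if denom == 0:
        return 0.0  # a constant signal has no structure to correlate
    return sum(d[i] * d[i + lag] for i in range(n - lag)) / denom


def dominant_period(x, rate_hz):
    """Estimate the repetition period (seconds) of x and how strongly it repeats.

    Returns (None, 0.0) for a constant signal, or for one whose autocorrelation
    never turns negative within half the record.
    """
    max_lag = len(x) // 2
    r = [autocorrelation(x, k) for k in range(max_lag + 1)]
    if r[0] == 0.0:
        return None, 0.0
    # Skip the central peak: neighbouring samples always resemble each other,
    # so wait until the autocorrelation first turns negative.
    k = 1
    while k <= max_lag and r[k] > 0:
        k += 1
    if k > max_lag:
        return None, 0.0
    # The largest remaining value marks the most likely repetition lag.
    best = max(range(k, max_lag + 1), key=lambda j: r[j])
    return best / rate_hz, r[best]


# Hypothetical example: 10 s of a synthetic once-per-second "rocking" motion
# sampled at 20 Hz.
rate = 20
signal = [math.sin(2 * math.pi * i / rate) for i in range(10 * rate)]
period, strength = dominant_period(signal, rate)
```

Here the period comes out at one second, and the strength (close to 1 for a perfectly regular movement) gives a rough sense of how rhythmic the motion is.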

Although the children were initially spooked by the hardware, they adjusted quickly, says Teresa Hamilton, associate director of the center. “The kids see themselves on camera and they love it,” she says. “Our parents are thrilled too.”

The researchers plan to analyze the data with input from the center’s staff.

Meanwhile, automated facial analysis is already being used by another group of researchers to analyze facial expressions in infants and children with autism. “Facial expression is critical to regulating social interaction,” says Jeff Cohn, professor of psychology at the University of Pittsburgh.

Teams at the University of Miami, Carnegie Mellon University and other universities are using the technology to observe the synchrony in facial expressions between mothers and infants.
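One common way to express synchrony as a number is to correlate two time series within sliding windows, here hypothetical frame-by-frame smile intensities for a mother and her infant. One series can also be shifted to test whether the infant echoes the mother after a delay. The sketch below is a minimal version of that idea using Pearson correlation. The data, window length and lag are made up, and the measures used by the teams mentioned here may differ.

```python
import math


def pearson(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if either is flat)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return 0.0
    return cov / math.sqrt(va * vb)


def windowed_synchrony(mother, infant, window, lag=0, step=1):
    """Correlate mother[t] with infant[t + lag] in successive windows.

    lag must be >= 0; a positive lag asks whether the infant echoes the
    mother's expression `lag` samples later.
    """
    n = min(len(mother), len(infant) - lag)
    return [
        pearson(mother[s:s + window], infant[s + lag:s + lag + window])
        for s in range(0, n - window + 1, step)
    ]


# Hypothetical smile intensities (0-5 scale), one value per video frame.
mother = [0, 1, 3, 4, 2, 1, 0, 1, 3, 4]
infant = [0, 0, 1, 3, 4, 2, 1, 0, 1, 3]  # copies the mother one frame later
same_time = windowed_synchrony(mother, infant, window=5)
one_frame_later = windowed_synchrony(mother, infant, window=5, lag=1)
```

In this toy example the correlations at the same moment are only moderate, while shifting the infant's series by one frame makes every window correlate perfectly, which is how a lagged analysis can reveal who is following whom.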

Primary perspective:

Another Expeditions project in an earlier stage of development is using mathematical techniques to quantify the characteristic speech patterns of children with autism and analyze how they change over time and in different situations.

Computers can detect tiny variations in pitch and intonation and differences in timing in vocal utterances. This type of quantifiable data can flesh out a clinician’s intuitive sense that a child’s speech is similar to that of other children with autism.
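To make "quantifiable" concrete, the sketch below turns a pitch track into a few summary numbers: pitch range and variability in semitones relative to the speaker's median pitch, the share of voiced frames, and the lengths of silent gaps. It assumes the fundamental frequency (F0) has already been extracted frame by frame by a separate pitch tracker, with None marking unvoiced frames. The function name, frame length and values are hypothetical, and these measures are illustrative rather than the ones the USC group uses.

```python
import math
import statistics


def prosody_summary(f0_hz, frame_s):
    """Summarize a pitch track (Hz per frame, None for unvoiced frames).

    Assumes at least one voiced frame.
    """
    voiced = [f for f in f0_hz if f is not None]
    ref = statistics.median(voiced)
    # Semitones relative to the speaker's median pitch: 12 semitones = one octave.
    semitones = [12 * math.log2(f / ref) for f in voiced]
    # Lengths of unvoiced runs, a crude proxy for pauses between utterances.
    pauses, run = [], 0
    for f in f0_hz:
        if f is None:
            run += 1
        elif run:
            pauses.append(run * frame_s)
            run = 0
    if run:
        pauses.append(run * frame_s)
    return {
        "pitch_range_st": max(semitones) - min(semitones),
        "pitch_sd_st": statistics.pstdev(semitones),
        "voiced_fraction": len(voiced) / len(f0_hz),
        "pauses_s": pauses,
    }


# Hypothetical 8-frame pitch track with 10 ms frames.
track = [200.0, 220.0, 240.0, None, None, 200.0, 400.0, None]
summary = prosody_summary(track, 0.01)
```

Working in semitones rather than hertz lets children with naturally higher or lower voices be compared on the same scale: a jump from 200 Hz to 400 Hz counts as a full octave (12 semitones) regardless of the speaker's baseline.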

The tools being developed are not meant to supplant clinical expertise, says Shrikanth Narayanan, professor of engineering at the University of Southern California in Los Angeles. Instead, they aim to provide more precise, quantifiable information.

“Computers can remember what happened yesterday, or ten days before, and pick up patterns in what is different today,” says Narayanan, who is studying both speech patterns and laughter in children with autism. “The question is how we can employ that as an extra piece of information to support clinicians.”

Another group of researchers is creating wearable devices that send a stream of data to computers, including the precise location and motion patterns of the wearer and surrounding people. A microphone sensitive enough to capture the conversations of passersby provides a soundtrack to the visual and kinesthetic data.

Together, these devices create a three-dimensional, first-person perspective of an individual with autism. They may one day allow people with the disorder more independence by streaming live information about their surroundings to parents or clinicians who can monitor their safety from a distance.

A second small camera pointing at the wearer's eye can track his or her gaze and, together with sensors monitoring movements of the torso, arms and head, predict intention.

“All of these are synchronized so that we can tell if you are at the bus stop, on the bus, or walking, waiting or sitting, and where your attention is,” says Takeo Kanade, professor of computer science and robotics at Carnegie Mellon University in Pittsburgh. The devices could also incorporate a warning system for individuals when they are in danger.

That’s an application that really appeals to Lord, who is more focused on practical applications of technology to support clinicians and people with autism than on advances in computing. “Anything that will give adults with autism more independence, from hygiene and safety reminders to ways to communicate more clearly, would be very helpful,” she says.

Recommended reading

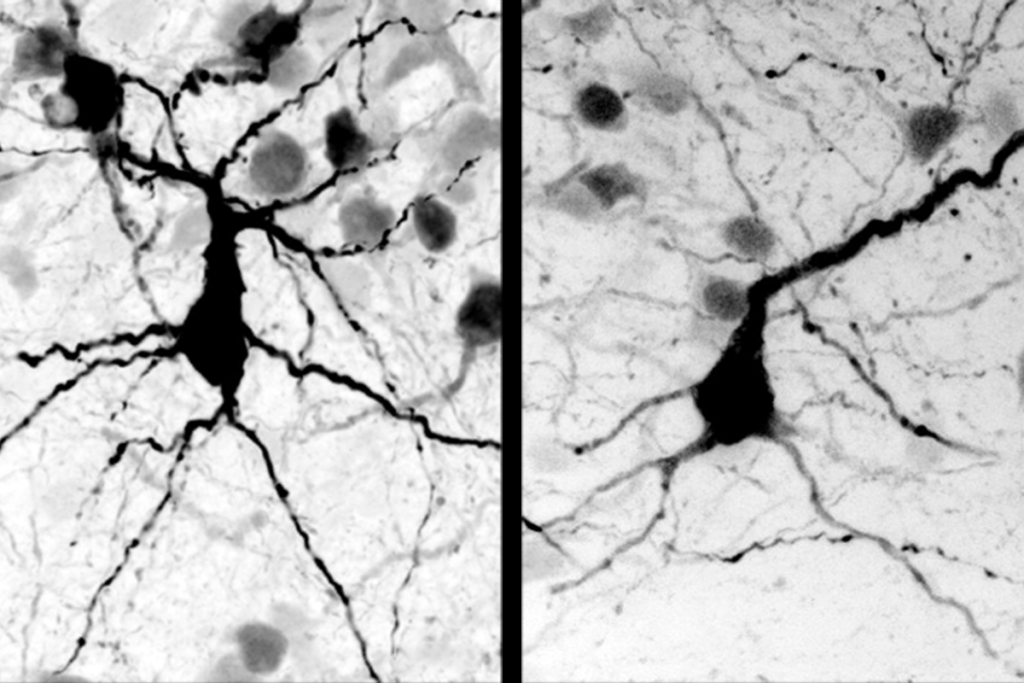

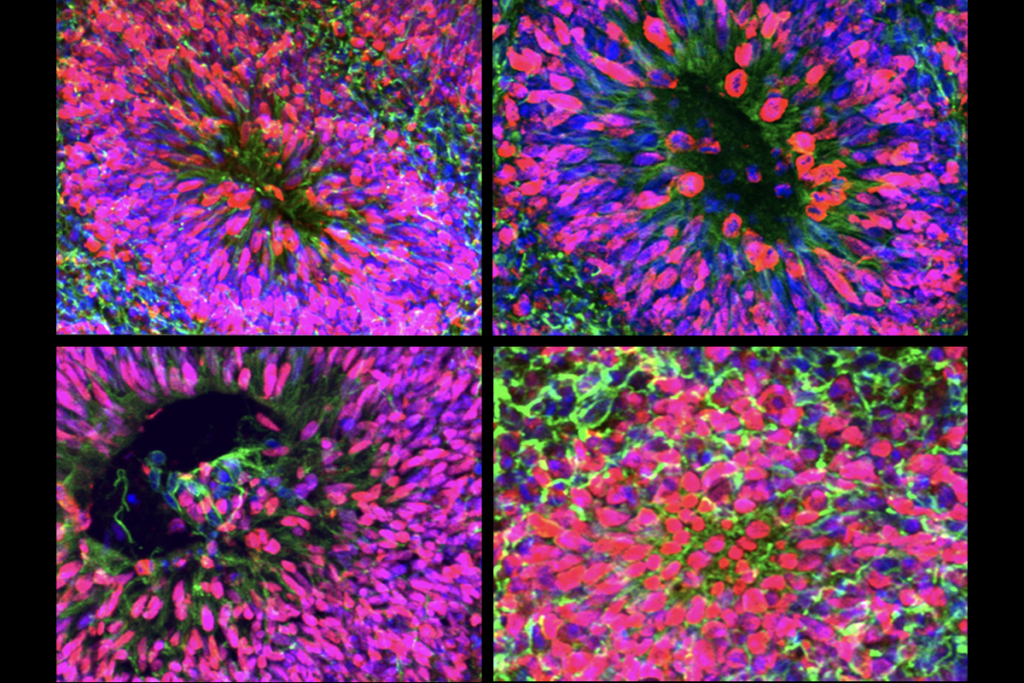

New organoid atlas unveils four neurodevelopmental signatures

Explore more from The Transmitter

Snoozing dragons stir up ancient evidence of sleep’s dual nature

The Transmitter’s most-read neuroscience book excerpts of 2025