‘Syntax’ of mouse behavior may speak volumes about autism

An algorithm that decodes and quantifies mouse body language could reveal the brain circuits underlying certain autism features.

When Robert ‘Bob’ Datta purchased an Xbox Kinect camera for his laboratory six years ago, he had in mind something much cooler than playing video games: He wanted to revolutionize how people study mouse behavior.

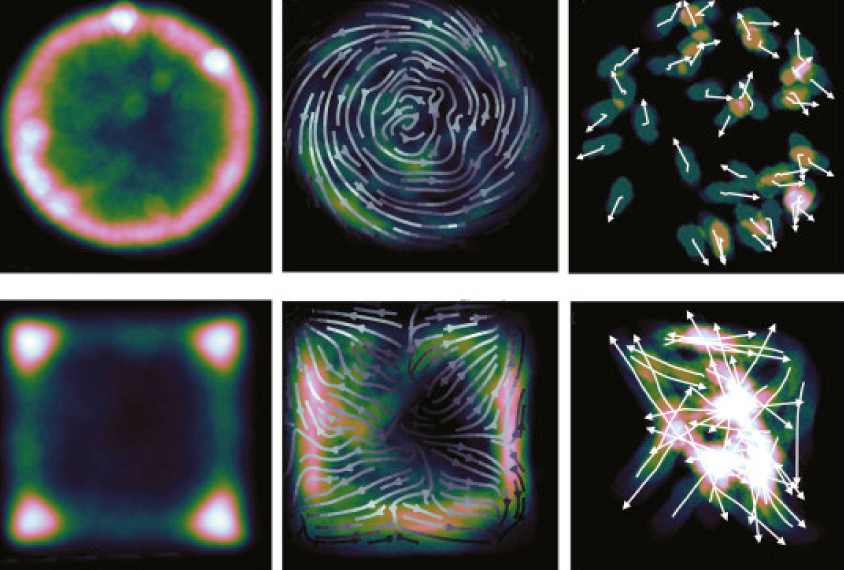

He affixed the $100 camera above a circular enclosure at his Harvard University lab, released a mouse into the enclosure and let it explore the space. The camera is particularly adept at sensing depth, which is what lets a player use his or her body as a video-game controller. In this case, that ability allowed it to accurately gauge where the mouse's body was in space.

Within weeks, he had collected dozens of hours of videos showing mice as they walked, paused, darted or raised their heads.

Datta’s true innovation is what he did with the videos he collected: He developed an algorithm to decipher mouse body language and quantify it.

Most systems for capturing mouse behavior in 3-D are unwieldy, requiring researchers to position several cameras at various angles. Researchers then carefully observe and categorize the videos, often with stopwatches, and become experts in mouse behavior: Some can tell a hyperactive mouse from a sluggish one, or a daring mouse from an anxious one.

But scientists can misinterpret mouse behavior just as they can that of their fellow humans.

New automated approaches, including Datta’s, aim to eliminate this subjectivity and make mouse studies more accurate.

Datta developed what he calls a "syntax" of mouse behavior: Just as syllables make up a word, mouse behaviors such as grooming comprise quantifiable parts [1]. These 'behavioral syllables,' such as a nod of the head or a turn of a foot, combine to form larger movements.

This syntax is resistant to misinterpretation, Datta says. “The key thing we’ve done here is taken the human out of the equation.”

Sorting syllables:

Datta's technique holds promise for understanding autism. The condition is marked by distinct behaviors, so behavioral syllables may differ among the many mouse models of autism. His approach may help researchers map the relationship between a specific mutation and the resulting behavior.

By pairing brain recordings with his algorithm, Datta says, he may be able to link behavioral syllables to specific brain circuits. "If we can treat behavior as if it's a language, then maybe we could gain some insight into how it's structured and how the brain might create it," he says.

The syllables may also give clinical researchers a more precise ruler for measuring a drug’s effects on behavior.

The algorithm relies on artificial intelligence to identify and classify subunits of mouse behavior from hours of videos. It does not identify predefined behaviors; its only instruction is to find movements that are repeated in a predictable way.

The idea is analogous to sorting socks of the same color, Datta says. The computer can match small movements and sort them into groups, telling apart movements that share many features, much like separating a white dress sock from a white athletic sock. In a behavioral test that measures anxiety in mice, for instance, the algorithm found between 40 and 60 behavioral syllables.
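To make the sock-sorting idea concrete, here is a minimal Python sketch of unsupervised clustering. It assumes each video frame has already been reduced to a short list of numbers; the `frames` values below are hypothetical stand-ins for measures such as head height and body speed. It uses plain k-means, a generic technique swapped in for illustration. It is not Datta's algorithm, and unlike the approach described above, this toy version has to be told how many groups to find.

```python
import math

def kmeans(points, k, iters=20):
    """Toy k-means: group feature vectors into k clusters (stand-ins for 'syllables').

    Simplification: the initial centroids are just the first k points.
    """
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assign each frame to its nearest centroid (Euclidean distance).
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Move each centroid to the mean of the frames assigned to it.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels, centroids

# Hypothetical per-frame features; no behavior labels are given to the algorithm.
frames = [(0.1, 0.2), (5.0, 5.1), (0.2, 0.1), (5.2, 4.9), (0.0, 0.0), (4.9, 5.0)]
labels, centroids = kmeans(frames, k=2)
print(labels)  # [0, 1, 0, 1, 0, 1]
```

The algorithm is never told what the groups mean; it only notices that some frames resemble one another more than others.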

It also takes into account when the syllables occur. Datta’s team tested several algorithms before finding one that best predicts the order of the behavioral syllables it defined.
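One simple way to capture the order of syllables is to count which syllable tends to follow which. The Python sketch below is a hypothetical illustration, not any of the models Datta's team tested. It estimates first-order transition probabilities from a sequence of syllable labels and then picks the most likely next syllable. The labels are invented for the example.

```python
from collections import Counter, defaultdict

def transition_probs(syllables):
    """Estimate P(next syllable | current syllable) from one labeled sequence."""
    counts = defaultdict(Counter)
    for current, nxt in zip(syllables, syllables[1:]):
        counts[current][nxt] += 1
    # Normalize each row of counts into probabilities.
    return {
        current: {nxt: n / sum(row.values()) for nxt, n in row.items()}
        for current, row in counts.items()
    }

def most_likely_next(probs, current):
    """Return the most probable follower of `current`, or None if never seen."""
    options = probs.get(current)
    if not options:
        return None
    return max(options, key=options.get)

# Hypothetical syllable sequence, as if output by a clustering step.
seq = ["rear", "pause", "walk", "rear", "pause", "groom", "rear", "pause", "walk"]
probs = transition_probs(seq)
print(most_likely_next(probs, "pause"))  # walk
```

Here "pause" is followed by "walk" two times out of three, so "walk" is the best guess. Real behavior likely depends on more than the immediately preceding syllable, which is one reason to compare several models.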

Mapping circuits:

Because it is automated, the technique also speeds up data analysis, but it comes with some speed bumps of its own.

Computers have limits, says Maria Gulinello, research associate professor at Albert Einstein College of Medicine in New York, who was not involved in the work. "There are a lot of things you can't really determine" with a computer, she says. "Is the animal not moving because it's scared? Is it not moving because it is sick? Is it in pain?"

Other researchers are similarly skeptical that computers can measure behavior more accurately than people can.

“When it comes to the interpretation of the behavioral observations, I think a behavioral scientist has to be involved and make that call,” says Mehrdad Shamloo, research professor of neurosurgery at Stanford University in California.

Datta says his method isn’t at odds with traditional behavioral observation. However, person-led observation is not “a gold standard” but rather a “historical standard” ready for updating, he says.

In fact, his work seems to be part of a larger trend: Another team uses a depth-sensing camera to track the interactions of two caged mice and has created software to code the mice's behaviors [2].

Unlike Datta’s algorithm, however, this one is designed to detect specific behaviors. The team has also developed another algorithm that can cluster behaviors it isn’t told to look for, says lead researcher David Anderson, professor of biology at the California Institute of Technology in Pasadena.

For example, the algorithm found and clustered frames that show two mice fighting, a behavior it is not programmed to identify. The method could help researchers pinpoint behaviors in mouse models of autism that they wouldn’t otherwise know to measure, Anderson says.

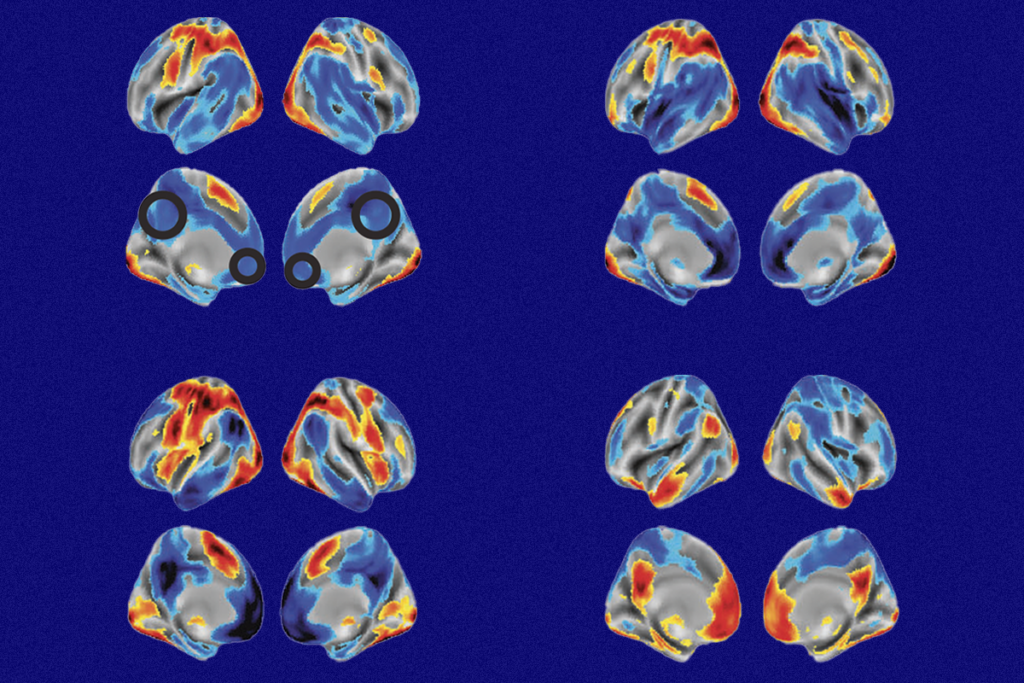

Both teams have the same ultimate goal: to map mouse behaviors to brain circuits. This could be useful for studying mutations tied to autism, Anderson says. “What we ideally want to see is if there is a change in behavior caused by a mutation, where in the brain is that change originating?”

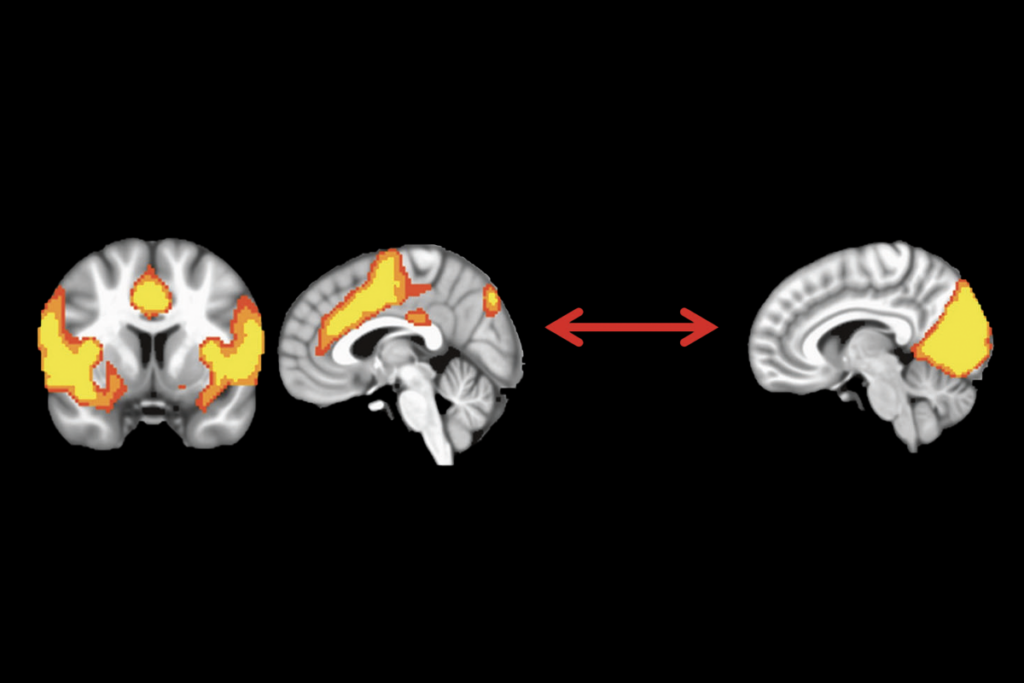

Datta is integrating his behavioral analysis with neural recordings in mice. His team is matching patterns of brain activity, detected with an implanted miniature microscope, to behavioral syllables in real time. Specifically, they are correlating mouse behaviors with activity in the striatum — a brain region involved in motivation and movement. Datta plans to eventually do this work in mouse models of autism.

In the meantime, even the skeptics are curious about the approach’s promise. Shamloo says he would use Datta’s setup if given the chance. “I would definitely give it a try.”

References:
