Studies of autism treatments lack standard yardsticks

Clinical trials of autism treatments rarely use a consistent set of tools to measure efficacy, making it tough to compare the treatments.

Clinical trials of autism treatments rarely use a consistent set of tools to measure efficacy, a new study suggests¹. Instead, researchers generally design questionnaires specific to their study goals, and 69 percent of these tools are used only once.

The lack of consistency could obscure positive results. “Losing even one treatment that could possibly be helpful, just because we don’t use the right instruments, is a big loss,” says study investigator Natascia Brondino, assistant professor at the University of Pavia in Italy.

This variability also makes it difficult to compare treatments, or even the same treatment across studies.

“It doesn’t enable us to aggregate and compare data, and it makes the work slower in terms of understanding the big picture of autism,” says Nalin Payakachat, associate professor of pharmacy practice at the University of Arkansas for Medical Sciences, who was not involved in the study.

Researchers have studied this surfeit of tools before: There are hundreds of them. But the analyses covered short periods of time or only included studies of specific age groups. The new study spans nearly 36 years of trials and provides a comprehensive look at the issue.

Critical demand:

The researchers combed the Web of Knowledge for all English-language clinical trials of treatments for autism traits. Their search extended from 1980 — the year autism first appeared in the Diagnostic and Statistical Manual of Mental Disorders — to 2016. They excluded case reports, individual studies and meta-analyses.

The 406 studies they chose report the effects of drugs, psychotherapy and dietary supplements on core autism traits such as social difficulties and repetitive behaviors. For each study, they looked at the traits measured and whether the measurement tool — usually a questionnaire — was appropriate. They also logged how frequently each tool was used across studies. The results appeared in July in Autism.

Just over half of the tools had been adapted from previously reported methods or standard assessments — and so had been validated in some way, the researchers found. Only three validated tools that measure core traits were used in more than 5 percent of the studies: the Social Responsiveness Scale, the Childhood Autism Rating Scale and the Autism Diagnostic Observation Schedule.

These three tools are designed to screen for or diagnose autism. So they are good at identifying autism traits but may not be sensitive to changes in those traits over time, says So Hyun Kim, assistant professor of psychology in clinical psychiatry at Weill Cornell Medicine in New York, who was not involved in the study.

“They aren’t designed to measure treatment outcomes, so they may not capture subtle changes in symptoms,” Kim says. “There’s a critical demand for valid, objective measures of treatment outcomes that can detect subtle changes over the course of an intervention.”

The study does not offer solutions to this problem, however.

The researchers lumped together studies involving participants of various genders and ages, so their results reveal little about how to refine the instruments. Analyses of tools specific to subgroups defined by age, sex or autism traits could reveal the best instruments and outcome measures for clinical studies, Payakachat says.

Brondino and her colleagues plan to update their database every three years with additional studies.

References:

- Provenzani U. et al. Autism Epub ahead of print (2019)

Recommended reading

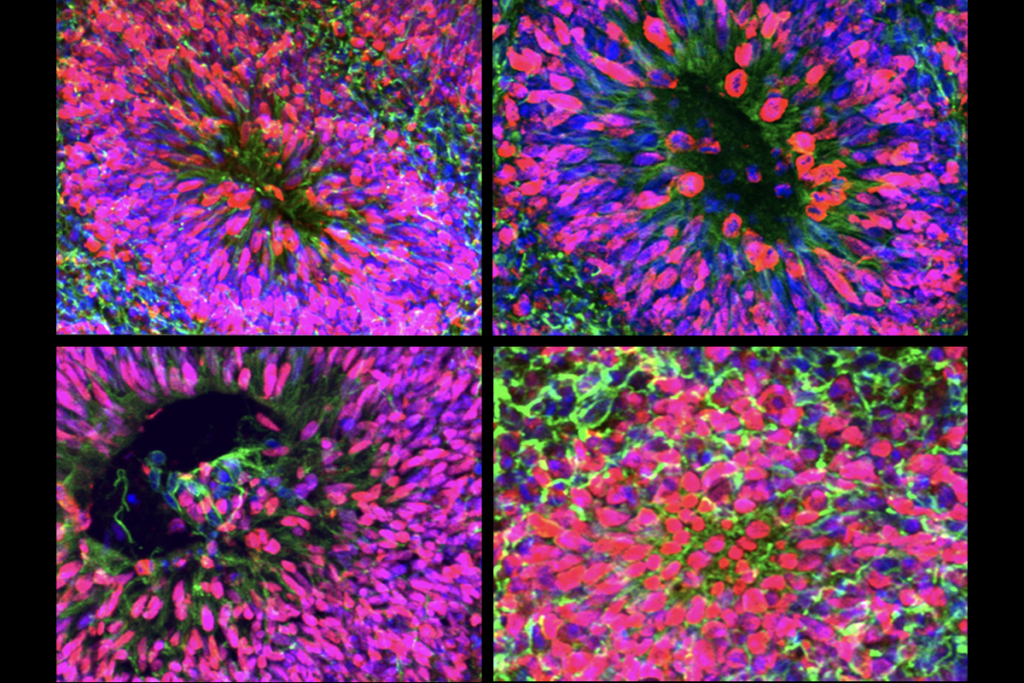

New organoid atlas unveils four neurodevelopmental signatures

Glutamate receptors, mRNA transcripts and SYNGAP1; and more

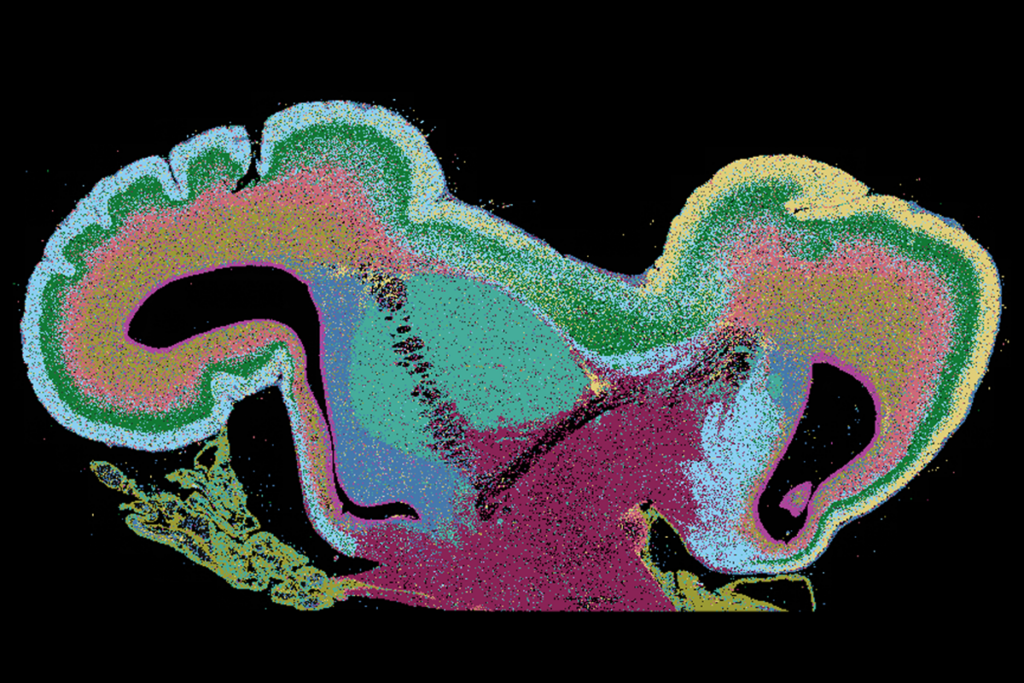

Among brain changes studied in autism, spotlight shifts to subcortex

Explore more from The Transmitter

Psychedelics research in rodents has a behavior problem

Can neuroscientists decode memories solely from a map of synaptic connections?