Statistical trap

Half of the behavioral neuroscience studies in top-tier journals that compare two experimental effects make a crucial statistical error.

Neuroscientists don’t know much about statistics.

That’s the cynical take, anyway, of a shocking paper published 26 August in Nature Neuroscience. The researchers found that roughly half of the behavioral neuroscience studies in top-tier journals that compared two experimental effects made a crucial statistical error.

Here’s a hypothetical example of how the test that these studies left out, known as the difference between differences, might be used in an autism study.

Say you’re testing whether a new glutamate inhibitor has different effects on the social behavior of two mouse models of autism. You give the drug to one set of mutants, and they spend significantly more time with other mice than they did before getting the drug, say 25 percent more. The second set of mutants spends only 10 percent more time with other mice after getting the drug, an increase that isn’t statistically significant.

If you think that these results show that the two kinds of mice respond differently to the drug, you’re wrong.

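Here’s why: a significant effect in one group and a non-significant effect in the other do not, on their own, show that the two effects differ from each other. The 10 percent increase may be real but too noisy to reach significance, and the gap between 25 and 10 percent may itself be small enough to arise by chance. Before you can claim that the two kinds of mice respond differently, you have to run another statistical test that compares the change in one group directly with the change in the other. Only if this difference between differences is significant are you in the clear.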
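To make the logic concrete, here is a minimal Python sketch. It is not the analysis used in the paper or in any particular study. It assumes that each group’s drug effect is summarized as a mean percent change with a known standard error, that the two groups of mice are independent, and that a normal (z) approximation is adequate. The standard errors of 10 percentage points and the function names are hypothetical, chosen only for illustration. In real experiments this comparison is usually run as the interaction term in an ANOVA or regression model.

```python
import math


def two_sided_p(z):
    """Two-sided p-value for a z statistic under a standard normal null."""
    # P(|Z| >= |z|) = erfc(|z| / sqrt(2)) for a standard normal Z.
    return math.erfc(abs(z) / math.sqrt(2.0))


def z_test(effect, se):
    """Test whether a single effect differs from zero."""
    z = effect / se
    return z, two_sided_p(z)


def difference_between_differences(effect_a, se_a, effect_b, se_b):
    """Test whether two effects from independent groups differ from each other."""
    diff = effect_a - effect_b
    # For independent groups, the variances of the two estimates add.
    se_diff = math.sqrt(se_a ** 2 + se_b ** 2)
    z = diff / se_diff
    return diff, z, two_sided_p(z)


# Hypothetical numbers: mean percent change in time spent with other mice
# after the drug, each with an assumed standard error of 10 percentage points.
z_a, p_a = z_test(25.0, 10.0)   # first mutant line
z_b, p_b = z_test(10.0, 10.0)   # second mutant line
diff, z_diff, p_diff = difference_between_differences(25.0, 10.0, 10.0, 10.0)

print(f"Mutant A: z = {z_a:.2f}, p = {p_a:.3f}")
print(f"Mutant B: z = {z_b:.2f}, p = {p_b:.3f}")
print(f"A - B:    diff = {diff:.0f}, z = {z_diff:.2f}, p = {p_diff:.3f}")
```

With these assumed numbers, the first group comes out significant (p ≈ 0.01) and the second does not (p ≈ 0.32), yet the direct test of the difference gives p ≈ 0.29. On this evidence alone, you could not claim that the two mouse models respond differently to the drug.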

The new report analyzed 513 behavioral, systems and cognitive neuroscience papers published in Nature, Nature Neuroscience, Neuron, Science and The Journal of Neuroscience in 2009 and 2010. Of these, 157 should have performed this particular statistical test, but only 78 did so. The other 79, or about half, did not.

The problem extends well beyond behavioral neuroscience, too. The researchers looked at 120 Nature Neuroscience articles related to cellular and molecular neuroscience. None of these used the correct statistical analysis, and at least 25 “explicitly or implicitly compared significance levels” without performing this crucial test.

I asked the researchers whether any of the studies they looked at were autism-related. In the interest of collegiality, they declined to name names, but they did scan their database and found one article about autism.

In this particular case, they said, the significant effect and the non-significant effect went in opposite directions (using my previous example, it would be as if the drug had improved social interactions in one mutant and worsened them in the other), so the error was unlikely to have affected the study’s conclusions.
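A quick worked calculation shows why effects in opposite directions are less worrying. Purely for illustration, assume that the drug raises social time by 25 percent in one mutant and lowers it by 10 percent in the other, that each estimate has a standard error of 10 percentage points, and that the groups are independent. The symbols below (the estimated changes and their standard errors for mutants A and B) are hypothetical notation, not taken from the study. A normal-approximation test of the difference then gives:

$$
z = \frac{\hat{\Delta}_A - \hat{\Delta}_B}{\sqrt{\mathrm{SE}_A^2 + \mathrm{SE}_B^2}} = \frac{25 - (-10)}{\sqrt{10^2 + 10^2}} = \frac{35}{14.14} \approx 2.47 > 1.96
$$

Under these assumptions the difference is significant at the conventional 0.05 level (two-sided p ≈ 0.013). When two effects point in opposite directions, the gap between them is larger than either effect alone, so the formal test is more likely to agree with the informal conclusion.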

Still, with the error cropping up in so many studies, and in some of the best journals, it’s difficult not to be concerned. How much money and effort is being wasted on replicating or building upon differences that were never actually demonstrated?

Luckily, the problem has an easy solution: when you claim that two effects differ, test that difference directly. And check, then double-check, those darn statistics.

Recommended reading

Expediting clinical trials for profound autism: Q&A with Matthew State

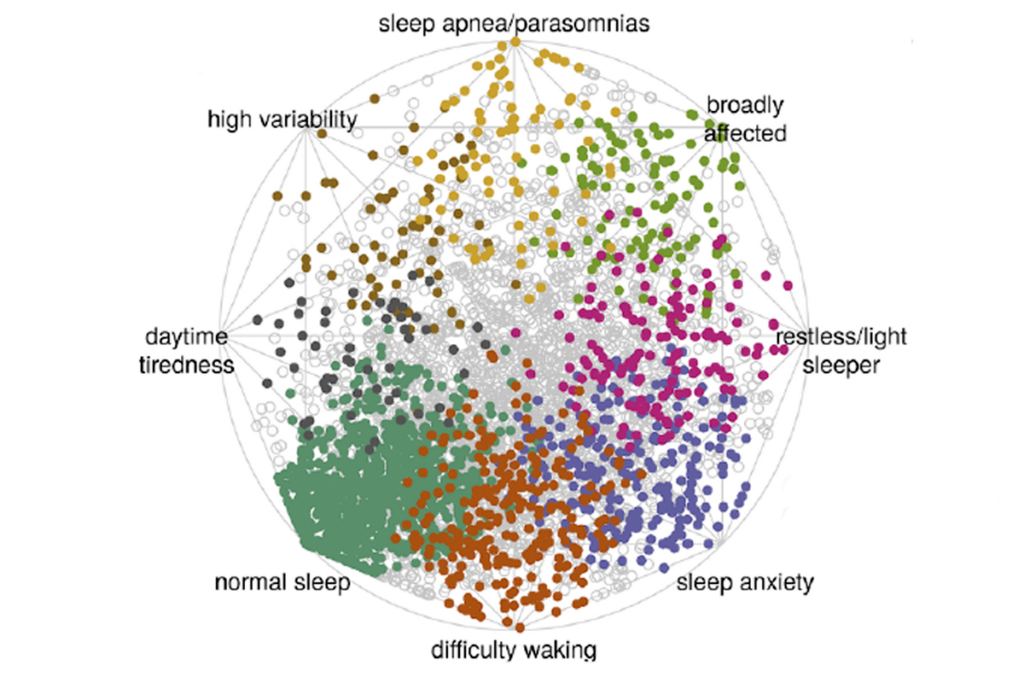

Too much or too little brain synchrony may underlie autism subtypes
