Sound response may explain language problems in autism

Children with autism process sounds a split second slower than typically developing children, according to a new study that measured the magnetic fields emitted from the children’s brains.

Although preliminary, the findings may partly explain the language and communication problems that burden so many with the disorder, the researchers say.

“The findings [show a delay of] only fractions of a second, but those really matter in spoken speech,” says Timothy Roberts, vice chair of radiology research at Children’s Hospital of Philadelphia, who presented the data last week at the annual meeting of the Radiological Society of North America in Chicago. “Our thought here is these delays kind of cascade through later and later processing,” Roberts says.

Many parents and clinicians report a delay in language acquisition or a loss of previously learned language in young children with autism. Reflecting the diversity of the disorder, some people with autism are also extremely sensitive to normal sounds, whereas others are insensitive to extremely loud sounds1.

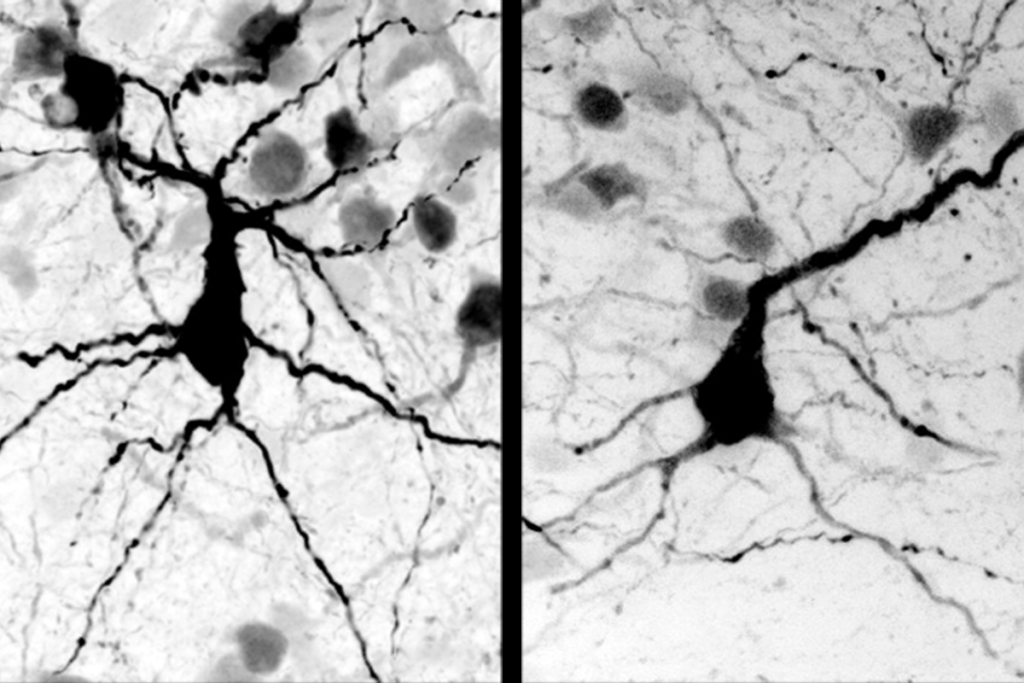

Roberts and his team were the first, about nine years ago, to explore these auditory deficits using magnetoencephalography (MEG), a brain imaging technique used to measure magnetic fields produced by the electrical activity of neurons. MEG is routinely used to pinpoint the misfiring brain areas that cause seizures in individuals with epilepsy.

Using MEG to study autism was “just the convergence of a problem and a solution,” Roberts says.

Many researchers hypothesize that autism stems from a failure of communication between neighboring brain regions.

But the difficulty in proving this theory is that electrical signaling between brain regions occurs within a few hundredths of a second. That is much too fast to be picked up by functional magnetic resonance imaging (fMRI) or positron emission tomography (PET), which resolve changes on the order of seconds or minutes.

Like those methods, MEG can pinpoint the region of activity, but is much more sensitive to changes over short periods of time. “MEG’s real-time capability seemed to be ideally suited to look at autism,” Roberts says.

Short lag:

In 1999, Roberts led MEG experiments on 15 children with autism and 17 healthy controls2,3. These were the first to show that the brain's response to sound in children with autism lags behind that of typically developing children.

In July 2007, a $1.25 million grant from the National Institutes of Health allowed the researchers to broaden these experiments to a much larger number of children.

For the scans, participants sit in a large, comfortable chair, their heads under a bulky MEG helmet that resembles “one of those old-fashioned hair dryers,” Roberts says.

The children watch a soundless movie while tiny earphones play a series of beeps or vowel sounds. Each sound evokes an automatic electrical ‘signature’ in the brain. This small burst of electrical activity generates a magnetic field, which passes through the skull and is immediately sensed by the 275 detectors lining the inside of the helmet.

At last week’s conference, Roberts reported results from 64 children aged 6 to 15, including 30 who have autism. In the first set of experiments, the children heard beeps of different frequencies, and the MEG detectors recorded the brain’s response to each sound.

In children with autism, this response is delayed by about 20 milliseconds compared with the response in typically developing children, Roberts found.

In a second ‘mismatch’ experiment, the children heard three successive speech sounds, such as ‘ah, ah, ah,’ followed by a different speech sound, such as ‘ou’. Compared with the control group, children with autism respond to the novel sound with a delay of about 50 milliseconds.

People normally speak at a rate of about four syllables per second, or 250 milliseconds per syllable. A delay of 50 milliseconds therefore amounts to a fifth of a syllable, which could be “quite catastrophic,” Roberts says.

“If you’re saying ‘elephant,’ they’d be stuck on the ‘el’ when you’re on the ‘ant’. If the brain can’t catch up, can’t cope with the constant stream of information, then that might be why spoken speech presents such difficulties,” he says.

Diagnostic tool:

Roberts is still recruiting participants, hoping to enroll a total of 300 before the grant expires in 2012. He has not yet submitted the results to a journal for publication.

In future experiments, he plans to test young toddlers, in hopes of using MEG signatures as a diagnostic tool for autism. “We’d like to predict if there’s some sort of abnormal brain function even before they get a diagnosis [of autism],” he says.

Other experts agree that auditory processing should be studied in much younger children. Studies in the past year have shown that the perception of native speech sounds begins in babies as young as 7 or 8 months of age4.

“Those studies have shown now that language process is developing very, very early, and that auditory processing is much linked to language learning,” says Eira Jansson-Verkasalo, a professor at the University of Oulu, in Finland. Jansson-Verkasalo has used electroencephalography (EEG), a cousin of MEG, to identify millisecond delays in sound processing in people with Asperger’s syndrome5,6.

“It will be very important to study these children as infants, because when we find it early enough, we can teach or rehabilitate the child so that these difficulties don’t get worse,” she says. MEG is a great technique for studying babies, she adds, because it’s non-invasive and doesn’t require them to perform specific cognitive tasks.

The biggest downside to using MEG as a diagnostic tool is cost. The machines cost about as much as an fMRI machine, roughly $3 million. There are only about 100 machines worldwide; of the 30 in the U.S., only 2 are in pediatric hospitals.

“It’s expensive, but when you’re thinking about rehabilitation, that’s expensive as well,” Jansson-Verkasalo says. “I think if we find these children early enough, then this rehab will be cheaper.”

References:

- O’Neill M. and Jones R.S. J. Autism Dev. Disord. 27, 283-293 (1997)
- Gage N.M., Siegel B. and Roberts T.P. Brain Res. Dev. Brain Res. 144, 201-209 (2003)
- Kuhl P.K. et al. Philos. Trans. R. Soc. Lond. B Biol. Sci. 363, 979-1000 (2008)
- Jansson-Verkasalo E. et al. Neurosci. Lett. 338, 197-200 (2003)
- Jansson-Verkasalo E. et al. Eur. J. Neurosci. 22, 986-990 (2005)
