Simple steps aim to solve science’s ‘reproducibility problem’

Leaders from the National Institutes of Health and Nature Publishing Group say an array of simple reforms can boost the reliability of research findings. Their suggestions spurred a lively audience discussion yesterday at the 2014 Society for Neuroscience annual meeting in Washington, D.C.

With more than 30,000 neuroscientists buzzing about the reams of new data presented at the 2014 Society for Neuroscience annual meeting, leaders from the National Institutes of Health and a top scientific journal delivered a sobering message: Most of those findings will never be replicated.

At a lively symposium yesterday, they described an array of simple reforms — such as reporting protocols more thoroughly, training students more carefully in statistics and critically assessing mouse models — to try to boost the reliability of medical research.

Scientists in the audience were eager to talk about change but also seemed skeptical that these reforms would do much good given the entrenched cultural problems in science.

“It’s easy to underestimate how difficult it will be to change research practices, because the inertia is very strong and a lot of poor research practices are accepted as normal,” one attendee told the panelists. “It’s going to take something more fundamental than checklists.”

The lack of reproducibility in medical research has received much attention in the past few years. In 2011, for example, scientists from Bayer Healthcare published a review of the company’s internal studies, attempting to replicate 67 published reports that had identified potential drug targets. Less than 25 percent of the original findings held up. The following year, Amgen did a similar study of 53 ‘landmark’ cancer papers and found that just 6 could be confirmed.

Mouse models of disorders are also notoriously difficult to standardize. Earlier this year, scientists at the ALS Therapy Development Institute in Cambridge, Massachusetts, published a devastating description of their attempts to replicate the reported beneficial effects of nine treatments in a certain mouse model of the disorder. None of the treatments had any effect on the animals’ survival, the researchers found.

Checklist reform:

Closer to home for autism research, Huda Zoghbi of Baylor College of Medicine in Houston relayed her experience trying to replicate behavioral findings reported in a mouse model of intellectual disability. She declined to name the model but said that it has been “beautifully published.”

Zoghbi’s team tested the animals for a learning and memory problem that has been reported — “and has been cured, no less” — in several top publications. But in her team’s hands, the animals behaved normally. The researchers looked at males and at females, at 3 months and 6 months, and in mice with two different genetic backgrounds. They even tried a different kind of memory test.

“I finally said, ‘We have to quit,’” Zoghbi said. The experiments cost around $50,000, which “could have been better spent on something that would advance science.”

The bad news has traveled far outside the scientific community. Several panelists mentioned a story titled “How Science Goes Wrong” that made the cover of the Economist magazine last year.

All of this media attention “is frankly casting somewhat of a cloud over our biomedical research enterprise,” said Francis Collins, director of the National Institutes of Health (NIH).

In June, the NIH held a workshop with editors from dozens of scientific journals. The group agreed on a set of principles for journals to improve rigor in biomedical research. The principles include eliminating length limits for methods sections and using a checklist to ensure that papers transparently report key details — such as whether a clinical trial was blinded and what kind of statistical test the researchers used. These principles, published 30 October on the NIH’s website, have been endorsed by more than 120 journals, Collins said.

Nature Publishing Group implemented one such checklist for all of its life science journals in May 2013, said panelist Véronique Kiermer, director of author and reviewer services at Nature Publishing Group.

Kiermer presented preliminary data collected since instituting the new policies. One part of the checklist, for example, asks scientists to report the source of their cell lines — whether from a cell bank or as a gift from another researcher — as well as whether they authenticated the type of cells and tested for contamination.

In an analysis of 60 papers, Kiermer’s team found that most researchers reported the source of their cell lines but only about 10 percent had authenticated them. “A checklist is not necessarily going to change this behavior immediately,” Kiermer noted, but she added that having the data in hand reveals the scope of the problem.

While the panelists offered concrete recommendations for improving reliability, they largely avoided the elephant in the room: money.

For example, audience members were eager to talk about the enormous pressure to publish in high-profile journals, which typically reject replications and papers with negative results. That pressure comes in large part because publications are key to getting a grant funded, and many researchers rely on grants for their salaries.

“There’s no solution here that brings you to where you want to be in the short term,” Collins said. The eventual hope is that universities can change how scientists are paid, but it’s unclear how long that will take, he said. “Is that 10 years, 5 years, 20 years? That’s where the argument is currently.”


Recommended reading

Expediting clinical trials for profound autism: Q&A with Matthew State

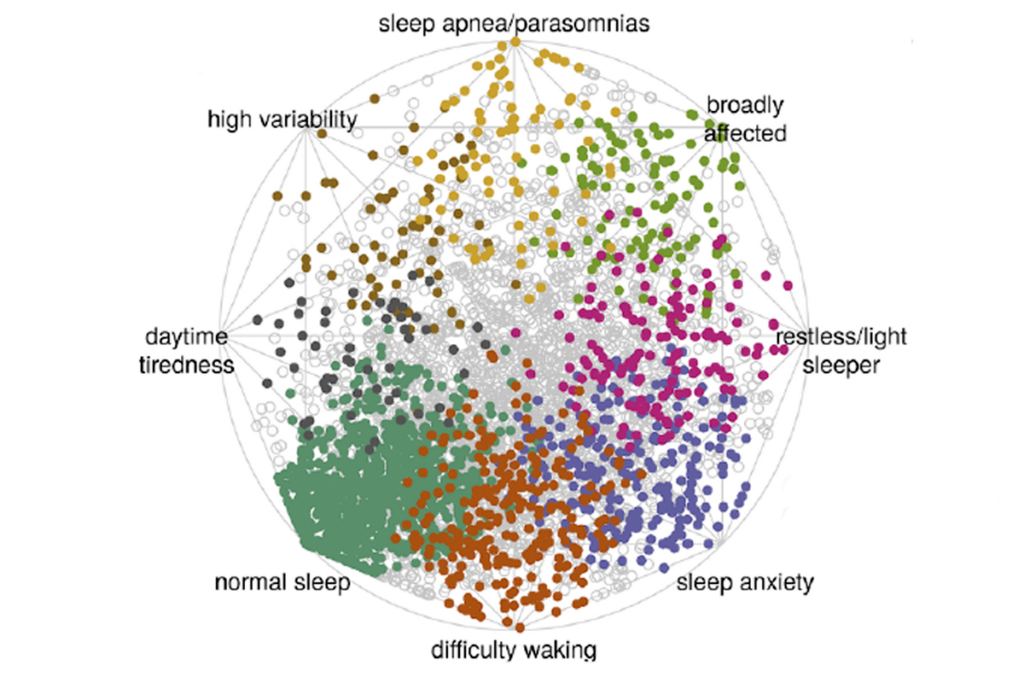

Too much or too little brain synchrony may underlie autism subtypes

Explore more from The Transmitter

This paper changed my life: Shane Liddelow on two papers that upended astrocyte research

Dean Buonomano explores the concept of time in neuroscience and physics