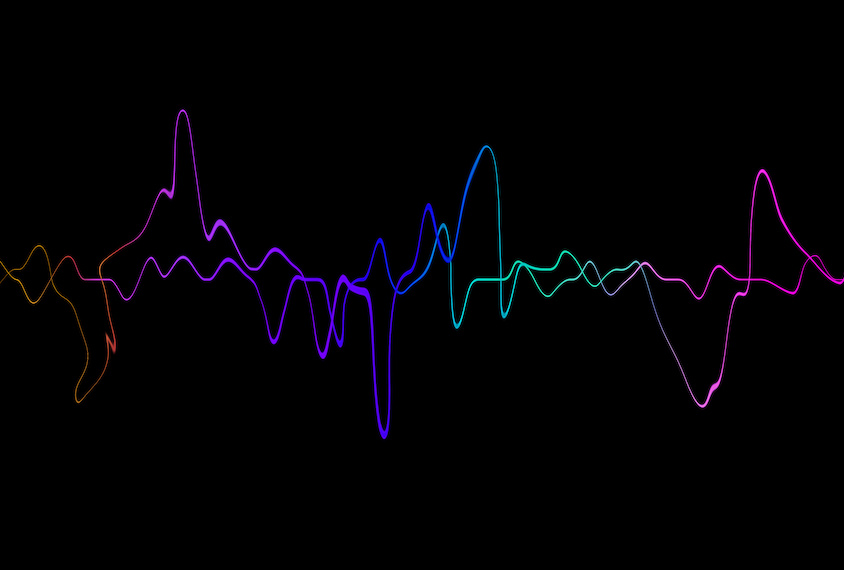

Among autistic people who are minimally verbal, those with more spoken words use sounds that typically occur later in development, such as ‘l,’ according to an unpublished analysis. The finding supports the idea that sound diversity expands with word development.

The work draws on data from the first repository of such sounds, which includes 7,077 vocalizations from eight minimally verbal autistic people, collected longitudinally over 4 to 64 weeks.

Researchers presented the findings at the 2022 International Society for Autism Research (INSAR) annual meeting last week. (Links to abstracts may work only for registered conference attendees.)

Early-developing consonants such as ‘m’ and ‘h’ are well represented in samples from the new repository, whereas other common early sounds, such as ‘b,’ ‘p’ and ‘n,’ are not.

“We have many more samples to look at, but there could be fundamental developmental changes that are occurring in this group that are different from typical development,” says study investigator Kristina Johnson, a research affiliate in the Affective Computing research group at the Massachusetts Institute of Technology (MIT) in Cambridge.

Vocalizations and vowels associated with expressions of frustration are consistently longer than those associated with a request, suggesting there are quantifiable differences between vocalization types. Request vocalizations, such as asking for a tablet or food, also appear to show greater linguistic structure.

“[Minimally verbal] individuals communicate through many different means, including gestures, sign language, augmentative communication, picture cards and many other methods,” Johnson says. “However, non-verbal vocalizations like ‘mmm,’ ‘uh,’ or ‘ah! ah! ah!’ are one of the most organic and universal communication methods.”

People with few or no spoken words produce a range of phonemes, or units of sound, that may serve as developmental markers or intervention targets, the researchers say. Although there are multiple definitions of minimally verbal, Johnson’s lab uses this term to describe people who speak fewer than 20 words.

“Our goal is to develop these consonant repertoires eventually for these individuals,” Johnson says. “And I think language is such an incredible metric for this population because typically developing people’s developmental language is so well studied.”

In 2020, Johnson and Jaya Narain, who was a graduate research assistant at MIT at the time, created Commalla, a system to collect, label, classify and translate non-speech vocalizations from minimally verbal people. The system captures vocalizations at home through a lapel-worn microphone and prompts caregivers to categorize them in real time as “delighted,” “dysregulated,” “frustrated,” “social,” “request” or “self-talk,” as well as custom labels.

“This is really exciting because the speech used in these analyses were not prompted or based on imitation like traditional motor assessments are, but rather collected from natural communication,” says study investigator Amanda O’Brien, a graduate student at Harvard University.

“One of the largest challenges in understanding potential motor-based differences in individuals who are limited in their expressive speech is that historically the only reliable way to quantify these differences was through these various repetition or imitation-based assessments, which can be challenging for this population to complete,” O’Brien says.

A separate pilot case study — presented at INSAR by Thomas Quatieri of MIT — used the Commalla dataset to analyze expressions of dysregulation, request and delight from a 19-year-old minimally verbal autistic man.

The team ultimately aims to train machine-learning models to recognize vocalizations automatically — which Johnson demonstrated using an audio snippet from her son, who is minimally verbal and autistic.

“Essentially what happens is that during model training, the model is looking for patterns in the extracted features that are consistent with the given labels,” Narain says. “And then we evaluate the model by testing how well it can classify data that it didn’t see during that training stage.”
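The train-then-test procedure Narain describes can be illustrated with a minimal sketch. It does not reproduce the Commalla models: the two features (duration and a pitch value), the synthetic numbers and the nearest-centroid classifier are all assumptions chosen only to show a model fitted on labeled examples and then scored on examples it has not seen.

```python
import random

def make_synthetic_samples(n_per_label, seed=0):
    """Generate made-up (features, label) pairs; not real vocalization data."""
    rng = random.Random(seed)
    # Hypothetical feature means: (duration in seconds, pitch in kHz).
    label_means = {"frustrated": (1.6, 0.32), "request": (0.8, 0.26)}
    samples = []
    for label, (mean_duration, mean_pitch) in label_means.items():
        for _ in range(n_per_label):
            features = (rng.gauss(mean_duration, 0.2), rng.gauss(mean_pitch, 0.02))
            samples.append((features, label))
    rng.shuffle(samples)
    return samples

def train_centroids(train):
    """'Training': average the feature vectors seen for each label."""
    sums, counts = {}, {}
    for features, label in train:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in acc) for label, acc in sums.items()}

def predict(centroids, features):
    """Assign the label whose centroid is closest in squared Euclidean distance."""
    def sq_dist(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))

def accuracy(centroids, test):
    """Fraction of held-out samples whose predicted label matches the given label."""
    correct = sum(1 for features, label in test if predict(centroids, features) == label)
    return correct / len(test)

samples = make_synthetic_samples(100)
split = int(0.8 * len(samples))               # 80% for training, 20% held out
train, test = samples[:split], samples[split:]
centroids = train_centroids(train)            # the model only ever sees `train`
held_out_accuracy = accuracy(centroids, test)
print(f"Accuracy on unseen samples: {held_out_accuracy:.2f}")
```

The step that matters is the split: the score is computed only on the 20 percent of samples kept out of training, which is what indicates whether the learned patterns generalize rather than simply memorize the labeled examples.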

With more data, the researchers say they want to see if minimally verbal people share developmental commonalities.

“I think we could begin to find commonalities in as little as 100 individuals,” Johnson says.
