Reckless report exaggerates flaws in brain scan software

A bug in brain imaging software casts doubt on the results of some autism studies, but it’s way too soon to write off the powerful imaging technique.

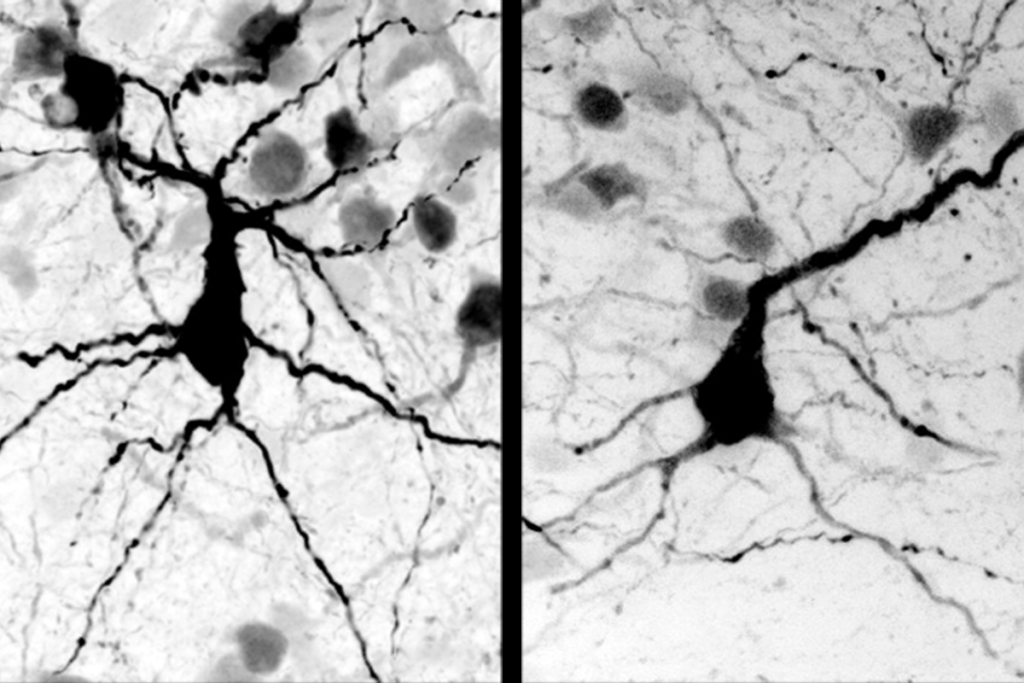

In June, a group of researchers broadcast what they claimed was a dangerous flaw in popular programs for analyzing brain images. A bug in the programs, the researchers warned, can highlight hotspots of brain activity that are not actually present. It may even have infected some autism studies.

But the report, published in the Proceedings of the National Academy of Sciences, is itself flawed. It grossly overstates the impact of the software snafu on studies involving functional magnetic resonance imaging (fMRI).


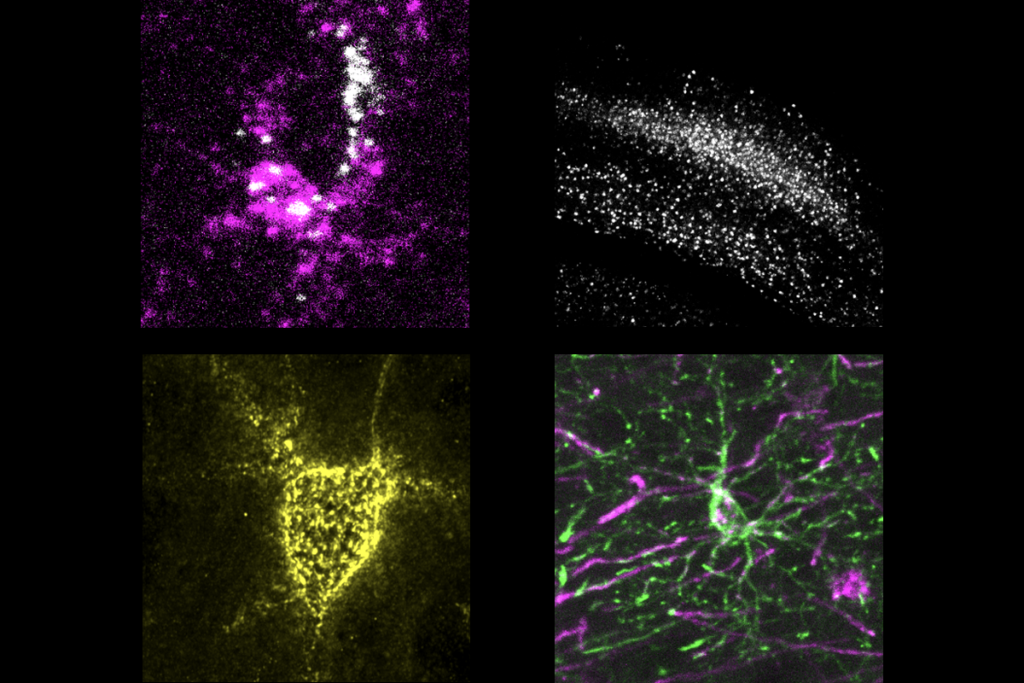

fMRI tracks changes in blood oxygen levels in the brain as a proxy for neural activity. It is our most powerful tool for understanding how the brain works and how it may differ in conditions such as autism.

To make sense of brain imaging data, researchers rely on computer programs that spot patterns in neural activity. One such program, called Analysis of Functional NeuroImages (AFNI), had a bug that flagged clusters of brain activity that might not actually be there.

In their report, the researchers say this bug and other flaws in fMRI software and analysis call the results of 40,000 research papers into question. They claim that as many as 70 percent of positive findings generated by fMRI may be false.

Validity and reproducibility of results are a growing concern in our field, and reports that draw attention to potential pitfalls are a crucial part of the scientific process. But in this case, the criticisms are greatly exaggerated.

Suspect scans:

For one thing, many fMRI studies do not use the clustering methods or other statistical models the article deems problematic.

For another, one of the bugs detailed in the report has been resolved. This bug increased the rate of ‘false positives’ in brain imaging data by incorrectly identifying significant clusters of brain activity. Clusters refer to activity in a set of neighboring ‘voxels’, tiny sections of three-dimensional space. The bug produced these spurious clusters because it failed to apply the full correction needed when examining an entire brain, which is made up of many thousands of voxels.
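Why does examining thousands of voxels call for a correction at all? The toy simulation below is a minimal sketch of that reasoning, not how AFNI or any other package actually works. It assumes pure Gaussian noise with a known spread, runs an independent two-group test at each of 10,000 hypothetical voxels where nothing truly differs, and counts how many cross the usual p < 0.05 threshold with and without a Bonferroni correction (dividing the threshold by the number of tests). Real fMRI software typically uses cluster-extent thresholds rather than this simple voxel-wise correction; the function name and parameters here are illustrative only.

```python
import random
from statistics import NormalDist


def count_false_positives(n_voxels=10_000, n_per_group=10, alpha=0.05, seed=0):
    """Simulate a whole-brain group comparison in which no voxel truly differs.

    Each hypothetical voxel gets two groups of pure Gaussian noise (sigma = 1,
    assumed known), so every 'significant' voxel is by construction a false
    positive. Returns (uncorrected_hits, bonferroni_hits).
    """
    rng = random.Random(seed)
    std_normal = NormalDist()
    # Standard error of a difference between two group means when sigma = 1.
    se = (2 / n_per_group) ** 0.5
    # Bonferroni: divide the threshold by the number of tests performed.
    bonferroni_alpha = alpha / n_voxels
    uncorrected = corrected = 0
    for _ in range(n_voxels):
        group_a = [rng.gauss(0, 1) for _ in range(n_per_group)]
        group_b = [rng.gauss(0, 1) for _ in range(n_per_group)]
        z = (sum(group_a) / n_per_group - sum(group_b) / n_per_group) / se
        p = 2 * (1 - std_normal.cdf(abs(z)))  # two-sided z-test
        if p < alpha:
            uncorrected += 1
        if p < bonferroni_alpha:
            corrected += 1
    return uncorrected, corrected


if __name__ == "__main__":
    raw, fixed = count_false_positives()
    print(f"uncorrected: {raw} 'active' voxels; Bonferroni-corrected: {fixed}")
```

Without correction, roughly 5 percent of the voxels (on the order of 500) should light up by chance alone; with the correction, typically none or almost none do. Skipping or mis-applying that whole-brain correction is what turns noise into apparent hotspots.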

The makers of AFNI fixed this glitch in 2015. What’s more, to the best of my knowledge, no autism studies have employed AFNI.

The authors of the report say the media has overblown their conclusions, but they are the ones who stated that 40,000 studies were at stake. This statement alone may make it harder to publish studies that use fMRI.

The reckless claim has already generated a lot of mistrust of our field. One of my students and several of my colleagues have reported receiving ill-informed comments from reviewers and journal editors such as “Doesn’t one have to be particularly careful with fMRI given that it usually provides false results?”

Modern methods:

A second, subtler issue may affect some autism studies. It involves the so-called parametric approach, which is a way to mathematically control for some of the problems associated with whole-brain analysis.

This approach sometimes mistakenly flags group differences in activity clusters because real-world data are noisy and often don’t meet the mathematical assumptions the parametric approach needs to work properly. If researchers don’t check for this problem, some fMRI papers on autism may be less accurate than they claim to be.
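The article does not prescribe a particular alternative, but one familiar way to lean less on distributional assumptions is a nonparametric permutation test, introduced here purely for illustration. Instead of assuming the data follow a known distribution, it builds the ‘chance’ distribution empirically by repeatedly shuffling which participants belong to which group. The sketch below applies this to a single hypothetical summary value per participant; whole-brain tools such as FSL's randomise apply the same idea at much larger scale. Note that a permutation test still assumes the group labels are exchangeable when there is no true effect.

```python
import random


def permutation_test(group_a, group_b, n_permutations=9999, seed=0):
    """Two-sided permutation test on the difference in group means.

    Rather than comparing the observed difference to a theoretical (parametric)
    distribution, compare it to differences obtained after randomly reshuffling
    the group labels many times.
    """
    rng = random.Random(seed)
    n_a = len(group_a)
    observed = abs(sum(group_a) / n_a - sum(group_b) / len(group_b))
    pooled = list(group_a) + list(group_b)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # randomly reassign participants to the two groups
        diff = abs(sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / (len(pooled) - n_a))
        if diff >= observed:
            extreme += 1
    # Adding 1 counts the observed labeling itself, so p is never exactly zero.
    return (extreme + 1) / (n_permutations + 1)
```

For two clearly separated groups the returned p-value is very small, while for two identical groups it is 1.0, because every shuffled difference is at least as large as the observed difference of zero.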

The findings serve as an important reminder that we must always check our assumptions when analyzing brain scans, no matter which method we use. No piece of software can replace an expert scientist looking carefully at the data. In addition to scrutinizing the analyses, experts must also check the quality of the data, for instance to make sure they are free of motion artifacts.

We should also be flexible about what methods we use, making sure to pick those best suited to the data. In the late 1990s, when fMRI was first being applied to questions in cognitive neuroscience, a few groups rapidly dominated the statistical scene. They argued for a standard mathematical approach that all scientists should use.

My colleagues and I courted controversy as one of the first groups to use an alternative method. In a paper published in 2003, we measured the signal change from hand-drawn regions of interest, following anatomical guidelines and testing hypotheses about the distribution of activity. Our nonstandard approach attracted fierce criticism during peer review, but challenging the status quo is necessary to make sure analyses keep improving.
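The core arithmetic of a region-of-interest analysis is simple: average the signal over the voxels inside the region, then express the task-versus-baseline difference as a percentage of baseline. The sketch below is a hypothetical illustration of that calculation only, not the pipeline from the 2003 paper. The data layout (a dict of voxel time series, a set of voxel coordinates standing in for the hand-drawn mask, lists of task and rest time points) is assumed for the example, and it skips real preprocessing such as motion correction and modeling the delayed blood-flow response.

```python
from statistics import mean


def roi_percent_signal_change(voxel_series, roi_mask, task_volumes, rest_volumes):
    """Mean percent signal change across the voxels inside a region of interest.

    voxel_series: dict mapping a voxel coordinate -> list of intensities over time
    roi_mask: set of voxel coordinates inside the (hypothetical) hand-drawn region
    task_volumes / rest_volumes: time indices belonging to task and baseline blocks
    """
    changes = []
    for voxel in roi_mask:
        series = voxel_series[voxel]
        task = mean(series[t] for t in task_volumes)
        rest = mean(series[t] for t in rest_volumes)
        # Percent change relative to that voxel's own baseline.
        changes.append(100 * (task - rest) / rest)
    return mean(changes)


if __name__ == "__main__":
    data = {
        (0, 0, 0): [100, 100, 102, 102],  # 2 percent increase during the task
        (0, 0, 1): [200, 200, 202, 202],  # 1 percent increase during the task
        (5, 5, 5): [100, 100, 150, 150],  # outside the region, so ignored
    }
    print(roi_percent_signal_change(data, {(0, 0, 0), (0, 0, 1)}, [2, 3], [0, 1]))
```

Because only voxels inside the anatomically defined region enter the average, the analysis tests one hypothesis per region rather than one per voxel, which sidesteps much of the whole-brain multiple-comparisons burden.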

Our approach held up when other researchers applied it, which bolstered its standing in the field. The authors of the new report emphasize the importance of sharing data and repeating experiments in different labs, and I wholeheartedly agree.

Challenging assumptions:

In the field of autism, the Autism Brain Imaging Data Exchange promotes sharing by giving researchers access to thousands of datasets from various fMRI studies. The Stanford Center for Reproducible Neuroscience, which helps researchers share their methods and data, is another spectacular effort to improve research quality.

Brain imaging in autism is particularly challenging. Many study participants are young children, and many have sensory sensitivities that the noisy, cramped fMRI scanner can aggravate. Some children also have conditions such as intellectual disability that can make participating in brain imaging studies even more difficult.

As a result, fMRI studies of autism tend to be relatively small. Because autism is heterogeneous, results from small studies can be hard to reproduce. For that reason, I would like to see funding for a collaboration to troubleshoot accuracy issues in fMRI data related to neurodevelopmental conditions.

Scientists from multiple labs should systematically examine the assumptions in fMRI and other neuroimaging techniques in a common, large sample across multiple sites. By doing so, we can check the analytic methods of individual studies and invent new methods.

We also could develop a consensus on the merits of using different imaging and analytic techniques to find biomarkers for neurodevelopmental conditions. We need to work toward a common but flexible approach to making sense of neuroimaging data.

Ultimately, fMRI is still our most powerful tool for studying the brain and understanding autism. It isn’t perfect, but no tool is. We scientists have to stick to our data and do our best to not overstate the results of a single study — no matter what it is.

Kevin Pelphrey is Carbonell Family Professor and director of the Autism and Neurodevelopmental Disorders Institute in Washington, D.C.
