Most neuroimaging studies have too few participants to reliably link complex behaviors to variations in brain structure or function, according to a study published today in Nature. The results point to the importance of large, multisite collaborations and data-sharing to ensure that imaging studies have enough statistical power to detect real associations, the researchers say.

The median sample size for neuroimaging studies hovers at about 23 participants, the team reported, based on a survey of open-source imaging data. Studies of this size can occasionally link brain scans to behavior by chance, but such findings vary from one dataset to the next. Reproducible results require thousands of participants, the researchers found.

The analysis may explain why the field of neuroimaging has not progressed in understanding these types of correlations as quickly as some had hoped, the team says.

“We in the neuroimaging literature have labored for decades under the misguided assumption that we can actually make progress by collecting small samples,” says Russell Poldrack, professor of psychology at Stanford University in California, who was not involved in the study. As the new work highlights, he adds, “we’re using very powerful statistical tools that have the potential to make it really easy for us to fool ourselves.”

For the autism field, in which collecting brain scans from thousands of people can be particularly challenging, it may be necessary to rethink how some experiments are conducted, says study co-senior investigator Damien Fair, director of the Masonic Institute for the Developing Brain at the University of Minnesota in Minneapolis.

“The types of questions you can answer with very large studies differ quite a bit from the types of questions you can answer with very small studies,” he says.

F

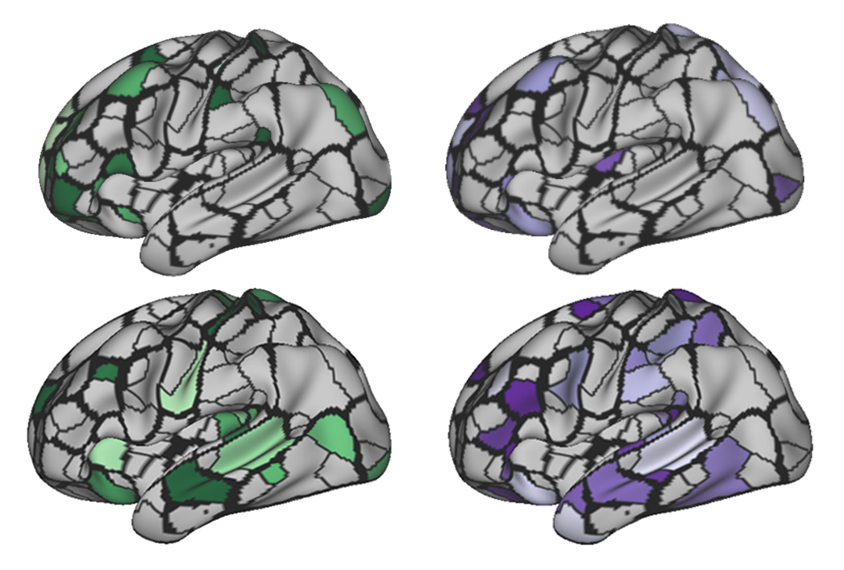

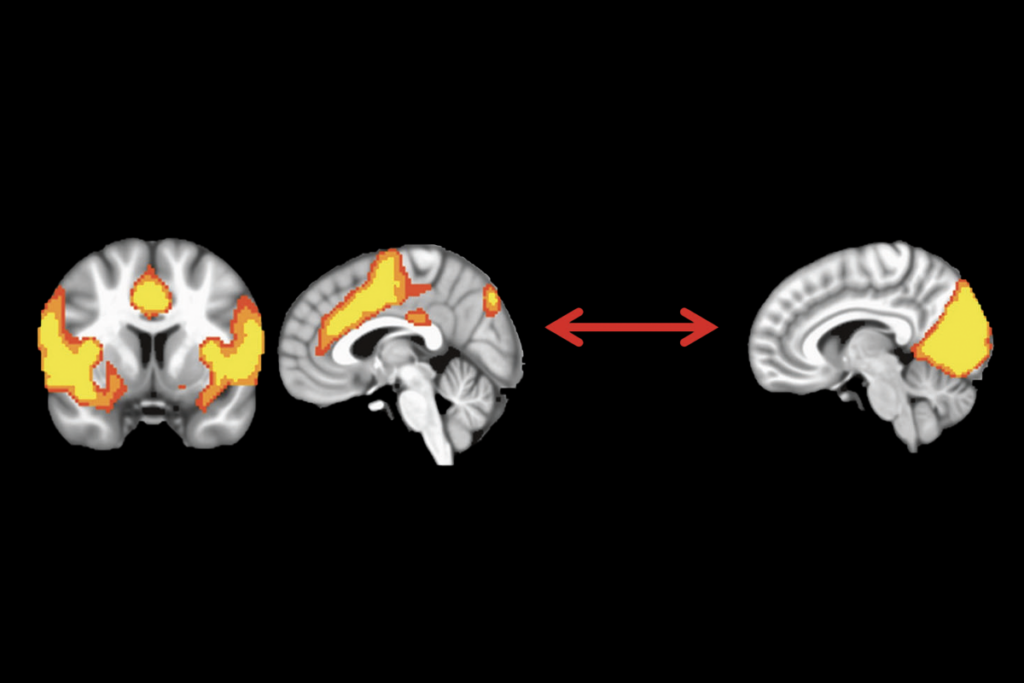

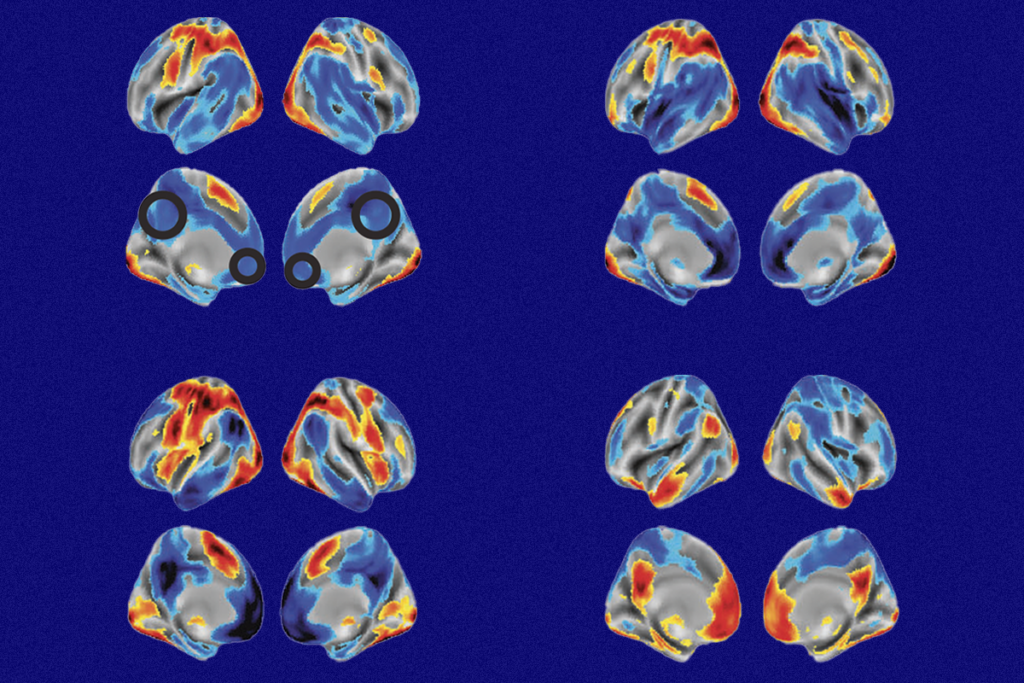

air and his colleagues analyzed brain scans — both structural and functional magnetic resonance images — collected as part of three large collaborative efforts: the Adolescent Brain Cognitive Development study, the Human Connectome Project and the UK Biobank. To simulate experiments of different sizes, they sampled scans from 25 to 32,572 people at a time and evaluated the strength of the correlations between the participants’ brain structure or function and their performance on tests of cognition and mental health.Datasets of fewer than 1,000 participants produced a wide range of results, the team found, including some that appeared to be significant. Some experiments run with 25 participants, for example, identified a strong positive link between resting-state functional connectivity and cognitive ability. Others of the same size, however, resulted in strong negative correlations between those same measures, and some found no link.
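The resampling idea can be illustrated with a toy simulation. The sketch below is not the study's code or data: it builds a synthetic population in which a "brain" measure and a "behavior" score share an assumed weak true correlation of 0.1 (a value picked only for illustration), then repeatedly draws subsamples of 25 and of 2,000 people and compares how widely the estimated correlations spread. All function names and parameters are hypothetical.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def make_population(size, true_r, rng):
    """Synthetic (brain, behavior) scores with correlation near true_r."""
    noise_scale = math.sqrt(1 - true_r ** 2)
    brain, behavior = [], []
    for _ in range(size):
        x = rng.gauss(0, 1)
        y = true_r * x + noise_scale * rng.gauss(0, 1)
        brain.append(x)
        behavior.append(y)
    return brain, behavior


def subsample_correlations(brain, behavior, n, repeats, rng):
    """Correlation estimates from repeated random subsamples of size n."""
    indices = range(len(brain))
    estimates = []
    for _ in range(repeats):
        chosen = rng.sample(indices, n)  # sample people without replacement
        estimates.append(
            pearson_r([brain[i] for i in chosen], [behavior[i] for i in chosen])
        )
    return estimates


rng = random.Random(0)  # fixed seed so the run is repeatable
brain, behavior = make_population(20000, 0.1, rng)  # 0.1 is an assumption

small = subsample_correlations(brain, behavior, 25, 500, rng)
large = subsample_correlations(brain, behavior, 2000, 200, rng)

print(f"n=25:   r ranges from {min(small):+.2f} to {max(small):+.2f}")
print(f"n=2000: r ranges from {min(large):+.2f} to {max(large):+.2f}")
```

In a run like this, the small subsamples should scatter on both sides of zero, whereas the large ones cluster tightly around the weak true value, which mirrors the pattern the team describes.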

Because of publishing biases, the experiments that happen to result in strong correlations are those that end up getting published, Fair says, whereas the more commonly found non-significant results “don’t see the light of day.” And this bias, he says, leads to an inflated effect size in the literature: The smallest studies have the potential to falsely report the strongest connections.
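The inflation Fair describes can also be sketched numerically. The toy example below is an illustration under stated assumptions, not the team's analysis: it simulates 2,000 hypothetical studies of 25 participants each with an assumed true correlation of 0.1, "publishes" only those that cross the conventional significance cutoff (roughly |r| > 0.396 for 25 participants at p < 0.05, two-tailed), and compares the average published effect with the average across all studies.

```python
import math
import random

TRUE_R = 0.1        # assumed weak true correlation (illustrative only)
N_PER_STUDY = 25    # participants per simulated study
N_STUDIES = 2000    # number of simulated studies
R_CRITICAL = 0.396  # approximate two-tailed p < 0.05 cutoff for n = 25


def simulate_study_r(n, true_r, rng):
    """Run one simulated study and return its sample Pearson correlation."""
    noise_scale = math.sqrt(1 - true_r ** 2)
    xs = [rng.gauss(0, 1) for _ in range(n)]
    ys = [true_r * x + noise_scale * rng.gauss(0, 1) for x in xs]
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


rng = random.Random(1)  # fixed seed so the run is repeatable
all_rs = [simulate_study_r(N_PER_STUDY, TRUE_R, rng) for _ in range(N_STUDIES)]

# Only "significant" results make it into the simulated literature.
published = [r for r in all_rs if abs(r) > R_CRITICAL]

mean_all = sum(all_rs) / len(all_rs)
mean_published_abs = sum(abs(r) for r in published) / len(published)

print(f"published: {len(published)} of {N_STUDIES} studies")
print(f"mean r across all studies:      {mean_all:+.2f}")
print(f"mean |r| in published studies:  {mean_published_abs:.2f}")
```

Because every "published" study has to clear the cutoff, the published average is at least about 0.4 by construction, several times the assumed true effect, even though the average across all simulated studies stays close to it.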

When Fair and his colleagues expanded their analysis to include thousands of samples, they found only a weak correlation between functional connectivity and cognitive ability, for example. But they were able to replicate the findings across all three datasets, suggesting the effect is real.

“What this paper is showing is that the brain-behavior relationship has a very, very small effect size — at least in an unselected population,” says Sébastien Jacquemont, associate professor of pediatrics at the University of Montreal in Canada, who was not involved in the work. In a study of people who have a specific condition, such as a deletion at chromosome site 22q11.2, the correlation between brain structure and activity or behavior is likely much stronger — meaning fewer samples are needed, Jacquemont says.

Because autism is a heterogeneous condition, researchers may need to take further steps — such as subgrouping participants based on shared traits.

The findings shouldn’t raise any alarms about neuroimaging, says study lead investigator Scott Marek, instructor in psychiatry at Washington University in St. Louis, Missouri.

“It’s not that there’s something wrong with imaging, and that’s why there’s a failure of replication,” he says. Any experiment that relies on correlational analyses will run into similar issues if the sample sizes are too small, just as genetics studies did in previous years, he says.

Geneticists overcame this challenge by gathering large datasets through consortia and by committing to open data practices, Marek says. The neuroimaging field has already begun to do the same — through the types of large-scale databases the new study relied on, as well as autism-specific initiatives such as the Autism Brain Imaging Data Exchange (ABIDE) and Enhancing Neuro Imaging Genetics through Meta Analysis (ENIGMA) projects.

Another option is to design experiments that are suited to smaller sample sizes, the team says. Longitudinal studies and treatment studies, for example, enable researchers to compare multiple brain scans from the same individual, which strengthens the signal and obviates the need for larger numbers of participants.

Rather than viewing the results of the study as a setback for the field, the team sees it as a “breakthrough,” says study co-senior investigator Nico Dosenbach, associate professor of neurology at Washington University in St. Louis.

“Recognizing why something isn’t working is always a critical step for making it work,” Dosenbach says. That wasn’t possible before these large neuroimaging studies existed. But now that they do, the field can learn from them, he says. “It’s all good news.”