People with autism have trouble processing sight, sound

People with autism tend to be less efficient than controls at integrating what they hear with what they see, according to unpublished results presented today at the 2014 Society for Neuroscience annual meeting in Washington, D.C.

This effect is seen specifically in relation to speech but not other types of sounds, suggesting a mechanism that contributes to language problems characteristic of the disorder.

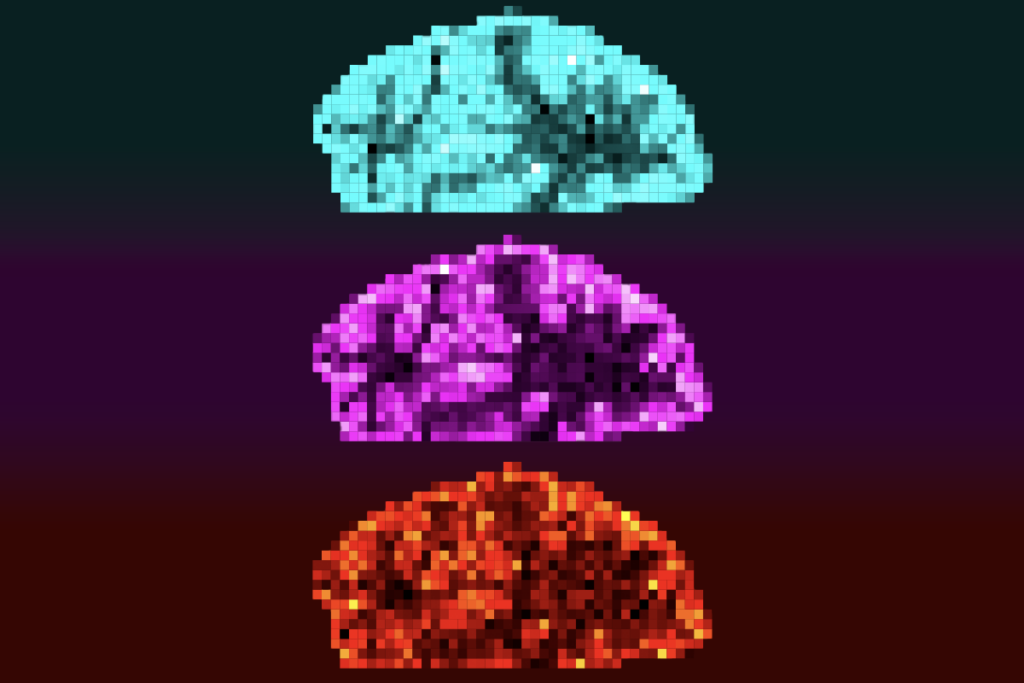

A variety of studies have used behavioral tests and measures of brain connectivity to show that people with autism struggle to integrate information from different senses. The new study uses functional magnetic resonance imaging to measure the blood-oxygen-level-dependent (BOLD) signal, a proxy for energy use in the brain, to show just how hard people with autism work to put speech together.

In the study, 16 adults with autism and 18 controls watched short videos while resting in a magnetic resonance imaging scanner. One version of a video showed a woman telling a simple story, whereas another version had the sound of the storyteller’s words half a second out of sync with her lip movements.

Typical adults are known to process speech more efficiently — that is, their brains use less energy — when sight and sound are aligned than when they are out of sync. In line with that, the controls in the study showed an 18 percent leap in efficiency in the superior temporal sulcus when they watched the synchronous video compared with the asynchronous one. (The researchers focused on this brain region because it plays a role in language and social information processing, and has been implicated in autism.)

But in individuals with autism, the synchronized video produced only about a 5 percent increase in efficiency in the region over the asynchronous version.

“They’re not getting this boost in processing efficiency of audiovisual speech,” says Ryan Stevenson, a postdoctoral researcher working with Morgan Barense and Susanne Ferber at the University of Toronto, who presented the work.

In other words, for a person with autism, it’s not much easier to process speech in a normal video than in the sort of video that many adults would find maddeningly out of sync.

This difference in efficiency between people with autism and controls turned up only in the context of language.

The researchers also showed the study participants a video of a woman making non-speech sounds: calling “Woooo!”, smacking her lips and so on. Both the participants with autism and the controls showed about a 7 percent increase in efficiency for the synchronous version of this video.

The results hint at the neural underpinnings of the ‘intense world theory’ of autism, Stevenson says, given that the everyday world is crammed full of all sorts of sensory stimuli.

“If you’re processing all these things separately and really have to work, of course it’s overwhelming,” he says. “You’re doubling your perceptual world.”

The findings also suggest strategies that could help people with autism integrate what they see and what they hear more effectively. For example, Stevenson says, having simpler elementary school classroom environments could help just by giving children with autism less sensory information to process.

He and his colleagues are also working on perceptual training, an intervention that gives people more practice at integrating information from different senses.

For more reports from the 2014 Society for Neuroscience annual meeting, please click here.

Recommended reading

Expediting clinical trials for profound autism: Q&A with Matthew State

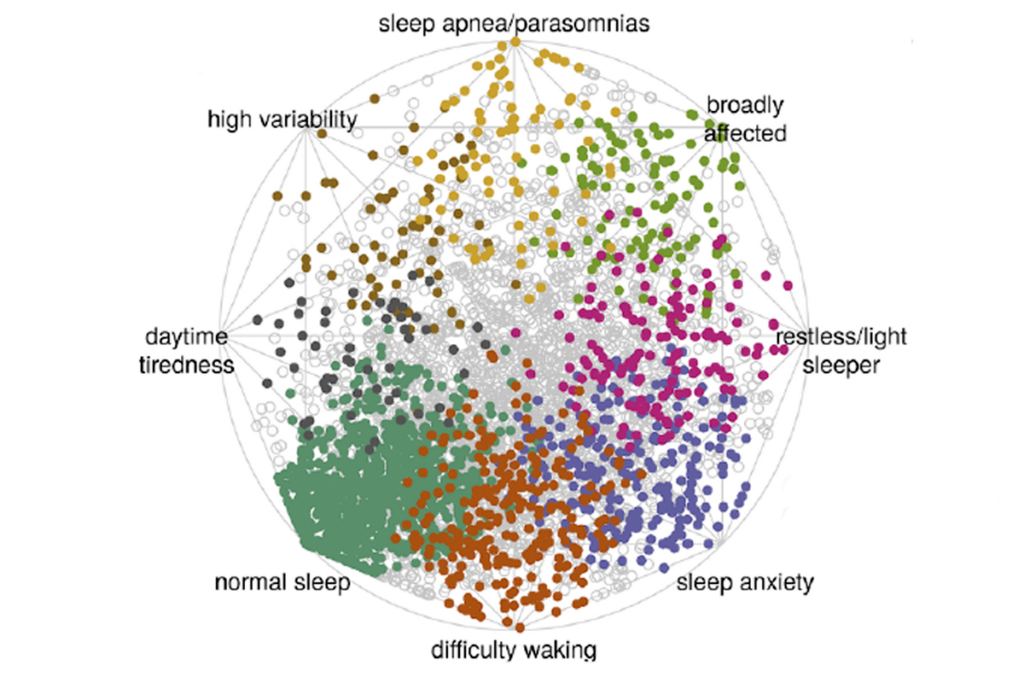

Too much or too little brain synchrony may underlie autism subtypes

Explore more from The Transmitter

Mitochondrial ‘landscape’ shifts across human brain