Peer review of methods before study’s onset may benefit science

‘Registered reports’ — a type of paper in which experimental protocols are reviewed before the study begins — may make neuroscience studies more rigorous and reproducible.

Everyone seems to be talking about how to increase the rigor, reproducibility and transparency of science. One interesting effort is registered reports, a type of journal article in which researchers submit their experimental protocol for peer review before doing the experiments.

The idea is that sound research questions and high-quality methodology should be more highly valued than mere novelty or provocative results. The practice may help prevent questionable research practices, such as poor statistics or cherry-picking results.

Although the ultimate goal is to improve the quality of scientific research, there are benefits for researchers as well. The journals that accept registered reports guarantee publication regardless of whether hypotheses are confirmed; this helps researchers avoid the temptation to fish for significant findings.

Still, other aspects of the process may give researchers pause. Will an added peer-review process slow down publication? Will binding oneself to a particular protocol limit experimental exploration?

Our research group uses functional magnetic resonance imaging (fMRI) to study cognitive flexibility — the capacity to switch between mental processes to appropriately respond to environmental stimuli — in children with autism. In February, we submitted a registered report to Developmental Science that was designed to explore the neural basis of cognitive flexibility in children with autism. Our study involves a task that we adapted from the developmental psychology literature, validated and used with fMRI for the first time.

Our initial experience with registered reports has been positive. So far, we’ve found the process fast and flexible enough to permit publication with minimal delays to the scientific process. What’s more, the early peer review and approval of our methodology promises to offer significant benefits for our study and for science in general.

Provisional acceptance:

Nearly 150 journals accept registered reports in addition to traditional single-stage submissions.

These include several prominent psychology, neuroscience and psychiatry journals, such as Developmental Science, NeuroImage, Nature Human Behaviour and Cortex.

Registered reports entail a two-step publication process. In stage 1, researchers submit a proposed study (the equivalent of the 'introduction' and 'methods' sections of a traditional manuscript) for peer review. If the reviewers judge the protocol to be of high quality, the journal gives the paper a provisional acceptance, meaning it will accept the final report as long as the researchers follow the registered methods.

The researchers then collect and analyze data in accordance with the registered protocol. In stage 2, they submit their results and discussion, and the final product is published.

Our group’s test run with registered reports is in progress: Our stage 1 report was provisionally accepted, and we are in the midst of data collection.

My first concern about using registered reports was that waiting for the stage 1 peer review would delay the start of our data collection. This turned out not to be the case: Our stage 1 report underwent two rounds of peer review and was accepted within four months of the date we submitted it. Adding four months to our timeline was not a problem, although if it had taken much longer we might have started to worry.

Now we face two external deadlines. One deadline is the date we told the editors we would submit the stage 2 report; the other marks the end of our five-year grant for the project from the National Institute of Mental Health.

Although we must hustle to meet these deadlines, we don’t feel that the two-step process added an unusual amount of time or pressure. What’s more, we were able to cite the stage 1 acceptance in our latest grant progress report.

Exploratory analyses:

Another concern was that our stage 1 report would lock in a detailed data-analysis plan when there was a good chance we would want to alter that plan. All neuroimaging researchers face the challenge of keeping up with rapidly changing methods. Best practices for data analysis in the field are a moving target; guidelines can quickly become outdated1. For instance, acceptable thresholds for reporting results from fMRI studies are constantly being debated and revised2.

New methods of data analysis may even require rethinking an experimental design. In our study, we ask children to complete a cognitive flexibility task within a 12-second trial period. We proposed a partial-trial design to tease apart different stages of the task, but we may conduct additional exploratory analyses using newer methods as they become available3.

Luckily, most journals that publish registered reports allow for ‘exploratory analyses’ that are not specified in stage 1. Still, the risk remains that our plan may become obsolete by the time we’re ready to write our stage 2 report.

The registered-report format also raises questions about ownership of the work, particularly for research that takes a long time to complete. We study developmental processes in clinical populations, for which data collection can take years. In our lab, graduate students, postdoctoral fellows and research assistants typically take first authorship on the projects for which they do the bulk of data collection and analysis. When we submitted our stage 1 report, I realized that many lab members who worked on it would no longer be in the lab when the time came to submit stage 2. We haven’t come up with a good solution for how to manage appropriate authorship credit with two formal submissions over a period of years.

Important advice:

The registered-report process forced us to create, explicitly and deliberately, a detailed recruitment plan, data-collection protocol and data-analysis plan prior to data collection.

Our project benefited from the suggestions provided by anonymous reviewers; one comment prompted us to rethink how we analyze separate stages of the cognitive flexibility task. Crucially, this happened before we went to the trouble of collecting expensive and laborious brain-imaging data from children.

Another benefit is that no matter the outcome, our results will eventually be published. Null results are informative, especially from well-designed studies, but they are not always easy to publish.

We have long considered ourselves proponents of the broader open-science movement, both by sharing data and by publishing preprints on bioRxiv4,5,6. Autism researchers, especially those conducting brain-imaging studies, have struggled to make sense of contradictory results7,8.

If registered reports ensure that clinical neuroscience research is more rigorous and reproducible, then we owe it to ourselves, the greater scientific enterprise and the public to adopt the practice.

Lucina Q. Uddin is associate professor of psychology at the University of Miami in Florida.

References:

- Nichols T.E. et al. Nat. Neurosci. 20, 299-303 (2017)

- Eklund A. et al. Proc. Natl. Acad. Sci. USA 113, 7900-7905 (2016)

- Ruge H. et al. NeuroImage 47, 501-513 (2009)

- Di Martino A. et al. Mol. Psychiatry 19, 659-667 (2014)

- Uddin L.Q. and Karlsgodt K.H. J. Clin. Child Adolesc. Psychol. 47, 483-497 (2018)

- Dajani D.R. et al. bioRxiv (2018)

- Uddin L.Q. et al. Front. Hum. Neurosci. 7, 458 (2013)

- Müller R.A. et al. Cereb. Cortex 21, 2233-2243 (2011)

Recommended reading

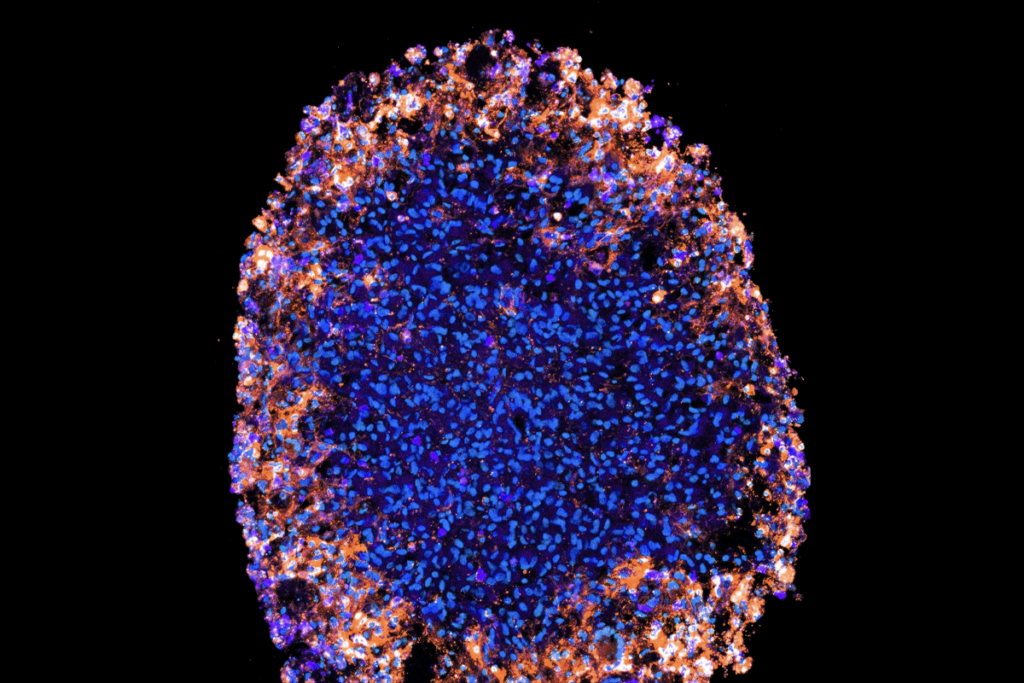

Among brain changes studied in autism, spotlight shifts to subcortex

Home makeover helps rats better express themselves: Q&A with Raven Hickson and Peter Kind

Explore more from The Transmitter

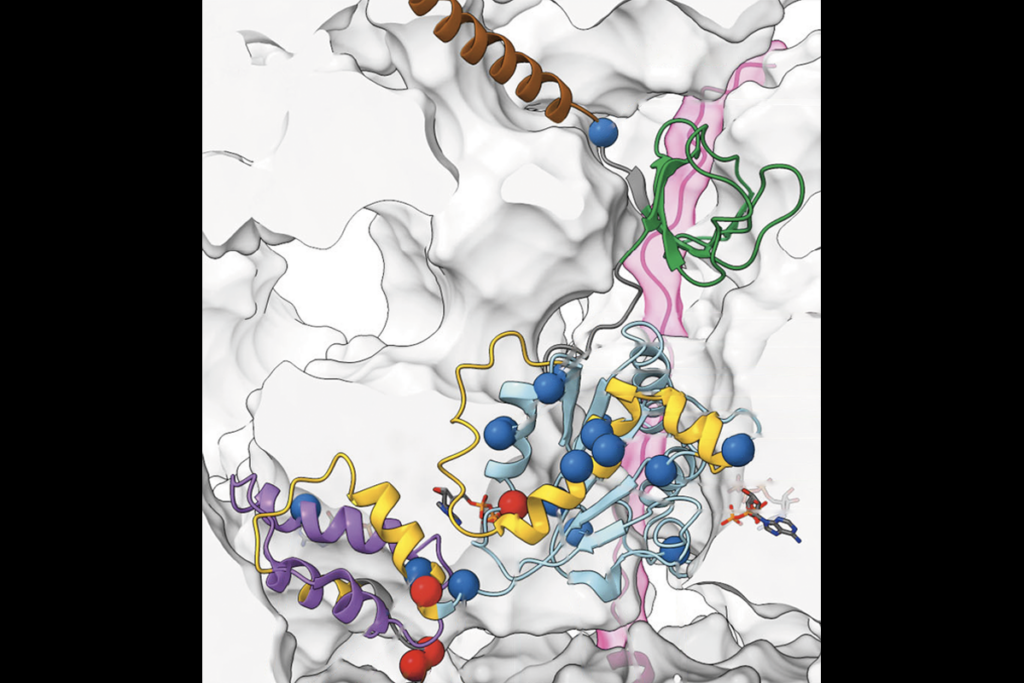

Dispute erupts over universal cortical brain-wave claim

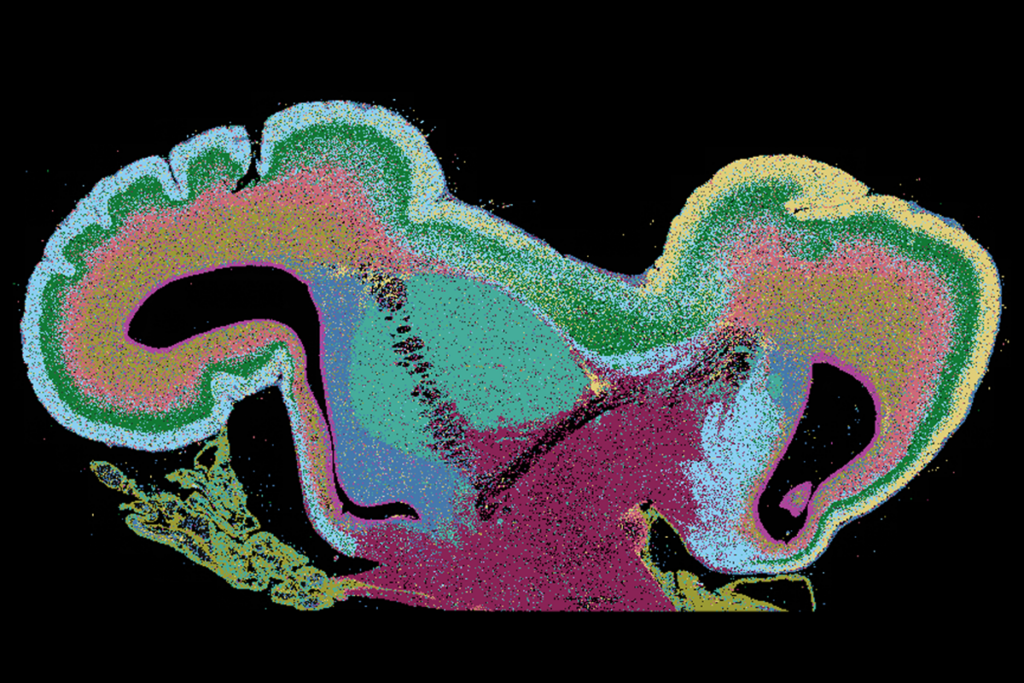

Waves of calcium activity dictate eye structure in flies