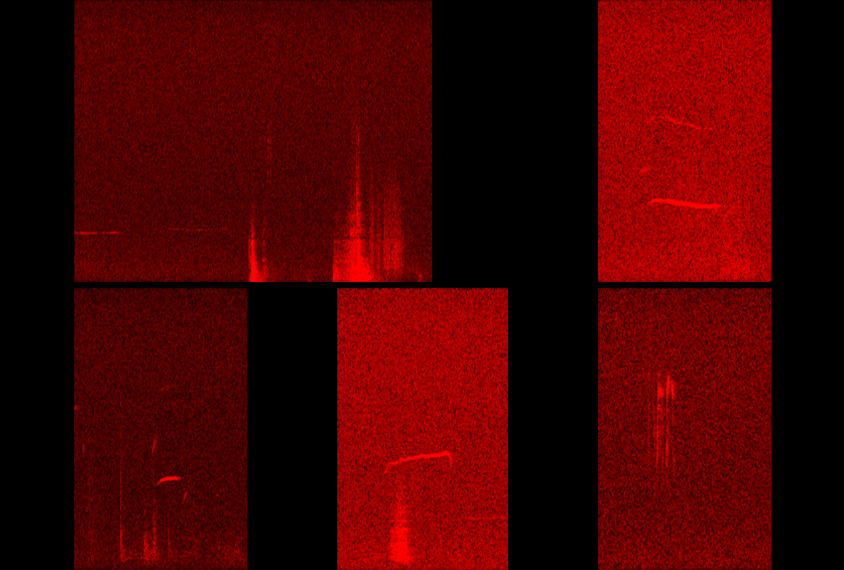

People speak; rats squeak. But the rodents also communicate through inaudible ultrasonic vocalizations, which offer insights into their social behavior. A new software tool called TrackUSF can aid researchers collecting ultrasonic data by recording the vocalizations and detecting differences between them, according to a new study.

Whether ultrasonic vocalizations are solid analogs for people’s communication abilities is under debate, but the tool could help research on animal models of autism; such vocalizations are altered in animals with some autism-linked gene mutations, previous research has found.

TrackUSF employs an approach often used by human speech-recognition software: identifying signatures and comparing them with each other, says lead investigator Shlomo Wagner, associate professor of neurobiology and social behavior at the University of Haifa in Israel.

“The idea of TrackUSF is to analyze the signature of [ultrasonic vocalizations] emitted by different groups of animals, such as [autism] models and their wildtype littermates, to compare them and to identify differences between them,” he says.

Wagner and his team classified the signatures using a measure called Mel-frequency cepstral coefficients (MFCCs). The coefficients are meant to represent what a listener actually hears: they summarize a sound's spectrum after its frequencies have been grouped into bands spaced to mimic how the ear perceives pitch.
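To make the idea concrete, here is a minimal Python sketch of how MFCCs are commonly computed, using only NumPy. It is not TrackUSF's code. The sampling rate, frame size, filter count and 20 kHz lower cutoff are illustrative assumptions, and the sketch uses the standard mel formula, which was designed around human hearing rather than ultrasound.

```python
import numpy as np

def hz_to_mel(f):
    # Common (HTK-style) mel formula, tuned to human pitch perception
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(n_filters, n_fft, sample_rate, f_min, f_max):
    # Triangular filters whose centers are evenly spaced on the mel scale
    mel_points = np.linspace(hz_to_mel(f_min), hz_to_mel(f_max), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sample_rate).astype(int)
    fbank = np.zeros((n_filters, n_fft // 2 + 1))
    for i in range(1, n_filters + 1):
        left, center, right = bins[i - 1], bins[i], bins[i + 1]
        for k in range(left, center):
            fbank[i - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):
            fbank[i - 1, k] = (right - k) / max(right - center, 1)
    return fbank

def dct_ii(x, n_out):
    # Orthonormal DCT-II along the last axis, keeping the first n_out coefficients
    n = x.shape[-1]
    k = np.arange(n_out)[:, None]
    j = np.arange(n)[None, :]
    basis = np.cos(np.pi * k * (2 * j + 1) / (2 * n))
    scale = np.full(n_out, np.sqrt(2.0 / n))
    scale[0] = np.sqrt(1.0 / n)
    return (x @ basis.T) * scale

def mfcc(signal, sample_rate, n_fft=512, hop=256, n_filters=26,
         n_coeffs=13, f_min=0.0, f_max=None):
    f_max = sample_rate / 2 if f_max is None else f_max
    # 1. Slice the signal into overlapping frames and taper each with a Hann window
    n_frames = 1 + (len(signal) - n_fft) // hop
    idx = np.arange(n_fft)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = signal[idx] * np.hanning(n_fft)
    # 2. Power spectrum of each frame
    power = np.abs(np.fft.rfft(frames, n=n_fft)) ** 2 / n_fft
    # 3. Pool the power into mel-spaced bands, then compress with a log
    fbank = mel_filterbank(n_filters, n_fft, sample_rate, f_min, f_max)
    log_energies = np.log(power @ fbank.T + 1e-10)
    # 4. Decorrelate band energies; low-order coefficients describe spectral shape
    return dct_ii(log_energies, n_coeffs)

if __name__ == "__main__":
    sr = 250_000  # hypothetical ultrasonic sampling rate (Hz)
    t = np.arange(sr // 10) / sr  # 0.1 s of samples
    low = np.sin(2 * np.pi * 22_000 * t)   # synthetic 22 kHz "call"
    high = np.sin(2 * np.pi * 50_000 * t)  # synthetic 50 kHz "call"
    a = mfcc(low, sr, f_min=20_000)
    b = mfcc(high, sr, f_min=20_000)
    print(a.shape)  # one 13-coefficient vector per frame
    print(np.linalg.norm(a.mean(axis=0) - b.mean(axis=0)))
```

Averaging the per-frame coefficients gives one compact "signature" per recording; comparing such vectors between groups of animals is one simple way to detect differences, though the study's actual comparison method may differ.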

The advantages of TrackUSF, Wagner says, are that it can be used for any condition, on many animals at once, and users generally do not need explicit training. “Moreover, unlike previous tools, TrackUSF may be used for other animals besides rats and mice, such as bats.”

Whereas some vocalization analysis tools require experts to train them on reference or baseline audio samples ahead of time, TrackUSF is designed to identify changes in vocalizations between groups without any prior training, Wagner says.

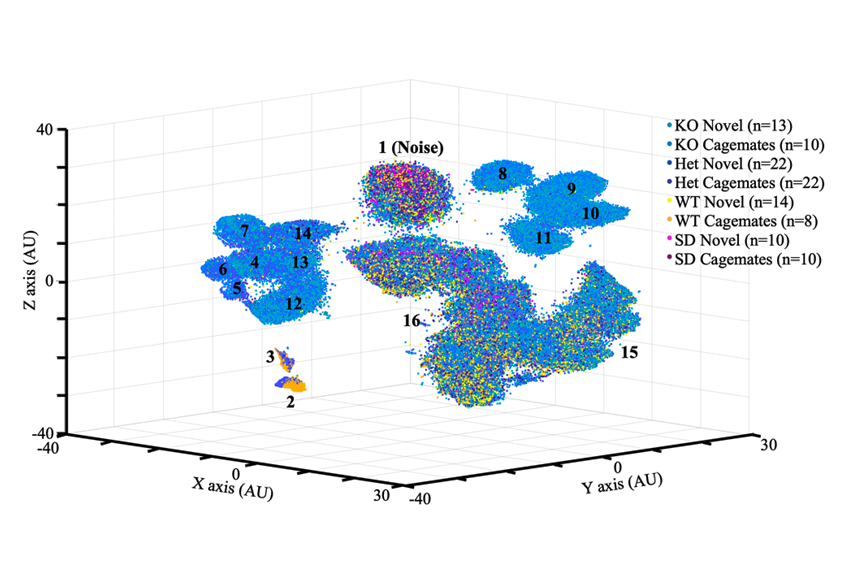

To this end, he and his team tested the tool on wildtype rats and rats missing one or both copies of the autism-linked gene SHANK3. Across 109 recording sessions, TrackUSF classified the animals’ calls into distinct clusters, grouped by both the animal making the vocalization and the intended listener. For instance, wildtype and SHANK3 rats tended to make similar sounds when communicating with their cagemates, but not when they communicated with unfamiliar rats.

Adapting human vocal-analysis techniques to rodent squeaks creates problems when it comes to processing the sounds, says Ryosuke Tachibana, associate professor of behavioral neuroscience at the University of Tokyo in Japan, who was not involved in the work. “MFCC emphasizes frequency resonance patterns in the vocal tract using signal harmonic structures,” which makes it a good unit of measure for analyzing the human voice, he says. Mouse vocalizations, however, usually do not have harmonics, so MFCC may not be the best measure for them.

TrackUSF is not designed to characterize ultrasonic vocalizations in extensive detail, Wagner notes. But even high-level screening could be useful for researchers looking for any change from baseline characteristics in rats.

“For example, a drug company may use it to screen its drug library and find which of the drugs restores typical vocalizations in SHANK3 rats, pointing to possible drug treatments for Phelan-McDermid syndrome,” he says. “All they need to do is to place ultrasonic microphones in the animal cages and use TrackUSF for analysis.”