Looking directly in the eyes engages region of the social brain

The social brain has a sweet spot that activates when people look each other directly in the eyes but not when they look at eyes in a video.

Researchers presented the unpublished work yesterday at the 2019 Society for Neuroscience annual meeting in Chicago, Illinois.

Eye contact is key to social interaction, says study leader Joy Hirsch, director of the Brain Function Laboratory at Yale University. “Eye contact opens the gate between two perceptual systems of two individuals, and information flows.”

People with autism often avoid eye contact. But isolating what happens in the brain during eye contact is tricky because the studies typically involve a person lying alone in a scanner. Some researchers are beginning to find creative ways to capture brain responses during real-life social interactions.

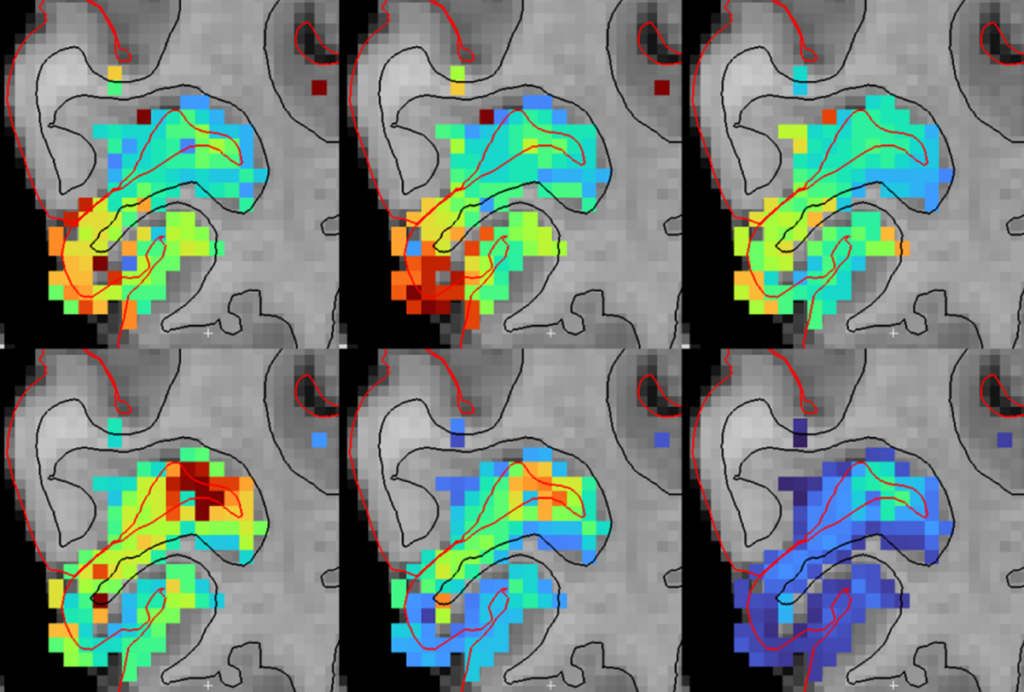

Hirsch’s solution to the problem is functional near-infrared spectroscopy (fNIRS). In this method, participants wear a cap embedded with light-detecting sensors that measure blood flow, a proxy for neural activity in the outer layer of the brain.

The team first recorded brain activity in people sitting across from each other at a table. A recorded tone prompted each participant to make eye contact with their partner and then to shift their gaze to the side, for three seconds at a time [1]. The participants then repeated these steps with a face on a video screen.

Smart setup:

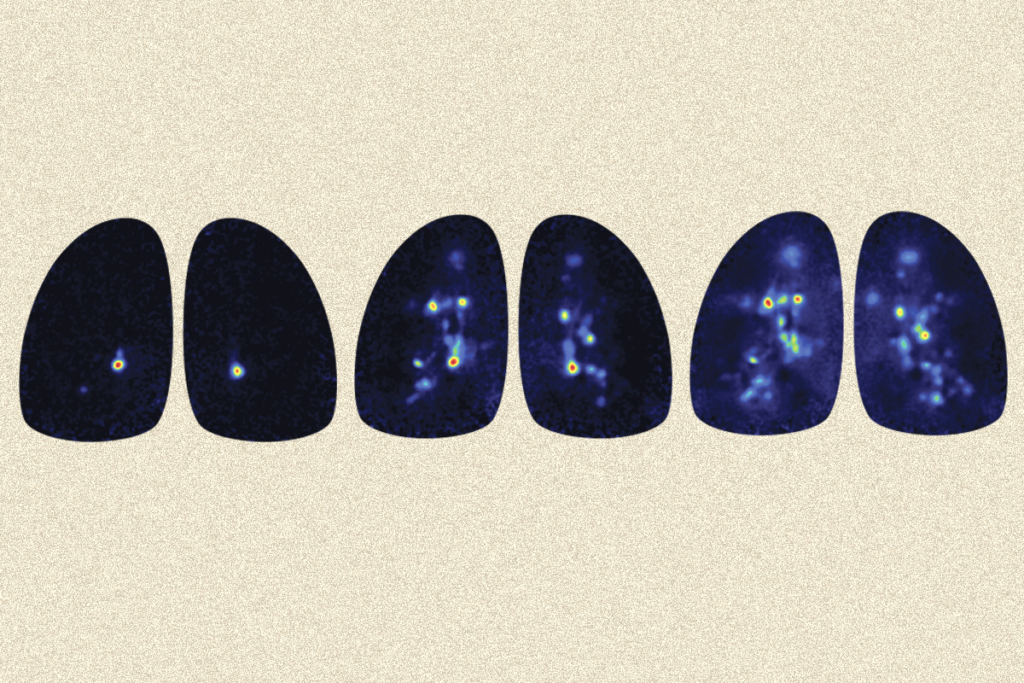

In a second experiment, participants looked at each other through a panel of ‘smart glass.’ They listened to a recorded story while making natural facial expressions, including frequent eye contact, in response to the narrative. Meanwhile, the smart glass switched from clear to occluded on a 15-second cycle.

The researchers also recorded brain responses while the participants watched a face in a video or looked at a blank screen for 15 seconds at a time. They have data from 30 people for the 3-second experiment and from 16 people for the 15-second one.

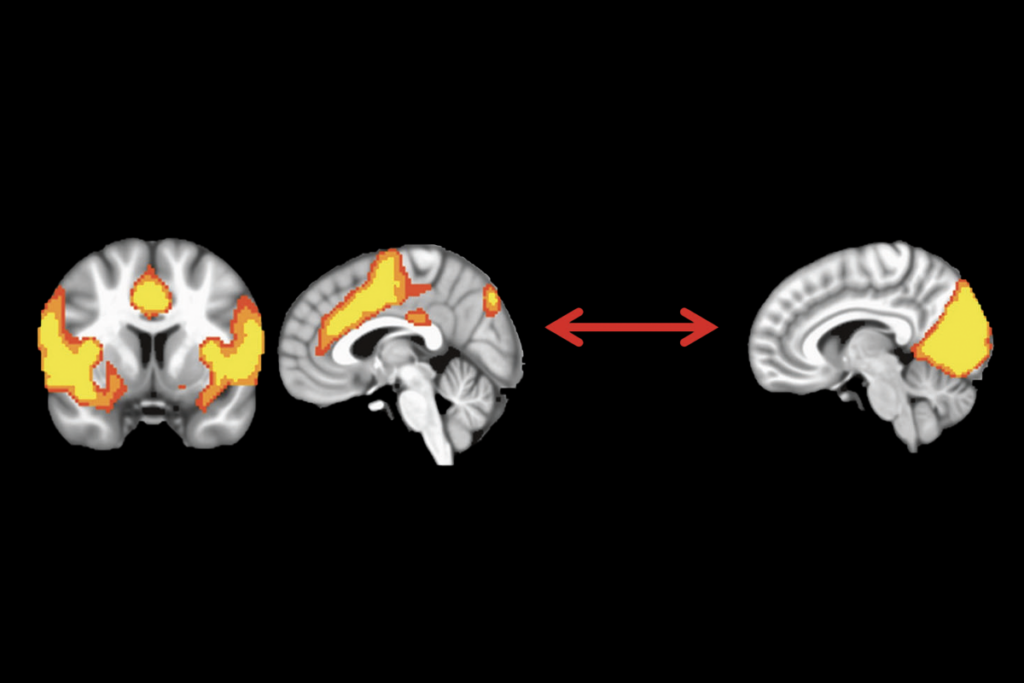

In both experiments, live eye contact activates areas of the right temporal parietal junction more strongly than looking at eyes on a screen does. The region is known to be “a nexus of social sensitivity,” Hirsch says.

The researchers combined the 15-second imaging data with eye-tracking data and found that direct, simultaneous eye contact activates areas associated with high-level visual processing, as well as a part of the temporal parietal junction called the right angular gyrus.

The more often a person makes direct eye contact, the stronger the signal in the right angular gyrus. “That’s pretty good evidence — when you poke it and it jumps, and you keep poking it and it jumps higher — that you’re on to a causal relationship,” Hirsch says.

The researchers are conducting the three-second version of the experiment in autistic adults.

References:

- [1] Hirsch J. et al. Neuroimage 157, 314-330 (2017)
