Interactive virtual reality system teaches social skills

A virtual reality system equipped with an eye-tracking device helps teenagers with autism learn to engage with others, according to a study published 23 May in IEEE Transactions on Neural Systems and Rehabilitation Engineering [1].

Technologies such as video games and iPad apps are showing promise for teaching children with autism social interaction skills. It may be that, like many typically developing teenagers, those with autism are more receptive to video games than to organized interventions with peers.

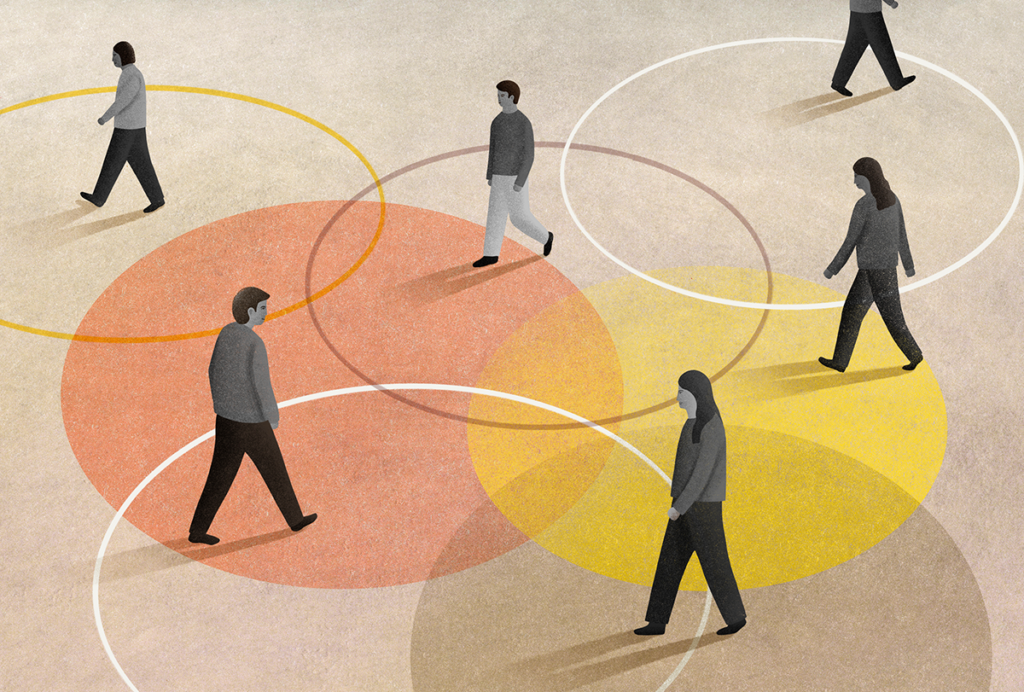

In the new study, the researchers developed a virtual reality system that simulates real-world interactions with computerized avatars. The system also integrates an eye-tracking device that collects data on the participants’ gaze and relays the information back to the system.

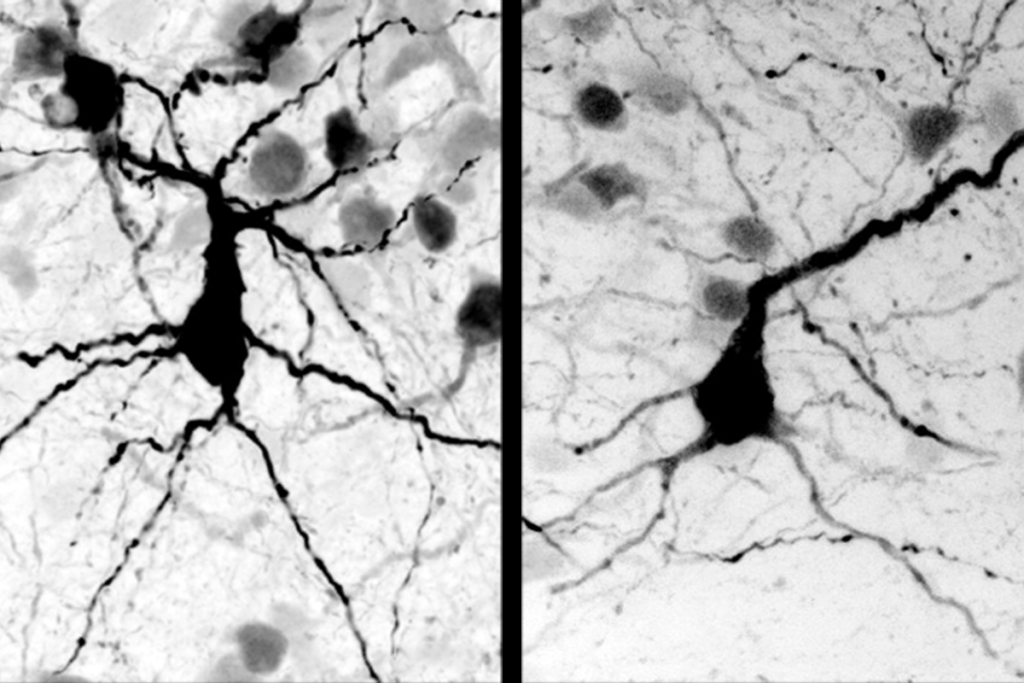

Individuals with autism look at others’ eyes less than controls do, and this may contribute to their difficulties in detecting social cues.

In the study, six adolescents with high-functioning autism watched computer avatars narrate a personal story in the format of a school presentation. The avatars blink like typical teenagers do and have facial expressions appropriate to the emotions in the story.

The system tracks how often and how long the participants look at various ‘regions of interest,’ such as the narrator’s face, an object — such as an inset screen with an image of the story — or the background. It also measures their pupil diameter and how often they blink.
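As a rough illustration of this kind of bookkeeping, the sketch below counts how often and for how long a stream of gaze samples falls in each region of interest. It is not the researchers' implementation: the rectangular regions, the fixed sampling period and every name (`Region`, `classify`, `summarize_gaze`) are assumptions made for the example, and pupil diameter and blink rate are left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    """A hypothetical rectangular region of interest in screen coordinates."""
    name: str
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def classify(x, y, regions, default="background"):
    """Return the name of the first region containing (x, y), else the default."""
    for region in regions:
        if region.contains(x, y):
            return region.name
    return default


def summarize_gaze(samples, regions, sample_period_s):
    """Count looks and total viewing time per region.

    samples: iterable of (x, y) gaze points taken at a fixed period (assumed).
    A "look" is counted each time the gaze enters a region from a different one.
    """
    stats = {}
    previous = None
    for x, y in samples:
        name = classify(x, y, regions)
        entry = stats.setdefault(name, {"looks": 0, "seconds": 0.0})
        entry["seconds"] += sample_period_s
        if name != previous:
            entry["looks"] += 1
        previous = name
    return stats


# Made-up coordinates and samples, for illustration only.
regions = [Region("face", 0, 0, 10, 10), Region("object", 20, 0, 30, 10)]
samples = [(5, 5), (5, 5), (25, 5), (50, 50), (5, 5)]
summary = summarize_gaze(samples, regions, sample_period_s=0.5)
```

Here the gaze enters the face region twice and stays there for three samples in total, so `summary["face"]` records two looks and 1.5 seconds.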

Researchers can vary the interactions for participants from different cultures. For example, the avatars in this study stood about three feet away from the participants and the program encouraged the participants to make eye contact with the avatars about 70 percent of the time, which is considered the norm in the West.

At the end of the story, the program asks the teenagers what emotion the avatar was feeling. The participants then get personalized feedback, based on whether they answered correctly and had the appropriate level of eye contact. For example, if they recognized the emotion, but did not make enough eye contact, the program tells them: “Your classmate did not know if you were interested in the presentation. If you pay more attention to her she will feel more comfortable.”
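The feedback rule described here can be pictured as a simple two-way decision. The sketch below is only one reading of it: treating the roughly 70 percent eye-contact norm as a hard threshold is an assumption, the function and case names are hypothetical, and only the one message quoted in the article is filled in. The other messages are not given in the article, so they are left as `None` rather than invented.

```python
# The only feedback message quoted in the article.
LOW_EYE_CONTACT_MESSAGE = (
    "Your classmate did not know if you were interested in the presentation. "
    "If you pay more attention to her she will feel more comfortable."
)

# Hypothetical case labels; messages not given in the article stay None.
MESSAGES = {
    "correct_emotion_enough_eye_contact": None,
    "correct_emotion_low_eye_contact": LOW_EYE_CONTACT_MESSAGE,
    "wrong_emotion_enough_eye_contact": None,
    "wrong_emotion_low_eye_contact": None,
}


def choose_feedback(emotion_correct, face_gaze_fraction, target=0.70):
    """Pick a feedback case from the answer and the share of time spent on the face.

    target=0.70 mirrors the roughly 70 percent norm mentioned in the article;
    using it as a strict cutoff is an assumption of this sketch.
    """
    answer = "correct_emotion" if emotion_correct else "wrong_emotion"
    contact = "enough_eye_contact" if face_gaze_fraction >= target else "low_eye_contact"
    case = f"{answer}_{contact}"
    return case, MESSAGES[case]


case, message = choose_feedback(emotion_correct=True, face_gaze_fraction=0.5)
```

With a correct answer but only half the time spent looking at the face, `case` is `"correct_emotion_low_eye_contact"` and `message` is the sentence quoted above.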

The system will eventually also provide real-time feedback based on the eye-tracking data, with the avatars changing their facial expressions or giving verbal feedback.

All six teenagers were absorbed in the interactions and seemed to enjoy using the system, based on how much they looked at the screen as well as reports from caregivers. When the teenagers were more engaged, they were about 14 percent more likely to look at faces and 60 percent less likely to look at the background.

References:

1. Lahiri U. et al. IEEE Trans. Neural Syst. Rehabil. Eng. Epub ahead of print (2011)
