Inconsistent prevalence estimates highlight studies’ flaws

How researchers design autism prevalence studies has a significant impact on the results, says Eric Fombonne.

The lore about autism is that prevalence rates are rising — leading many people to call it, misleadingly, an ‘epidemic.’ Even among scientists, many assume that the largest prevalence estimates are the most accurate.

But epidemiologists know that the prevalence depends greatly on the methods used in the study.

In January, for example, the National Health Interview Survey in the United States reported an autism prevalence of 2.76 percent in 2016, up slightly from 2.24 percent in 2014. However, a 2014 survey of 8-year-olds by the U.S. Centers for Disease Control and Prevention (CDC) reported a lower prevalence, at 1.69 percent. And preliminary data from a 2012 survey of 8-year-olds in South Carolina suggested a higher estimate: 3.6 percent.

What may not be obvious is that these studies varied greatly in their design, which contributed to the varying estimates of prevalence¹, says Eric Fombonne, professor of pediatrics at Oregon Health and Science University.

We asked Fombonne about how a study’s design influences prevalence — and in many cases, inflates the estimates.

Spectrum: Are studies of autism prevalence beginning to approach the true levels?

Eric Fombonne: We don’t know what the true autism prevalence is. In the past 5 to 10 years, the authors of studies finding high rates of autism have had the tendency to be a bit blind to the problems in their own studies. I’m concerned about the lack of critical appraisal in what we do.

S: How might a study overestimate autism prevalence?

EF: One study I participated in looked across all schoolchildren in one region in South Korea by sending questionnaires to all teachers and parents in the region. Children who scored above a cutoff for autism were then invited for a clinical evaluation to confirm the diagnosis.

But only some of the parents responded to the screening questionnaire, and then only some of the parents of the children invited for an evaluation agreed to bring in their children. Still, to calculate the prevalence, we had to make the audacious assumption that the children whose families participated are as likely to have autism as those who didn’t.

When we started the study, there were few services for autistic children in Korea. So parents of a child with autistic features might have been seeking a diagnosis for the child, and might therefore have been more likely to participate in our study than parents of typically developing children. If that is the case, the autism prevalence we obtained (2.64 percent) is likely an overestimate.
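The bias Fombonne describes can be made concrete with a little arithmetic. The Python sketch below is a hypothetical model, not the Korean study's actual analysis: it assumes a perfectly accurate clinical evaluation, treats every screen-negative child as non-autistic, and uses invented prevalence, screening and participation rates. The function `two_phase_estimate` and its parameters are illustrative names. It computes the prevalence a study would report if it assumed that families who take part resemble those who don't.

```python
"""Hypothetical sketch: how differential participation can bias a
two-phase (screen, then evaluate) prevalence estimate.

Every parameter value below is invented for illustration; none comes from
the South Korean or South Carolina studies."""


def two_phase_estimate(prev, sens, false_pos,
                       respond_case, respond_other,
                       evaluate_case, evaluate_other):
    """Return the prevalence a study would report if it assumed that
    participants at each phase resemble non-participants.

    prev            -- true prevalence in the population
    sens            -- chance an autistic child screens positive
    false_pos       -- chance a non-autistic child screens positive
    respond_*       -- chance a family returns the screening questionnaire
    evaluate_*      -- chance a screen-positive child is brought in for evaluation
    The clinical evaluation itself is assumed to be error-free.
    """
    # Phase 1: expected shares of the population whose families respond.
    resp_case = prev * respond_case
    resp_other = (1 - prev) * respond_other
    # Screen-positive children among respondents.
    pos_case = resp_case * sens
    pos_other = resp_other * false_pos
    screen_pos_rate = (pos_case + pos_other) / (resp_case + resp_other)
    # Phase 2: screen-positive children who are actually evaluated.
    eval_case = pos_case * evaluate_case
    eval_other = pos_other * evaluate_other
    confirmed_rate = eval_case / (eval_case + eval_other)
    # Naive estimate: extrapolate both observed rates to the whole population.
    return screen_pos_rate * confirmed_rate


if __name__ == "__main__":
    common = dict(prev=0.02, sens=0.9, false_pos=0.05)
    equal = two_phase_estimate(**common, respond_case=0.5, respond_other=0.5,
                               evaluate_case=0.6, evaluate_other=0.6)
    skewed = two_phase_estimate(**common, respond_case=0.7, respond_other=0.5,
                                evaluate_case=0.8, evaluate_other=0.6)
    print(f"equal participation:         {equal:.2%}")
    print(f"autism-skewed participation: {skewed:.2%}")
```

With these made-up numbers, equal participation yields 1.8 percent (the true 2 percent times the screen's 90 percent sensitivity, because screen-negative cases are never evaluated), whereas participation skewed toward families of autistic children yields roughly 3.0 percent, even though the true prevalence is identical in both scenarios.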

An unpublished study of autism rates in South Carolina based on the same design, with similarly low participation rates, makes comparable unchecked assumptions. So I worry that its high prevalence could be an overestimate as well.

S: Do the CDC prevalence studies make similar assumptions?

EF: No, the CDC does not attempt to assess everybody in a population. Instead, it looks in the medical and special education records of each child in a certain region to determine whether a child meets criteria for its surveillance definition of autism. Children with no relevant notations of social problems in their records are assumed not to have autism. This method is not subject to a bias from parent responses, although other types of bias are possible.

Still, it is not clear how accurate the CDC’s surveillance criteria for autism are. In a study designed to validate these criteria, researchers found that between 20 and 40 percent of children who met the CDC definition of autism did not actually have autism, so the CDC rates could be overestimates².
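To see how such misclassification feeds into a prevalence figure, here is a hypothetical Python sketch using the standard relation: true prevalence equals observed prevalence times positive predictive value, divided by sensitivity. Applying the validation study's 20 to 40 percent range to the 1.69 percent figure from the 2014 survey is an assumption made purely for illustration, as is the default of perfect sensitivity (no missed cases); `corrected_prevalence` is an illustrative name.

```python
"""Hypothetical sketch: correcting a records-based prevalence estimate
for misclassified cases. Inputs are illustrative assumptions."""


def corrected_prevalence(observed, ppv, sensitivity=1.0):
    """Return the prevalence implied by an observed case-finding rate.

    observed    -- prevalence reported by the surveillance method
    ppv         -- positive predictive value: share of flagged children
                   who truly have autism
    sensitivity -- share of truly autistic children the method flags;
                   the default of 1.0 assumes nobody is missed
    """
    true_flagged = observed * ppv        # remove false positives
    return true_flagged / sensitivity    # scale up for cases the method missed


if __name__ == "__main__":
    observed = 1.69  # percent, the 2014 CDC figure quoted earlier
    for share_false in (0.20, 0.40):
        adjusted = corrected_prevalence(observed, ppv=1 - share_false)
        print(f"{share_false:.0%} false positives -> {adjusted:.2f} percent")
```

Under those assumptions, the 1.69 percent figure would fall to about 1.35 or 1.01 percent. Any children the surveillance method misses (sensitivity below 1) would push the corrected estimate back up, so the sketch isolates one source of error rather than estimating the true rate.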

S: Are there any other problems with the CDC studies?

EF: Yes. One important flaw in the CDC criteria is the inclusion of pervasive developmental disorder not otherwise specified (PDD-NOS). PDD-NOS is an ill-defined category in an outdated version of the psychiatric manual, the Diagnostic and Statistical Manual of Mental Disorders. It may be loosely applied to children who do not meet full requirements for autism. The most recent CDC report doesn’t specify who has PDD-NOS, but in previous CDC studies, roughly 40 percent of the CDC ‘autism’ cases were in fact PDD-NOS.

Another issue is that of every 100 children the CDC researchers determine to have autism, only 80 have a reference to autism specifically in their medical or school records. So, one in five 8-year-old children the CDC decides has autism had never been picked up as autistic by any professional. At age 8, how is this likely? This proportion has remained unchanged in CDC surveys, despite a steady increase in autism awareness over the past 15 years. It is unfortunate that we cannot perform a direct in-depth investigation of these children.

S: Are some children inaccurately diagnosed with autism in real life?

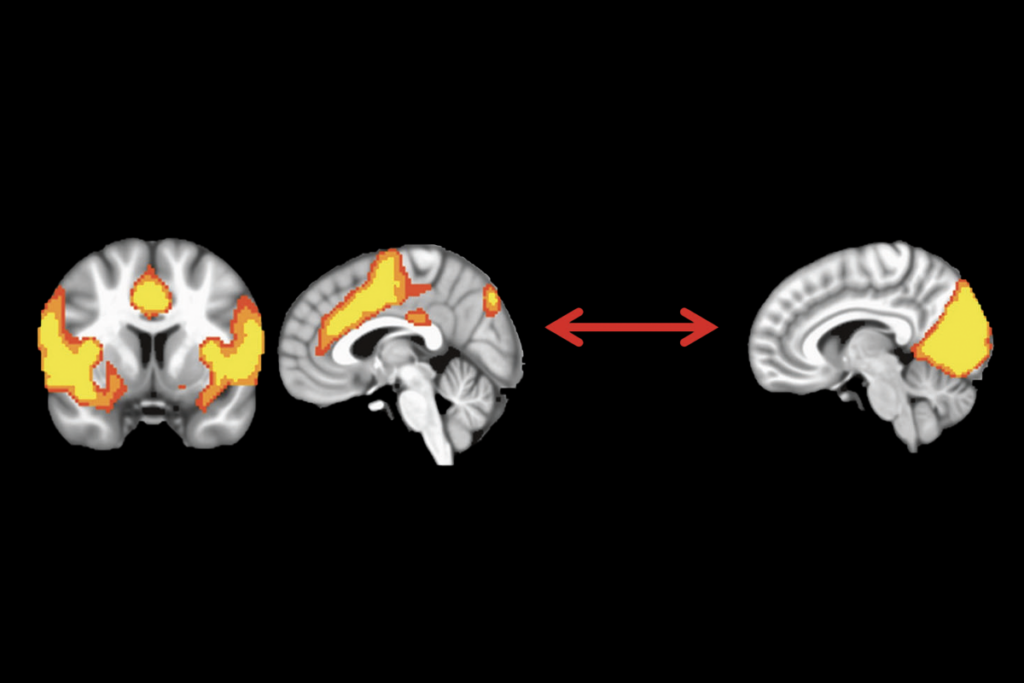

EF: Yes. I led a team that verified autism diagnoses prior to inclusion in a neuroimaging study. Trained researchers performed independent state-of-the-art assessments of over 200 children with an existing autism diagnosis. At least 30 percent of these children turned out to not have autism. It was mind-boggling. Some clinicians appear to diagnose autism in children who don’t have it simply because there are more support services available for children with autism than for children with other complex behavioral conditions.

I wonder about the long-term impact of misclassifying these children. It was disheartening to see so many children and teens who don’t have autism carrying a label of ‘autism’ at school and in their homes.

In my clinics 25 years ago, I remember explaining to parents who had no clue about autism why their child qualified for the diagnosis. Things have now reversed. Nowadays, some parents and professionals push for that diagnosis and resist a ‘not autism’ conclusion because it may come with less support.

S: Do you think some methods are better than others for estimating prevalence?

EF: Not really. There is no standardized way to do these studies. Each study has its unique strengths and limitations, and there is no quick fix to this problem. However, I see two ways to improve this area of research. One would be to add a longitudinal component to prevalence surveys, which so far provide only a static picture. Focusing on trajectories would better capture essential features of autism. Second, epidemiological studies will get better when biomarkers become available. These biological measures should reduce the infernal noise in our current autism assessments, which rely on behavior.

Meanwhile, we need to look carefully at each study we conduct and consider potential problems with its methodology. I worry that investigators are pressured to confirm high prevalence rates. I also worry that uncritical faith in diagnostic criteria and standardized instruments might lead researchers to disregard the profound measurement issues that persist in our field.

References:

Recommended reading

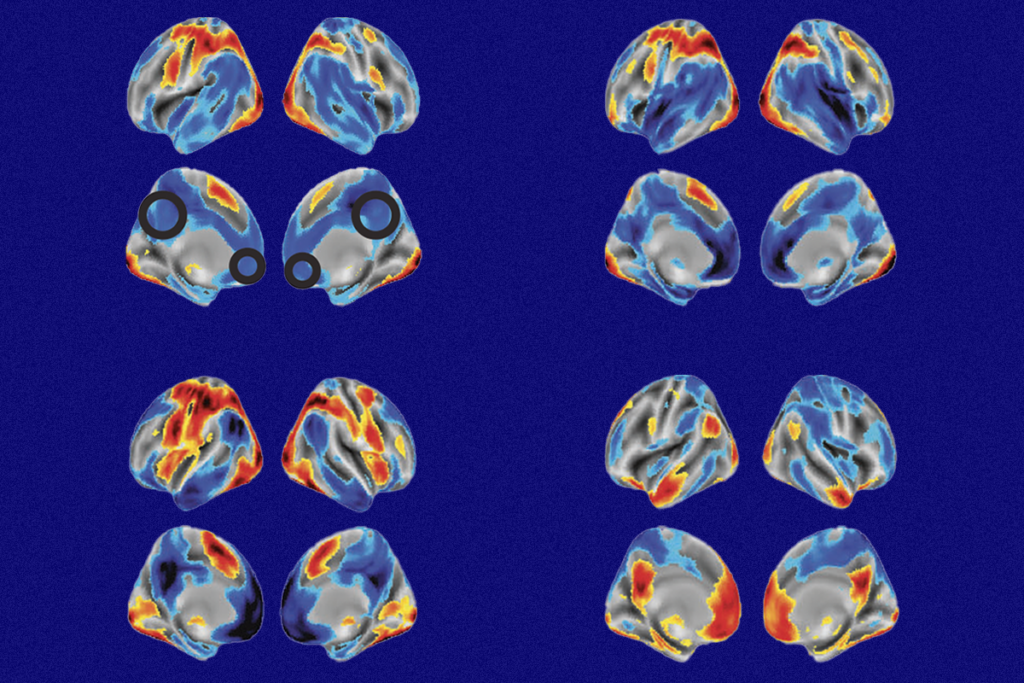

Too much or too little brain synchrony may underlie autism subtypes

Developmental delay patterns differ with diagnosis; and more

Split gene therapy delivers promise in mice modeling Dravet syndrome

Explore more from The Transmitter

During decision-making, brain shows multiple distinct subtypes of activity

Basic pain research ‘is not working’: Q&A with Steven Prescott and Stéphanie Ratté