Imaging techniques capture real-world social interaction

Three new approaches to brain imaging, presented Tuesday at the Society for Neuroscience annual meeting in New Orleans, allow researchers to probe how the brain responds to social situations.


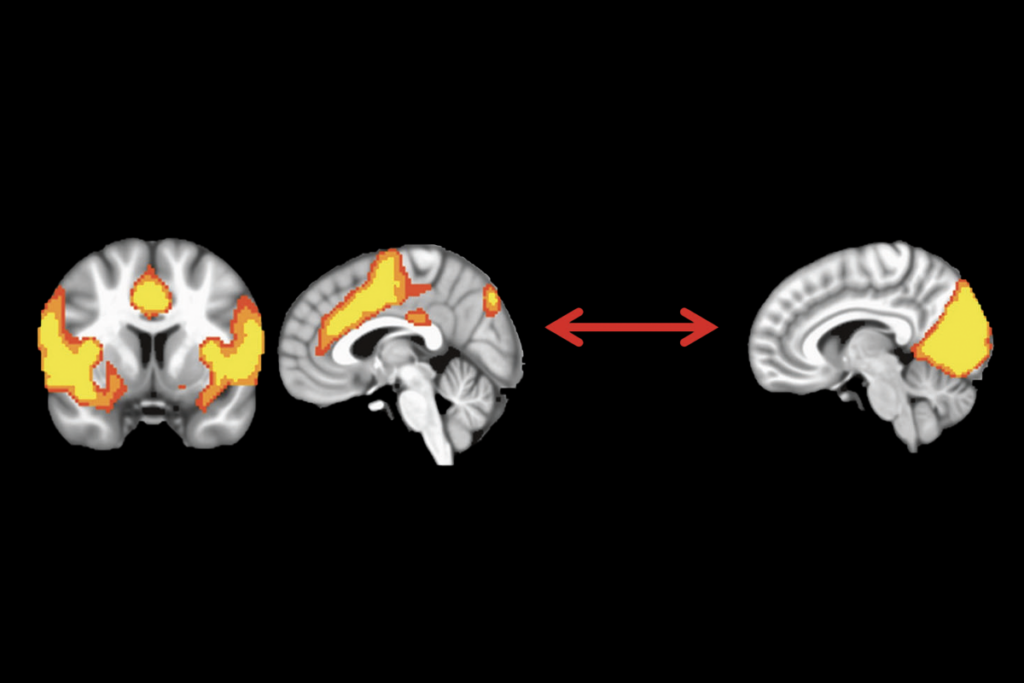

Social sections: Viewing an emotional scene between two characters in a movie lights up the social brain.

The results provide proof of principle that the techniques, in which study participants view movies of social situations or even interact with each other directly, activate social areas of the brain.

Most imaging studies — particularly those related to social behavior and autism — measure how the brain responds to still photographs of emotional expressions and scenes.

But in the real world, “No face appears in front of you for [half a second] and just disappears,” says Brian Russ, a postdoctoral fellow in the laboratory of David Leopold at the National Institute of Mental Health, who presented one of the posters.

The new methods are designed to mimic more closely the way real social interactions happen.

In one of the studies, researchers led by Randy Buckner at Harvard University performed functional magnetic resonance imaging (fMRI) on 16 people with autism and 30 healthy adults.

The researchers scanned the participants while they viewed brief movie clips featuring two people interacting with each other in an emotional situation, a person interacting with an object, or a moving object alone (such as a flying airplane).

They found that both groups show more activation in the temporoparietal junction, superior temporal sulcus and medial prefrontal cortex when viewing the clips of emotional scenes than when viewing the other clips.

“Regions that are involved in social function are in fact coming on line for the task,” says Marisa Hollinshead, research coordinator for the Buckner lab, who presented the poster.

The researchers have not yet compared imaging data from people with autism and controls.

Couch potatoes:

In the second study, Russ and his colleagues applied a similar method to rhesus macaques, scanning the animals while they viewed short movies of other macaques, other species (specifically, polar bears) or nature scenes that don’t include animals.

Although the researchers have only scanned three macaques so far, those monkeys have seen a total of 700 clips, each lasting five minutes.

The researchers found that movies of macaques or other animals activate areas involved in the recognition of faces. “I think it’s great,” says Hollinshead. “It’s a nice parallel for our work.”

Russ and his colleagues also collected data on gaze so that they can match the fMRI results with what the monkeys look at.

A potential problem with studies that rely on passive viewing is that, without a defined task to focus on, the participants might fidget as their minds wander — just as they do in the real world.

Studies over the past year have found that even small head movements inside a scanner can alter the results¹.

But the Buckner lab study found some evidence that people are in fact better able to keep still while they are viewing movies, a rare instance in which zoning out in front of a screen is beneficial. (As for the macaques, they get a sip of juice every few seconds as a reward for paying attention to the movie.)

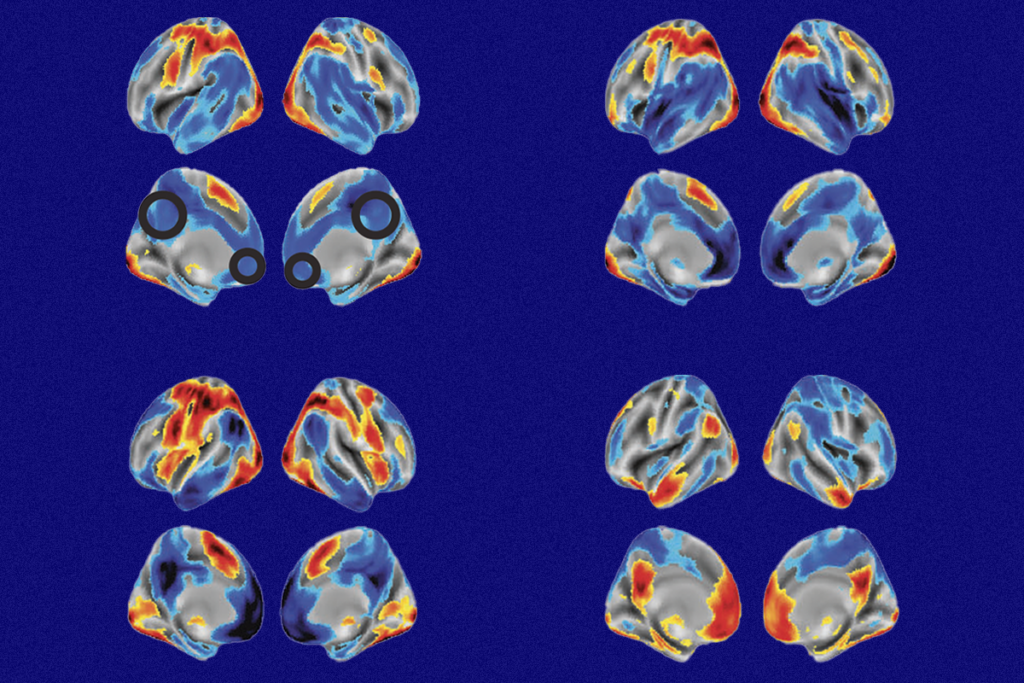

A third group of researchers, led by Andreas Meyer-Lindenberg at the Central Institute of Mental Health in Mannheim, Germany, presented data on an approach that moves another step closer to the real world: simultaneously scanning two healthy adults who are interacting through a video screen.

In that study, the researchers found that social brain areas such as the temporoparietal junction, medial prefrontal cortex and anterior cingulate cortex show more activation when the two people are working to accomplish a task that requires joint attention, or shared focus on a single object, than they do when the participants are working alone or just resting in the scanner.

Other researchers have performed fMRI scans of people engaged in joint attention with an experimenter², but this is the first study to have both participants inside MRI machines at the same time.

“The interesting thing is the coupling of the fMRI data,” says Edda Bilek, a graduate student in Meyer-Lindenberg’s laboratory.

Previous studies have suggested that activity in the social areas of the brain tends to align in two people working together, and the researchers confirmed this effect. Intriguingly, their data suggest that the strength of coupling depends on the personality traits of the participants and the size of their social networks.

Scanning two people at the same time introduces numerous complexities and possibilities for error, says Avram Holmes, a postdoctoral fellow in the Buckner lab.

“This must be a technical nightmare to pull all this together,” he says. “But it looks like they figured it out.”

For more reports from the 2012 Society for Neuroscience annual meeting, please click here.

References:

1: Van Dijk K.R. et al. Neuroimage 59, 431-438 (2012)

2: Redcay E. et al. Hum. Brain Mapp. Epub ahead of print (2012)
