Imaging of social brain enters real world

Studies of social-brain function are moving out of scanners and into realistic settings.

Lying alone in a scanner in a dark, quiet room could hardly be a more solitary activity. Yet many studies of the neural underpinnings of social behavior unfold in exactly that setting.

Physical isolation is a known limitation of functional magnetic resonance imaging (fMRI), which measures blood flow as a proxy for brain activity. Although the technique can reveal which brain areas become active during certain tasks, it usually requires that those tasks be performed alone.

Researchers interested in social-brain function often rely on computerized activities as a stand-in for actual interactions during fMRI studies. But pressing keys on a computer is quite different from exchanging glances with another person.

This contrast is especially relevant to autism research. Understanding the neural basis of social function is central to understanding autism. And the social difficulties that characterize autism, such as trouble maintaining eye contact and following subtle shifts in another person’s gaze, are hard to recapitulate with a computer program.

Moving brain imaging studies out of the lonely bore of an MRI machine into more naturalistic environments represents a much-needed next step for autism research. We call this new frontier ‘interactive social neuroscience,’ or ‘second-person neuroscience’ [1]. And although there are technical challenges, the prospect of visualizing the brain during social interactions is more real than ever.

Happy medium:

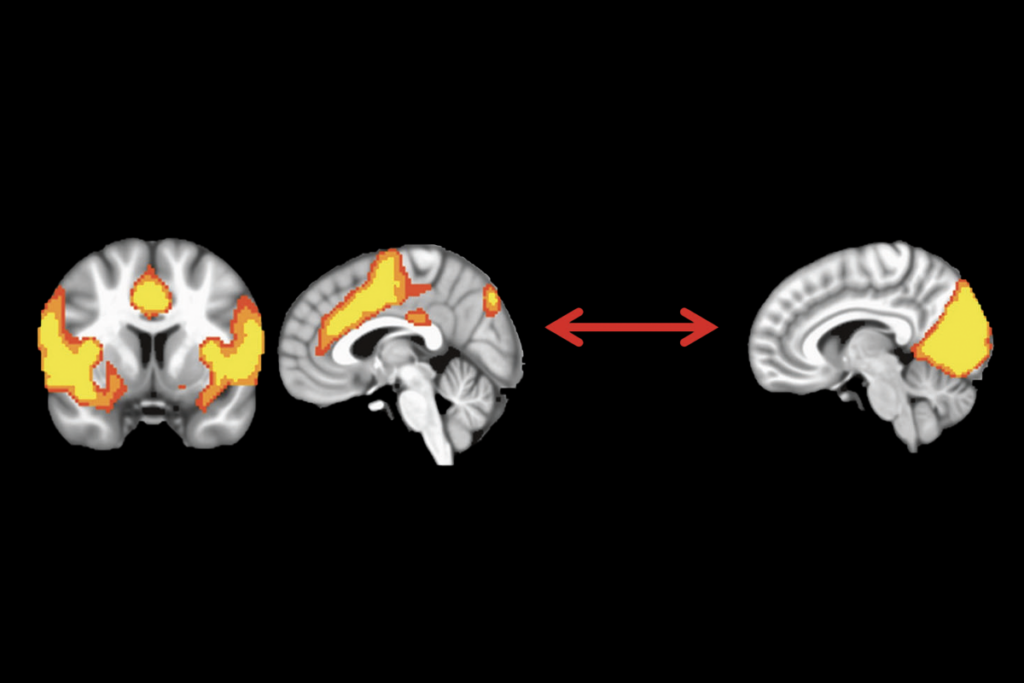

Researchers have come up with some clever workarounds for fMRI’s limitations. Elizabeth Redcay’s team at the University of Maryland has used closed-circuit video to enable virtual face-to-face interactions for individuals in a scanner [2]. The group has shown diminished activation relative to controls in key social brain regions, such as the posterior superior temporal sulcus, when individuals with autism try to follow the gaze of their video partner.

Another way of parsing brain activity is electroencephalography (EEG). This technique measures activity in neurons near the surface of the brain through electrical sensors on the scalp. Aside from being tethered to a computer by 128 wires, a participant is free to interact with another person. EEG equipment is also far less expensive to acquire and simpler to operate than an MRI machine.

But EEG has limitations, too. Although it provides detailed information about the timing of brain activity, it reveals much less about where in the brain the activity originates. And in order to link bursts of brain activity to certain behaviors, researchers must present experimental stimuli many times. This makes for a repetitive and unrealistic social experiment.
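The need for repetition comes from averaging: a single response is small relative to background activity, and averaging many trials shrinks the noise roughly in proportion to the square root of the number of trials. The following is a minimal simulation of that idea, not any lab's actual pipeline. The response shape, noise level and trial counts are all made-up values chosen for illustration.

```python
import math
import random
import statistics

def simulate_trial(rng, n_samples=200, noise_sd=5.0):
    """One simulated trial: a small evoked response buried in larger noise."""
    trial = []
    for i in range(n_samples):
        # Hypothetical response: a bump of height 1 centred on sample 100.
        signal = math.exp(-((i - 100) ** 2) / (2 * 10 ** 2))
        trial.append(signal + rng.gauss(0.0, noise_sd))
    return trial

def average_trials(trials):
    """Average across trials, sample by sample."""
    return [statistics.fmean(samples) for samples in zip(*trials)]

def residual_noise(average):
    """Spread of the averaged trace in the first 50 samples, far from the response."""
    return statistics.pstdev(average[:50])

rng = random.Random(0)  # fixed seed so the run is repeatable
noise_by_n = {}
for n_trials in (1, 16, 256):
    trials = [simulate_trial(rng) for _ in range(n_trials)]
    noise_by_n[n_trials] = residual_noise(average_trials(trials))
    print(n_trials, "trials -> residual noise", round(noise_by_n[n_trials], 2))
```

In this toy setup the response only stands out from the noise after a few hundred repetitions, which is why the resulting experiments feel so unlike a natural exchange.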

A third technique, called functional near-infrared spectroscopy (fNIRS), combines good spatial resolution with the flexibility of EEG. Like fMRI, fNIRS measures brain activity by quantifying the level of oxygenation and deoxygenation in blood in the brain. In this way, it provides information about where and to what extent metabolic activity is taking place. But the method of acquiring fNIRS data more closely resembles that of EEG, allowing a participant to move freely and engage with another person.

Facing reality:

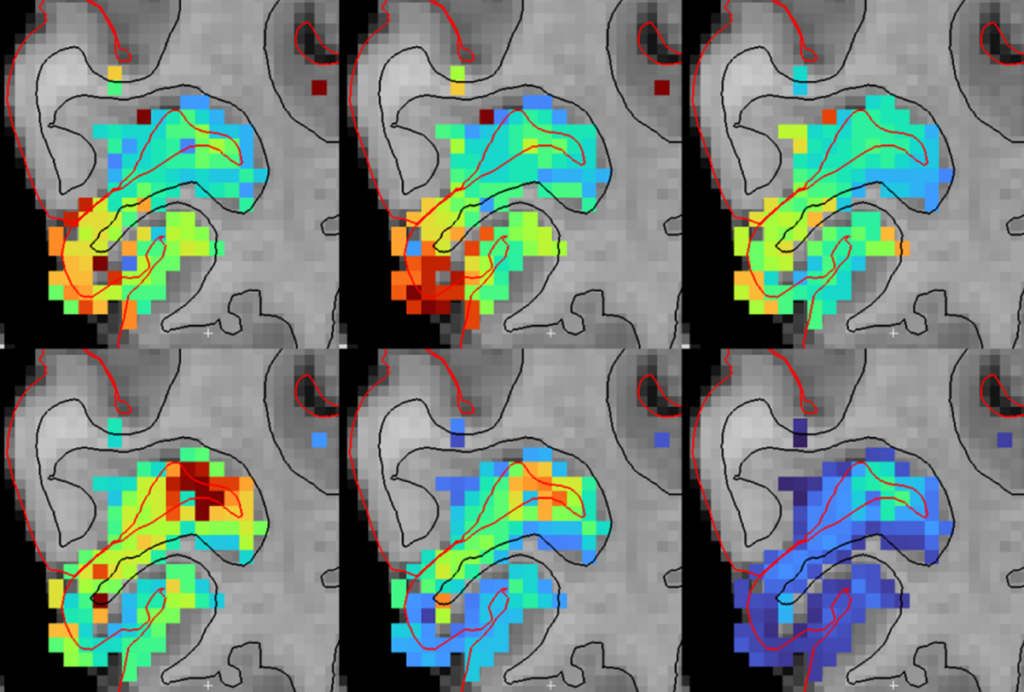

In fNIRS, an individual wears a cap containing ‘optodes,’ which emit and detect near-infrared light. The cap holds the optodes in a fixed position on the scalp such that the light passes through the skull, arcs through the brain’s surface, and hits a sensor on the way back out. By analyzing the returning wavelengths of light, researchers can glean information about the oxygenation of blood in the brain.
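A common way to turn those light measurements into oxygenation estimates is the modified Beer-Lambert law. The article does not say which analysis we apply, so the relation below is a general sketch rather than a description of our pipeline. The symbols are introduced here for illustration: $\Delta A$ is the change in light attenuation, $\varepsilon$ the extinction coefficient of each form of hemoglobin, $d$ the distance between emitter and detector, and $\mathrm{DPF}$ a factor that accounts for the light's curved path through tissue.

```latex
\Delta A(\lambda) =
  \bigl[\,\varepsilon_{\mathrm{HbO}}(\lambda)\,\Delta c_{\mathrm{HbO}}
        + \varepsilon_{\mathrm{HbR}}(\lambda)\,\Delta c_{\mathrm{HbR}}\,\bigr]
  \, d \,\mathrm{DPF}(\lambda)

% Measuring at two wavelengths gives two equations in two unknowns:
\begin{pmatrix} \Delta c_{\mathrm{HbO}} \\ \Delta c_{\mathrm{HbR}} \end{pmatrix}
=
\begin{pmatrix}
  \varepsilon_{\mathrm{HbO}}(\lambda_1) & \varepsilon_{\mathrm{HbR}}(\lambda_1) \\
  \varepsilon_{\mathrm{HbO}}(\lambda_2) & \varepsilon_{\mathrm{HbR}}(\lambda_2)
\end{pmatrix}^{-1}
\begin{pmatrix}
  \Delta A(\lambda_1) / \bigl(d\,\mathrm{DPF}(\lambda_1)\bigr) \\
  \Delta A(\lambda_2) / \bigl(d\,\mathrm{DPF}(\lambda_2)\bigr)
\end{pmatrix}
```

Because oxygenated and deoxygenated hemoglobin absorb the two wavelengths differently, the pair of measurements is enough to separate changes in one from changes in the other.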

By outfitting two people with fNIRS recording devices, researchers can simultaneously record activity in the brains of two interacting individuals. And by integrating specialized eye glasses that track point of gaze, they can simultaneously record eye-tracking data to provide rich information about social engagement during the pair’s interactions.

At Yale University’s Brain Function Laboratory, which one of us (Hirsch) directs, we have used this face-to-face, dual brain-recording approach to study typical social interactions. In one experiment designed to determine the neural basis of verbal interchange, participants took turns describing images to each other. In another experiment to pinpoint the brain regions involved in eye contact, the participants alternated between meeting each other’s gaze and focusing on a point on a wall.

Preliminary results show that both tasks engage a common circuit in the cerebral cortex (surface of the brain). This network of brain regions, which includes some involved in language, is active when participants communicate either verbally or through eye contact.

We are now using these interactive fNIRS methods to study adults with autism. By closely replicating social situations that individuals with autism find challenging, we hope to make strides in understanding social brain function — and how it goes awry.

This work is part of a broader goal of developing practical biomarkers to guide autism research. We plan to use the information we glean from fNIRS to more effectively use EEG, a relatively cheap and accessible technology, to develop clinical biomarkers that can be made widely available at minimal cost.

James McPartland is director of the Yale Developmental Disabilities Clinic. Joy Hirsch is director of Yale’s Brain Function Laboratory.
