Imaging interactions

A clever new method records brain activity during live, back-and-forth social interactions and could help scientists study joint attention, the act of looking at an object at the same time someone else does.

Functional magnetic resonance imaging (fMRI), which reveals the brain areas activated when a person carries out a task, is one of the most powerful tools in neuroscience.

But for measuring social behaviors, the set-up is less than ideal, to say the least: Participants must lie still in the dark, coffin-like machine for about an hour.

In the May issue of NeuroImage, researchers describe a clever method that can record brain activity during live, back-and-forth social interactions. The technique allows researchers to see the brain regions important for joint attention — the act of looking at an object at the same time someone else does.

Rebecca Saxe and her colleagues at the Massachusetts Institute of Technology rigged the fMRI machine with angled mirrors so that participants can watch a live projection of someone in another room. The participants are filmed at the same time so that the other person can see them, much like using a webcam.

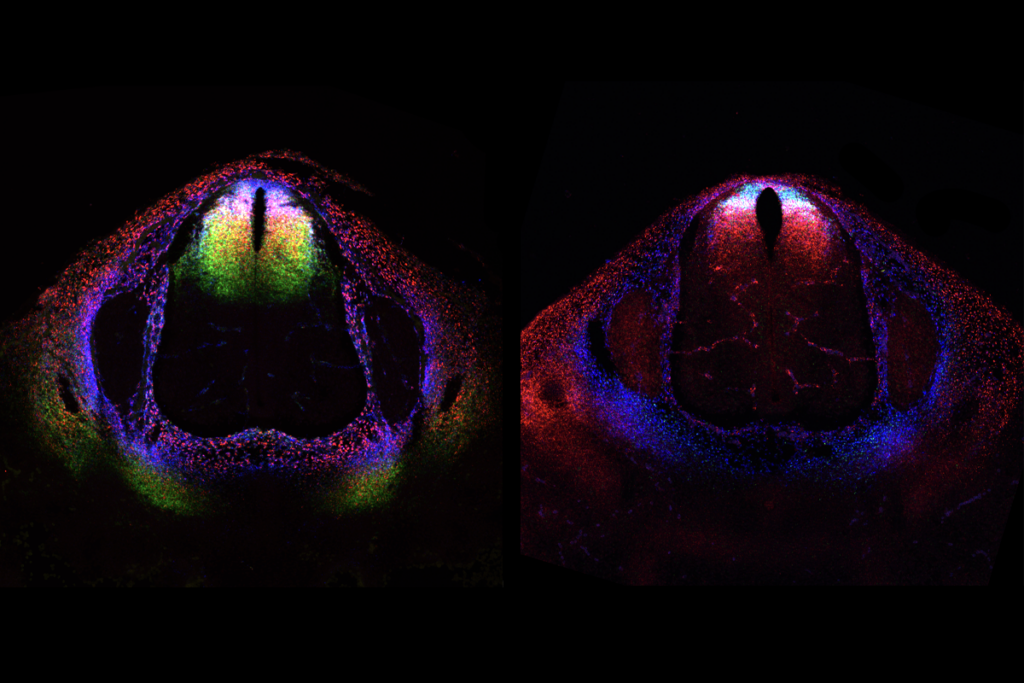

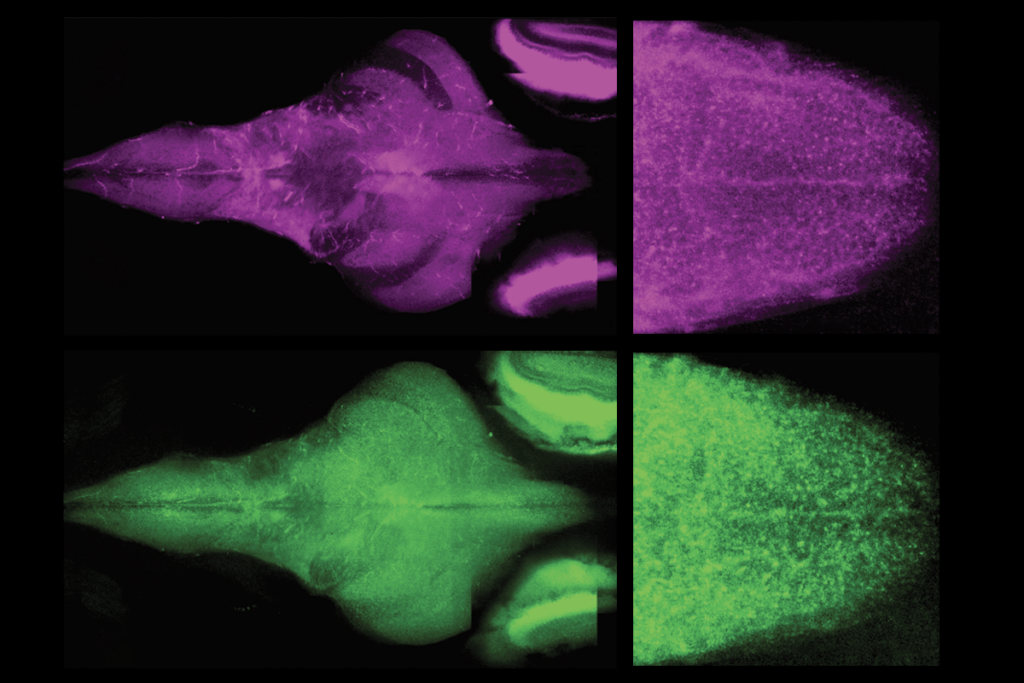

Using this method, Saxe’s group scanned the brains of healthy adults as they played two simple cooperative games. In the first, participants interact with a researcher via the monitors and are then shown either a 40-second feed of the live interaction or a 40-second tape of a previously recorded interaction. When shown the live version, participants show higher activity in several brain regions — such as the temporoparietal junction and superior temporal sulcus — that are known to be involved in social behaviors.

In the second experiment, participants play a game — “Catch the Mouse” — in which both players sometimes look at the same place to find where a mouse is hiding. As it turns out, the same two social-behavior brain regions light up more when participants share their attention with the other player than when they do not.

I visited Saxe’s lab a couple of years ago, when she was first setting up the live feed. She told me she was excited about the procedure because these live social behaviors — as well as the brain regions that they’re activating — have already been implicated in a slew of studies of people with autism. Now that she and her team have successfully used the method on healthy adults, they are testing it on adults diagnosed with autism. They expect to finish collecting data early this fall.
