Transparent reports

New standards for animal studies, including an emphasis on replicating results and the publication of negative findings, are vital for research progress, says Jacqueline Crawley.

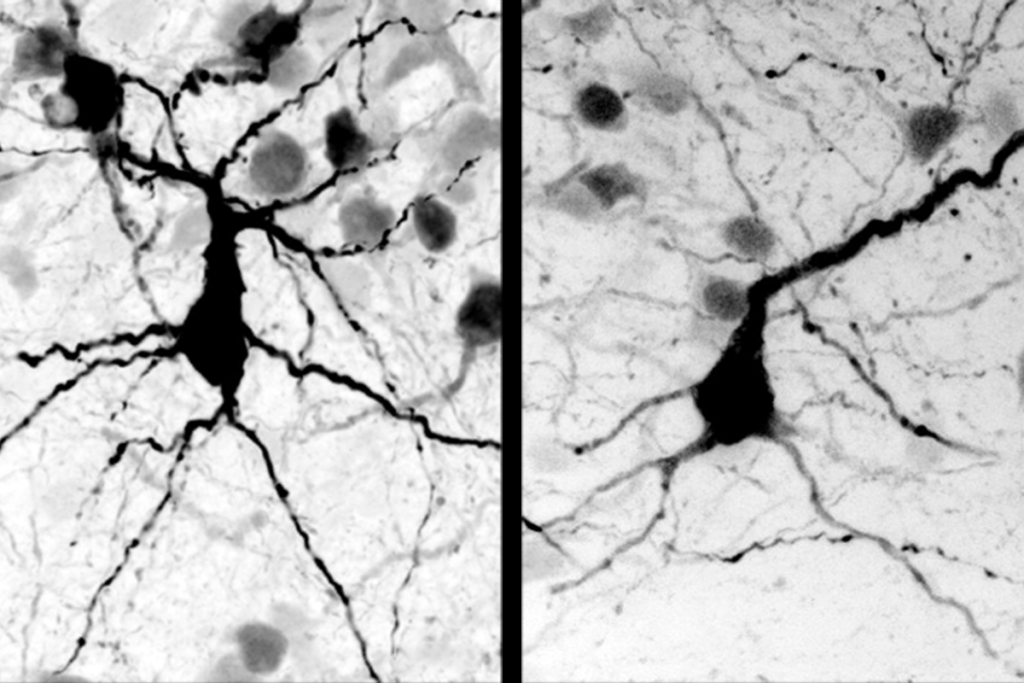

To move forward with drug development in autism, we need to know which of the many candidate genes are responsible for specific symptoms, and which are not. Animal studies that are well designed, executed, and interpreted contribute to answering these questions.

A perspective published 11 October in Nature calls for new reporting standards for the results of animal studies. Shai Silberberg and his many illustrious coauthors have done a great service to biomedical research.

Explaining the various ways in which experimental design can bias results, the authors, who include directors of the National Institutes of Health and editors-in-chief of high-profile journals, propose remedies for the many maladies afflicting the field. If they can enforce their proposed guidelines, the quality of research findings will improve significantly.

To illustrate the potential pitfalls of animal research, Silberberg, program director at the National Institute of Neurological Disorders and Stroke, and his coauthors outline a series of poorly designed studies in animal models of ischemic stroke. These studies constituted the rationale for several clinical trials that subsequently failed. The same unfortunate scenario could occur when developing therapeutics for autism.

The perspective stresses the critical need to include enough animals per group to avoid false positives and false negatives, and to use accepted statistical analyses. The authors also emphasize using true littermates as controls for targeted gene mutations, to prevent artifactual differences between separately bred lines that can arise from factors such as genetic drift, parental care or home-cage environment.
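To make the link between group size and error rates concrete, here is a minimal sketch of a standard power calculation for comparing two groups, such as mutant mice and their wild-type littermates. It assumes normally distributed outcomes and uses the normal approximation to the two-sample t-test; the function name `animals_per_group` and the effect sizes in the loop are illustrative assumptions, not values taken from the perspective.

```python
import math
from statistics import NormalDist


def animals_per_group(effect_size_d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate animals needed per group for a two-sided, two-group comparison.

    Hypothetical helper. Uses the normal approximation
    n = 2 * (z_{1-alpha/2} + z_{power})**2 / d**2,
    which slightly underestimates the exact t-test requirement at small n.
    """
    if effect_size_d <= 0:
        raise ValueError("effect size must be positive")
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # controls the false-positive rate
    z_power = z.inv_cdf(power)          # controls the false-negative rate
    n = 2 * (z_alpha + z_power) ** 2 / effect_size_d ** 2
    return math.ceil(n)


# Hypothetical standardized effect sizes, for illustration only
for d in (0.8, 0.5, 0.2):
    print(f"d = {d}: about {animals_per_group(d)} animals per group")
```

Under these assumptions the required group size scales with 1/d², so a subtle behavioral difference needs far more animals than a large one to be detected reliably. A power tool based on the t distribution would give slightly larger numbers than this approximation.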

They also point out that a statistically significant result is not necessarily robust or biologically meaningful, which “could account for the poor reproducibility of certain studies.” Focusing on the magnitude of an effect may be particularly relevant for autism, especially with regard to behavioral studies.

For example, abstracts of papers on mouse models of autism that over-interpret small decreases in one parameter of social interactions as an asocial phenotype, or over-interpret small elevations in open-field activity as hyperactivity, can seriously mislead the field.
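One widely used way to report magnitude alongside a p-value is a standardized effect size such as Cohen's d: the difference between group means divided by the pooled standard deviation. The sketch below illustrates that general idea; it is not a procedure prescribed by the perspective, and the function name and sample values are hypothetical.

```python
from statistics import mean, variance


def cohens_d(treated: list[float], control: list[float]) -> float:
    """Standardized mean difference using the pooled sample standard deviation (hypothetical helper)."""
    n1, n2 = len(treated), len(control)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two observations")
    # Pool the two sample variances, weighting each by its degrees of freedom
    pooled_var = ((n1 - 1) * variance(treated) + (n2 - 1) * variance(control)) / (n1 + n2 - 2)
    if pooled_var == 0:
        raise ValueError("effect size is undefined when both groups have zero variance")
    return (mean(treated) - mean(control)) / pooled_var ** 0.5


# Hypothetical social-sniffing times (seconds), for illustration only
mutant = [1.0, 3.0, 5.0]
wild_type = [2.0, 4.0, 6.0]
print(round(cohens_d(mutant, wild_type), 2))  # -0.5
```

By Cohen's conventional benchmarks, a d near 0.2 is small and one near 0.8 is large. Reporting d next to the p-value lets readers judge whether a "significant" drop in one social measure is large enough to justify calling a model asocial.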

Insufficient reporting of methods (such as the approach used to blind researchers to which animals received a treatment) and a lack of replication in a second, independent set of animals could underlie spurious findings, which in turn skew meta-analyses. The authors recommend that methods be described fully in publications so that other laboratories can replicate them accurately.

Publishing both replications and failures to replicate striking results would particularly benefit the rapidly expanding autism literature. But to accomplish this, investigators must be given incentives to publish their negative results. Especially in autism research, where the abundance of implicated genes calls for stratification, figuring out which genes do not contribute to individual symptoms is as valuable as discovering which genes do.

Jacqueline Crawley is professor of psychiatry and behavioral sciences at the MIND Institute at the University of California, Davis.

Recommended reading

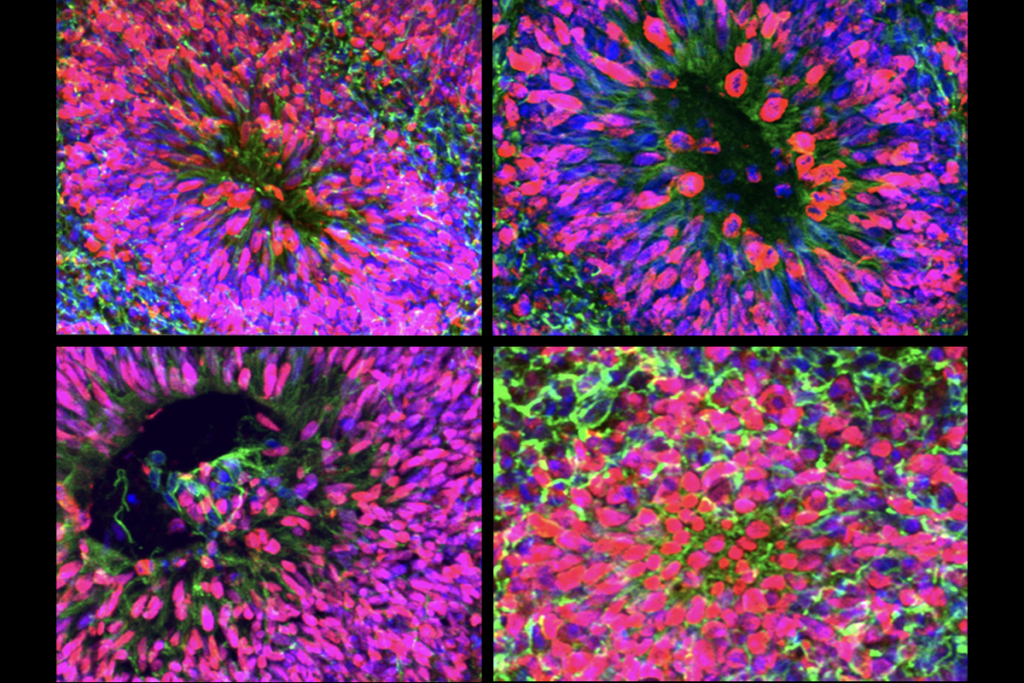

New organoid atlas unveils four neurodevelopmental signatures

Explore more from The Transmitter

Snoozing dragons stir up ancient evidence of sleep’s dual nature