Negative results

A number of studies have found no connectivity differences between people with autism and controls, but few have been published so far, says Dan Kennedy.

Last year at the International Meeting for Autism Research, my colleagues and I presented a poster describing the results of a resting-state functional connectivity study of adults with autism.

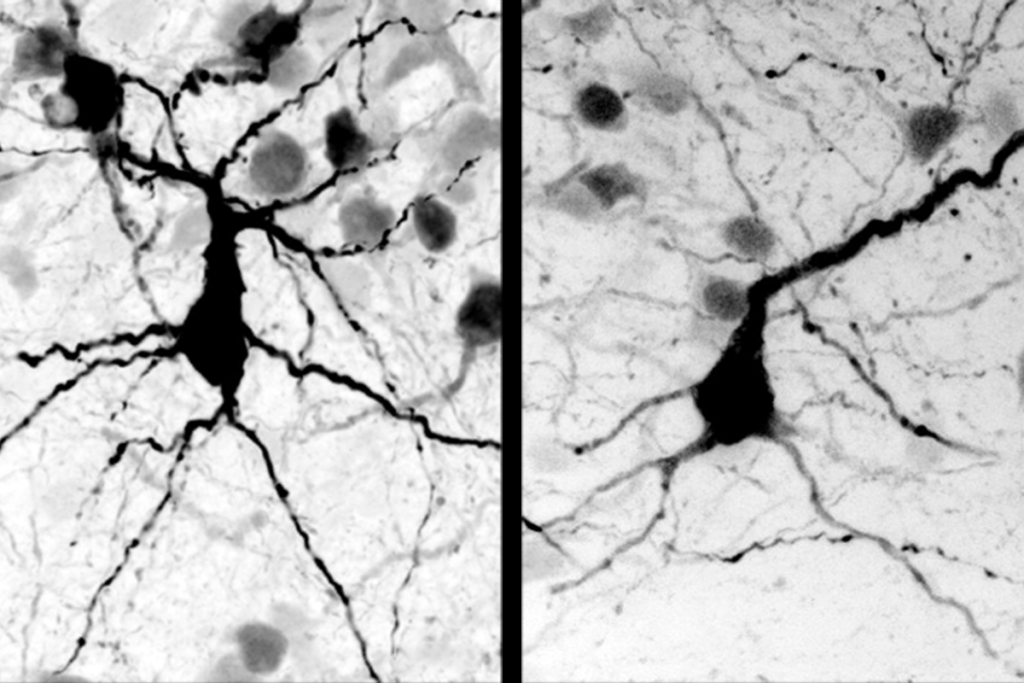

This approach examines functional interactions between brain regions by looking at their spontaneous co-activation while participants simply rest inside a magnetic resonance imaging scanner with no explicit task instructions.

Based on the large number of published studies on this topic, we expected to find less coupling between brain regions in the autism group. To our surprise, however, the strength of functional connectivity was quite similar in the two groups, no matter how we carved up the brain or analyzed the data.

Sure, there were hints at some possible group differences between specific brain regions, but these could largely be expected on the basis of chance alone. In our opinion, the notable finding was the striking degree of similarity between the groups.
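To illustrate why a few apparent differences can be expected by chance alone, here is a minimal sketch of the arithmetic. The number of regions (100) and the uncorrected threshold (0.05) are illustrative assumptions, not values from the study described here, and the function names are hypothetical.

```python
from math import comb


def expected_false_positives(n_regions: int, alpha: float = 0.05) -> float:
    """Expected number of region pairs passing an uncorrected threshold
    when there is no true group difference for any pair."""
    n_pairs = comb(n_regions, 2)  # every unordered pair of regions is one test
    return n_pairs * alpha


def bonferroni_threshold(n_regions: int, alpha: float = 0.05) -> float:
    """Per-test threshold that keeps the family-wise error rate at alpha."""
    return alpha / comb(n_regions, 2)


if __name__ == "__main__":
    n_regions = 100  # assumed parcellation size, for illustration only
    print("region pairs tested:", comb(n_regions, 2))
    print("expected chance 'differences':", expected_false_positives(n_regions))
    print("Bonferroni per-test threshold:", bonferroni_threshold(n_regions))
```

Under these assumptions, thousands of pairwise comparisons would yield hundreds of nominally significant differences even if the two groups were identical, which is why scattered hits between specific regions carry little weight on their own.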

It turns out that we are not alone.

During the four-hour poster session when I presented the findings, three or four scientists approached me, all from different research groups, stating that they had reached the same conclusion. They also had collected resting-state data from individuals with autism, and had been unable to find the often-reported group differences.

With so many groups finding this same result, a critical question becomes apparent: Why haven’t these results been published?

I can think of at least two distinct possibilities, both of which ultimately result in a publication bias.

First, a lack of a group difference isn’t always the most exciting or interesting finding, even though positive and negative results can be equally informative. Identifying differences between groups can clearly help narrow down possible causes and mechanisms underlying these differences, but so can findings of how groups are similar. In the end, positive and negative results are both necessary for developing and refining theories.

Second, it’s always worrisome when one can’t reproduce a commonly reported finding. It’s easy for researchers to dismiss their own results, imagining, perhaps, that the sample size was too small or that participants were older or younger or higher- or lower-functioning than those included in other studies. Or perhaps errors in data analysis can account for this result?

This last question is particularly unfair when it is asked only of negative results: the same level of methodological rigor and skepticism should be applied to studies that replicate existing results.

Once this internal battle is fought and scientists decide to submit their work for publication, they face an uphill battle with editors who must ask themselves whether the results are sufficiently interesting, and with reviewers who are likely to more heavily scrutinize papers that challenge conventional wisdom.

So, at all stages in the communication of scientific results, the likelihood of publishing negative findings such as ours diminishes. The resulting publication bias ultimately creates a misleading representation of the data.

When other researchers conduct reviews, surveys and meta-analyses of published data, whole categories of possible results are likely underrepresented or entirely unrepresented.
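The distortion can be sketched with a small simulation. Everything here is an assumption made for illustration: the true group difference is set to zero, each study's observed effect is drawn from a normal approximation, and a hypothetical selection rule publishes only studies that find significantly reduced connectivity. It is not a model of any real literature.

```python
import random
from statistics import mean


def simulate_literature(true_effect=0.0, n_per_group=20, n_studies=2000,
                        z_crit=1.96, seed=0):
    """Return (all observed effects, published effects) for simulated studies."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    # Approximate standard error of a standardized mean difference
    se = (2.0 / n_per_group) ** 0.5
    all_effects, published = [], []
    for _ in range(n_studies):
        observed = rng.gauss(true_effect, se)
        all_effects.append(observed)
        # Hypothetical selection rule: only a significant reduction is published
        if observed / se < -z_crit:
            published.append(observed)
    return all_effects, published


if __name__ == "__main__":
    all_effects, published = simulate_literature()
    print("studies run:", len(all_effects))
    print("studies published:", len(published))
    print("mean effect, all studies:", round(mean(all_effects), 3))
    print("mean effect, published only:", round(mean(published), 3))
```

In this sketch, a meta-analysis that sees only the published subset would estimate a sizable reduction in connectivity even though the simulated true difference is zero, because the null results never leave the file drawer.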

To counteract this problem, I would make two suggestions.

First, I would urge all researchers to attempt to publish any methodologically rigorous study, regardless of whether the result supports one’s initial hypothesis or replicates well-known findings in the literature.

Second, I would suggest that as authors and reviewers of others’ work, we hold all papers to an equal and high standard, recognizing that all results (if they follow from careful analysis) are important.

I hope this commentary stimulates further discussion within our own research labs. And, ultimately, I hope each of us might look back into our file drawers and hard drives and take the time to share with others what our data have been telling us. Who knows what we might collectively discover?

Dan Kennedy is assistant professor of psychological and brain sciences at Indiana University in Bloomington.

Recommended reading

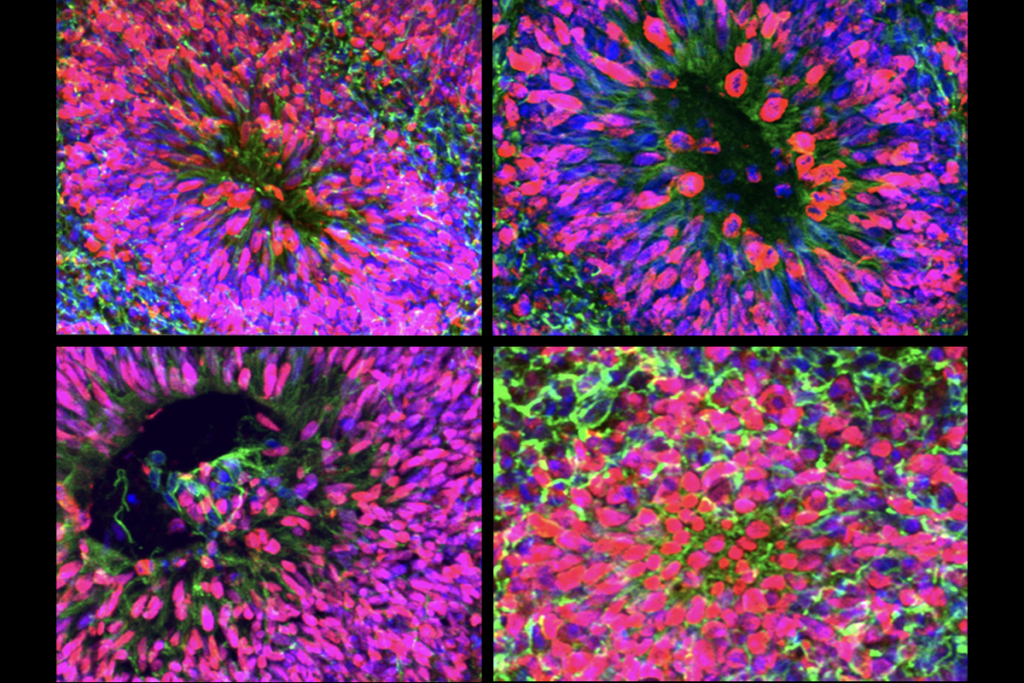

New organoid atlas unveils four neurodevelopmental signatures

Explore more from The Transmitter

Snoozing dragons stir up ancient evidence of sleep’s dual nature