A cautionary tale for autism drug development

Poorly designed animal drug studies for motor disorders have led to spurious conclusions for the clinical trials that follow. This may be even more true for autism research, says Michael Ehlers.

Steve Perrin provides a thoughtful critique of the preclinical animal models used to test drugs for human disease, and a powerful proposal for how to improve them, in his 27 March comment in Nature. Perrin argues that poorly designed animal drug studies lead to spurious conclusions that form the basis for costly, and ultimately negative, clinical trials. This may be even more true for autism research.

Perrin is the chief scientific officer at the ALS Therapy Development Institute (ALSTDI) in Cambridge, Massachusetts, a scientific organization dedicated to identifying and advancing therapeutic development for amyotrophic lateral sclerosis (ALS). That context is important, as the broader biomedical research community struggles with the swirling incentives of publication, funding, the maximization of shareholder value and bias toward positive results that collectively drive academic research, disease-oriented foundations and biopharmaceutical companies.

Perhaps being diplomatic, he sidesteps questions of how incentives shape the contours of experimental design and data dissemination, although I will take a stab at this below. But before then, let us take a close look at the case he laid out.

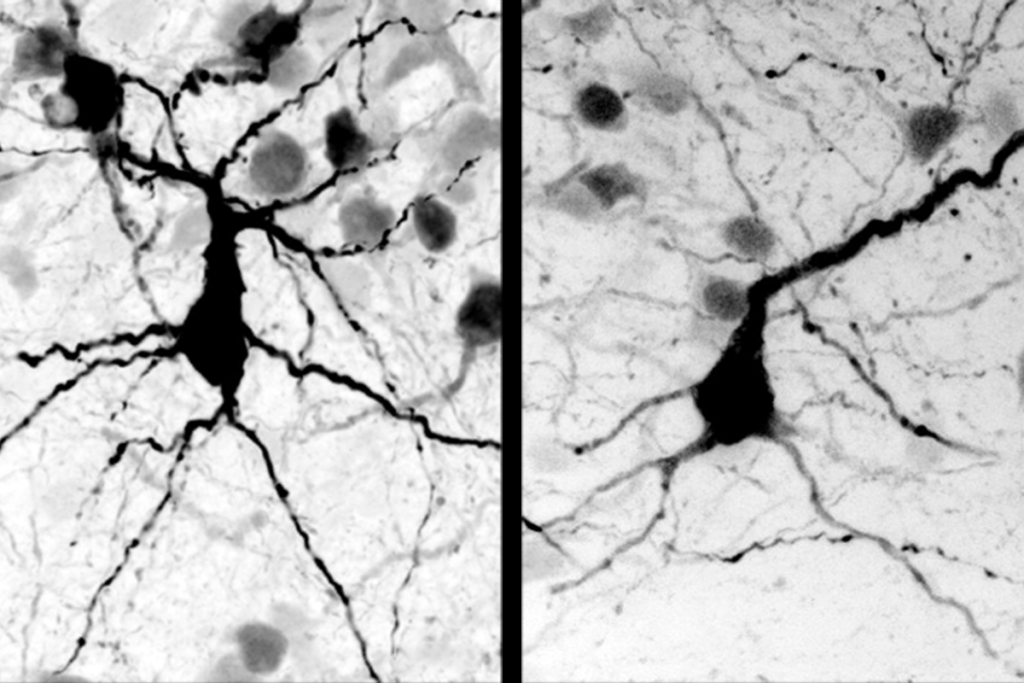

His article focuses on drug studies conducted on mouse models of ALS, a progressive neurodegenerative disorder of the upper motor neurons, which carry signals from the motor cortex to the brainstem and spinal cord, and the lower motor neurons, which relay those signals to the muscles to direct voluntary movement. The clinical endpoints of these studies are quite quantitative: namely, preserving or regaining motor function.

Compare this with those neuroscience disorders for which the relevant measurements are mood, social behaviors and cognition.

Given that difference — and an emerging set of strongly causal genetic mutations for ALS — one might anticipate a harder, more robust, more translatable set of rodent models amenable to predictive drug studies for ALS than for other disorders. Well, think again.

Experimental noise:

As Perrin documents, his institute could not reproduce study after study using specific ALS mouse models with a variety of drugs, all reporting impressive improvements. The drugs all notably failed to demonstrate benefit in clinical trials. A skeptic might wonder — well, are those scientists at the institute missing key procedural details? Are they doing the studies correctly?

Perrin attributes the discrepancy, in general terms, to experimental ‘noise’ and lays out a set of recommendations on the design of animal studies to avoid spurious conclusions. These include excluding irrelevant animals, balancing for gender, splitting littermates among experimental groups and tracking genes across generations.
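To make two of these recommendations concrete, here is a minimal sketch of how a lab might split littermates among experimental groups while balancing the sexes. It is an illustration only, not Perrin's or the institute's procedure: the function name `assign_groups`, the record fields (`id`, `litter`, `sex`) and the small cohort are all hypothetical.

```python
import random
from collections import defaultdict

def assign_groups(animals, groups=("vehicle", "drug"), seed=0):
    """Randomly assign animals to groups within each (litter, sex) stratum.

    Hypothetical helper: shuffling inside each stratum and then dealing
    animals out in turn keeps littermates of the same sex spread as
    evenly as possible across the groups.
    """
    rng = random.Random(seed)  # fixed seed so the allocation can be audited
    strata = defaultdict(list)
    for animal in animals:
        strata[(animal["litter"], animal["sex"])].append(animal["id"])

    assignment = {}
    for key in sorted(strata):
        ids = strata[key]
        rng.shuffle(ids)
        # A random starting group avoids always favoring the first group
        # when a stratum has an odd number of animals.
        offset = rng.randrange(len(groups))
        for i, animal_id in enumerate(ids):
            assignment[animal_id] = groups[(i + offset) % len(groups)]
    return assignment

# Made-up cohort: two litters, two males and two females in each.
cohort = [
    {"id": f"{litter}-{sex}{n}", "litter": litter, "sex": sex}
    for litter in ("A", "B")
    for sex in ("F", "M")
    for n in (1, 2)
]
allocation = assign_groups(cohort)
```

In this toy cohort every litter-and-sex pair ends up with one animal per group, so neither litter nor sex can masquerade as a drug effect.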

To this I would add that researchers need to statistically model anticipated drug effects in order to adequately power preclinical studies. They also need to ensure that all preclinical studies are blinded and conducted using pre-specified endpoints.
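As a rough illustration of what powering a study involves, the sketch below estimates the number of animals needed per group for a simple two-group comparison of means, using the standard normal approximation. The function name `animals_per_group`, the default significance level and power, and the example effect sizes are assumptions chosen for illustration; a real design would rest on pilot data and an exact t-based calculation, which gives slightly larger numbers.

```python
import math
from statistics import NormalDist

def animals_per_group(effect_size, alpha=0.05, power=0.8):
    """Approximate animals per group for a two-sided, two-group comparison.

    effect_size is the anticipated difference in means divided by the
    standard deviation (Cohen's d). Uses the normal approximation
    n = 2 * ((z_(1 - alpha/2) + z_power) / d) ** 2, rounded up.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)
    z_power = std_normal.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)

# Illustrative effect sizes only, not values from any study.
for d in (0.5, 1.0, 1.5):
    print(f"d = {d}: about {animals_per_group(d)} animals per group")
```

Under these assumptions, a drug expected to shift the endpoint by one standard deviation needs about 16 animals per group, whereas half that effect needs about 63, which is why modest anticipated effects so quickly outgrow the typical laboratory cohort.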

The good news is that experimental elements designed to reduce noise, such as statistical powering, blinded conduct of experiments and re-genotyping, are increasingly routine across preclinical studies in industry. Yet these common-sense approaches are slow to infiltrate typical academic studies.

Perrin highlights the significant cost and time associated with appropriate design, such as maintaining large animal colonies, that can be beyond the usual laboratory study. Yet these costs are dwarfed by the resources flushed down the toilet when weak preclinical studies lead to much more costly clinical trials.

It is hard to disagree with Perrin’s recommendations. So why do suboptimal practices and experimental design persist? And why isn’t there a better system for correcting or updating the literature? Here is where we come to questions of incentive.

The academic community is the major source of new, innovative science, but it needs to take a hard look at both the positive and negative incentives that drive data reporting (or the absence thereof). From papers to grants to jobs to promotions to awards, significant positive incentive exists to, well, have a positive result.

On the other hand, very little negative incentive exists to prevent the suboptimally designed study from being published in top journals. Moving to a more rigorous translational ethos for preclinical drug testing will require the practical implementation of experimental quality control guidelines that are demanded by reviewers and editors. But it will also require a cultural shift away from an emphasis on novelty and hints of promise (and future funding) to an emphasis on robustness and reproducibility.

What is the impact on autism research? If anything, adequately powered, properly controlled, rigorously evaluated preclinical studies on rodent models of autism are even more important, given the greater complexity of rodent phenotypes currently being ascertained and considered relevant for the core features of autism.

If motor endpoints across genotypes, genders, litters, gene dosage and drug doses vary in ALS, I suspect there is even greater variability in assessments of social behaviors. Certainly, autism is more genetically and behaviorally heterogeneous than ALS.

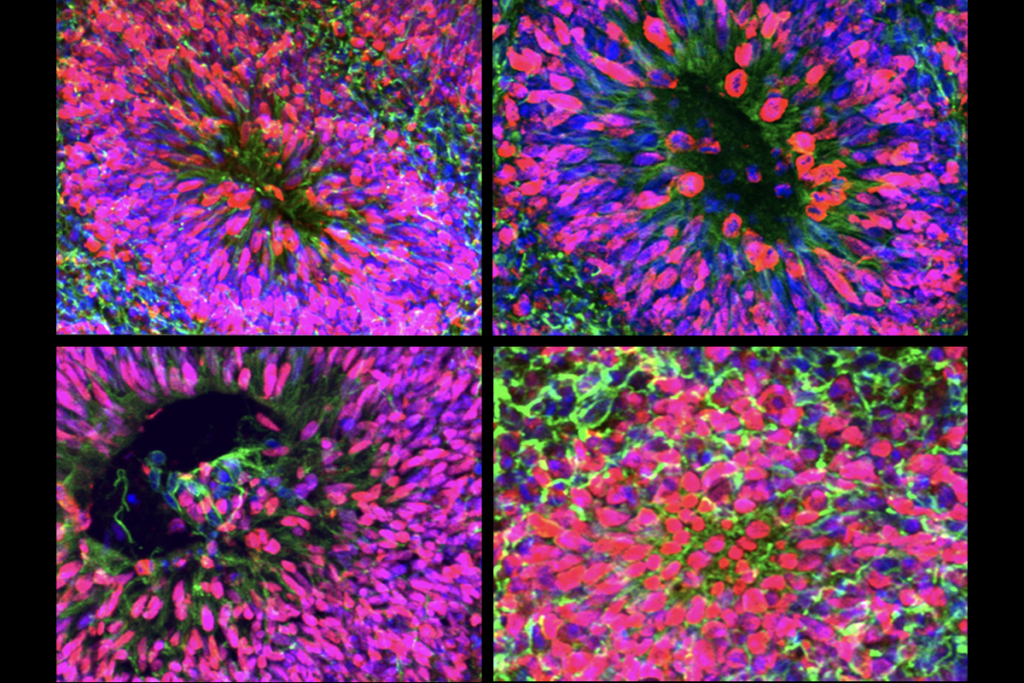

Indeed, given the challenges in the proper design and interpretation of rodent drug testing studies generally, and the more distant translational connectivity between genetics and observable features in autism between man and mouse, a strong case can be made to bypass rodent models and move quickly to focused human models such as induced pluripotent stem cells or specific focused clinical populations.

The cautionary tale of drug testing in ALS models forces us to reconsider how we evaluate and respond to the multiplying number of drug studies on mouse models of autism.

Michael Ehlers is senior vice president and chief scientific officer for neuroscience at Pfizer in Cambridge, Massachusetts.
