Device predicts future word use in toddlers with autism

An automated analysis of speech-like sounds from 3-year-olds with autism predicts their word use four months later, according to unpublished research presented yesterday at the 2015 International Meeting for Autism Research in Salt Lake City, Utah. The findings could help researchers better understand verbal development in children with autism.

Children who are learning to speak practice by producing consonants, vowels and other single-syllable sounds. Previous studies have found that toddlers with autism who produce more of these speech-like vocalizations have better language skills later on [1, 2]. But tracking these sounds can be tedious, requiring researchers to listen to and analyze audio recordings or home videos.

The automated system used in the new study tracks vocalizations and predicts later word use just as well as manual methods, but more quickly and efficiently.

“This more cost-effective and time-efficient method may make it possible for clinicians to measure vocal development,” says lead investigator Tiffany Woynaroski, research assistant professor of hearing and speech sciences at Vanderbilt University in Nashville, Tennessee.

Woynaroski and her colleagues used a small wearable recording device called the LENA system to collect two daylong audio recordings of 33 preschoolers with autism. The children range in age from 2 to 4 years and have an average intelligence quotient (IQ) of 50. About two-thirds of them are minimally verbal, according to parent reports.

Software connected to the LENA device, which is made by the Colorado-based LENA Foundation, can automatically analyze the recordings to quantify vocalizations, such as syllables, squeals and grunts. The software then generates a score based on the child’s speech-like sounds, with higher scores indicating more advanced abilities.

The researchers also used traditional methods to track vocalizations, manually assigning each child a score after recording the sounds they produced during two 15-minute play sessions. Four months later, they asked parents about their children’s vocabularies.

Overall, children with higher scores on the manual measure used more words four months later, the researchers found. Scores from the automated system showed a similar trend. When the researchers limited their analysis to children who are minimally verbal, the automated system predicted future word use just as well as the manual one.

Woynaroski admits that four months is a relatively short time for children to learn new words, and suggests that future studies examine vocal development over longer intervals. She notes that the software her team used to analyze the vocalizations is not yet publicly available.

References:

1. Plumb A.M. and A.M. Wetherby J. Speech Lang. Hear. Res. 56, 721-734 (2013)

2. Sheinkopf S.J. et al. J. Autism Dev. Disord. 30, 345-354 (2000)

Recommended reading

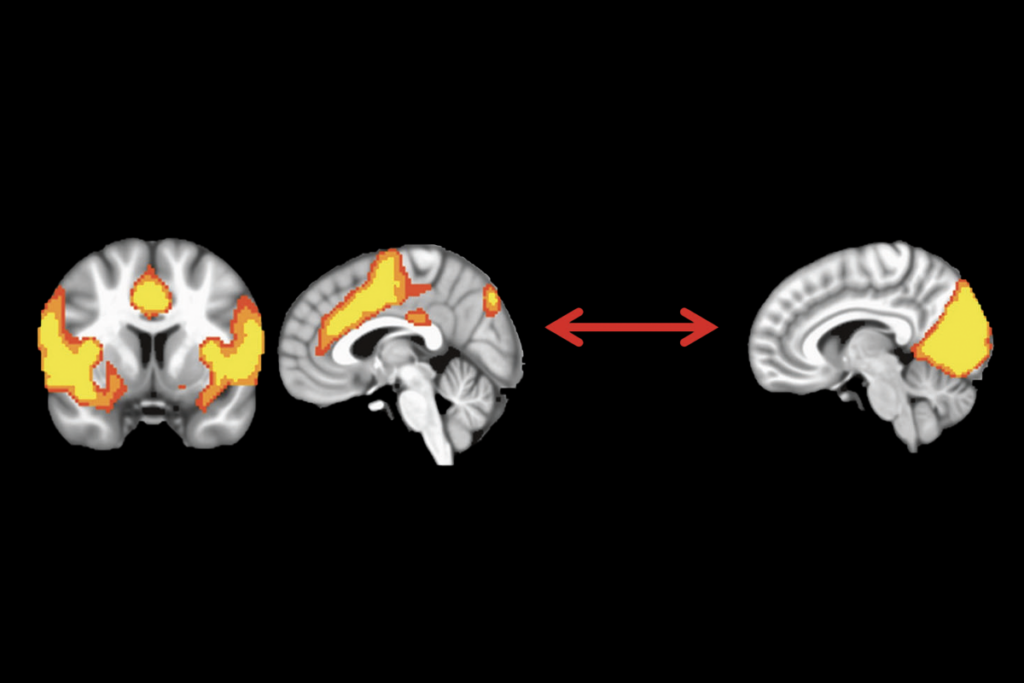

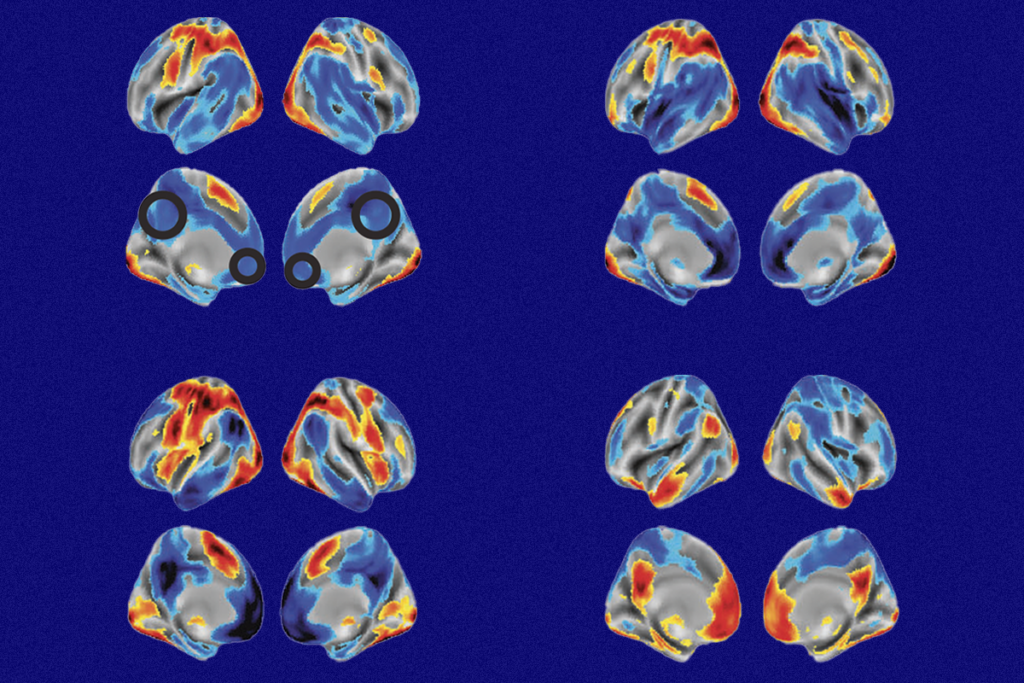

Too much or too little brain synchrony may underlie autism subtypes

Developmental delay patterns differ with diagnosis; and more

Split gene therapy delivers promise in mice modeling Dravet syndrome

Explore more from The Transmitter

During decision-making, brain shows multiple distinct subtypes of activity

Basic pain research ‘is not working’: Q&A with Steven Prescott and Stéphanie Ratté