Registered reports

The more researchers poke around, the more likely they are to find a significant effect — and the more likely that the effect they end up reporting is just a fluke. A new kind of journal article, the ‘registered report,’ may address this problem, says Jon Brock.

These are fast-moving times for autism research. Every week brings a new swath of research findings that promise fresh insights into the causes of autism, its diagnosis and treatment. Yet, beneath the flurry of publications, the reality is that progress has been painstakingly slow.

One reason, unfortunately, is that many published studies contain results that turn out not to be true. This isn’t because scientists are lying or fabricating their data. It is a consequence of the way science is done and the pressures on researchers to produce results.

The scientific approach is meant to guard against erroneous findings. Statistical analyses are used to indicate how likely it would be to get similar results just by chance. By convention, a result is considered statistically significant only if results at least that strong would turn up less than five percent of the time by chance alone, that is, when there is no real effect. So, naively, we might expect 95 percent of published research findings to be true.

The problem is that, as researchers, we are under pressure to find and report significant results. It’s much harder to get results published — particularly in high-profile journals — if they’re not statistically significant. And often, we have a vested interest in the hypothesis we’re testing. We’re testing our own ideas and, naturally, we want our ideas to be right.

We also have a lot of freedom in the analyses we choose to conduct and the outcomes we choose to report. If the data don’t quite come out as significant, we can always try a slightly different analysis, collect a few more data points, or find a reason to throw out data that don’t fit the general pattern. And we can keep going until we get a statistically significant result.

The more we poke around, the more likely we are to find a significant effect — and the more likely that the effect we end up reporting is just a fluke.
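To make the point concrete, here is a minimal simulation sketch of one kind of poking around: collecting a few more data points whenever the result is not yet significant. Everything in it is an illustrative assumption rather than a description of any real study: the data are pure noise with a known standard deviation of 1, the test is a simple z-test, and the sample sizes and number of extra looks are arbitrary.

```python
import random
from math import sqrt

Z_CRIT = 1.96  # two-sided cutoff for p < 0.05 under a normal approximation


def is_significant(sample):
    """z-test of 'mean equals 0', assuming the true standard deviation is 1."""
    n = len(sample)
    z = (sum(sample) / n) * sqrt(n)
    return abs(z) > Z_CRIT


def false_positive_rate(n_studies, start_n, extra_looks, step, rng):
    """Share of no-effect studies that reach significance at any look."""
    hits = 0
    for _ in range(n_studies):
        # Every study samples pure noise, so any 'finding' is a fluke.
        sample = [rng.gauss(0.0, 1.0) for _ in range(start_n)]
        found = is_significant(sample)
        looks = 0
        # Keep adding data and re-testing until significant or out of looks.
        while not found and looks < extra_looks:
            sample.extend(rng.gauss(0.0, 1.0) for _ in range(step))
            found = is_significant(sample)
            looks += 1
        hits += found
    return hits / n_studies


rng = random.Random(0)  # fixed seed so the run is repeatable
fixed_rate = false_positive_rate(5000, 20, 0, 10, rng)
peeking_rate = false_positive_rate(5000, 20, 5, 10, rng)
print(f"one planned analysis:    {fixed_rate:.3f}")
print(f"up to five extra looks:  {peeking_rate:.3f}")
```

With a single planned analysis, the fluke rate should sit close to the nominal five percent. Allowing repeated looks at the same growing data set pushes it well above that, even though no individual test has changed.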

Research plans

Awareness of this problem is growing. Last week, in a letter to the British newspaper The Guardian, U.K. neuroscientists Chris Chambers and Marcus Munafò called for the widespread introduction of a new form of journal article, the ‘registered report,’ which they hope will address the issue.

The journal Cortex, where Chambers is an associate editor, is already trying out registered reports. Before they even begin to collect their data, researchers have to submit a proposal to the journal, detailing exactly what they are going to do. This includes the analyses they plan to conduct, as well as the methods they will use to collect the data.

Once the data are in, the paper is accepted based not on the results but on whether the researchers did exactly what they said they would.

There’s no room for fudging. Whatever the results turn out to be (significant or otherwise), we will know exactly how much we can trust them.

A similar approach is already standard practice in medical research. Before testing a new drug in a clinical trial, researchers have to state exactly what outcomes they are looking for.

However, trials of other forms of treatment or intervention often go unregistered. Researchers don’t want to commit to a single outcome measure, presumably because they’re not sure from the outset what the best measure might be. But if they’re allowed to pick and choose their outcome measures after the event, there’s a good chance of there being at least one measure that appears to support the treatment — even if the treatment is completely ineffective.
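The arithmetic behind that "good chance" can be sketched in a few lines. This assumes, purely for illustration, that the outcome measures are independent of one another and that each is tested at the conventional five percent threshold; the function name is made up for this example. Real outcome measures are usually correlated, which would make the true figure somewhat lower.

```python
def chance_of_false_positive(n_measures, alpha=0.05):
    """Probability that at least one of n independent measures comes out
    significant by chance when the treatment has no effect at all."""
    return 1 - (1 - alpha) ** n_measures


for k in (1, 5, 10, 20):
    print(f"{k:>2} measures: {chance_of_false_positive(k):.0%}")
```

Under these assumptions, an ineffective treatment has about a 23 percent chance of looking good on at least one of five measures, about 40 percent with ten measures and about 64 percent with twenty.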

The proposal for registered reports is a recognition that we need to apply the same standards to all research, not just clinical trials.

Of course, it’s often the case that the most interesting findings are the ones we least expect. Many important scientific findings were discovered by accident. And there’s nothing intrinsically wrong with reporting findings that weren’t predicted.

Still, we should always be skeptical about findings that weren’t predicted. And of course, if the effects are real, then further studies should replicate the results.

Registered reports should be seen as the gold standard for research. They’re not the solution to all of the challenges facing autism research — or science in general. But they may be a way of identifying those findings in which we can have a high degree of confidence.

Jon Brock is a research fellow at Macquarie University in Sydney, Australia. He also blogs regularly on his website, Cracking the Enigma. Read more Connections columns at SFARI.org/connections.

Recommended reading

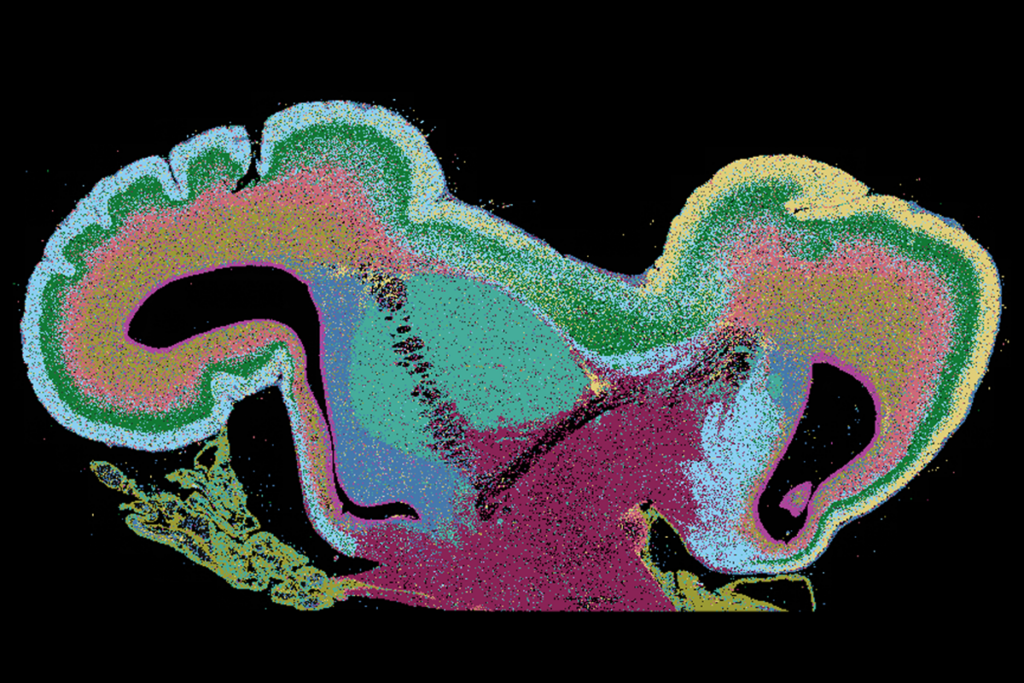

Among brain changes studied in autism, spotlight shifts to subcortex

Home makeover helps rats better express themselves: Q&A with Raven Hickson and Peter Kind

Explore more from The Transmitter

Dispute erupts over universal cortical brain-wave claim

Waves of calcium activity dictate eye structure in flies