Book review: ‘Rigor Mortis’ reveals rampant sloppiness in science

In his new book, journalist Richard Harris writes that lack of reproducibility in research poses a serious threat to science.

Biomedical science faces an existential threat — not the threat of budget cuts or anti-science political views, but one from within the discipline itself. So argues Richard Harris in his new book, “Rigor Mortis.”

The book is an alarming and highly readable summation of what has been called the ‘reproducibility crisis’ in science — the growing realization that large swathes of the biomedical literature may be wrong. By one estimate, according to reporting by Harris, up to 85 percent of published studies may be incorrect. By another, unreliable research costs U.S. taxpayers $28 billion per year — an amount equivalent to nearly the entire annual budget of the U.S. National Institutes of Health (NIH)1.

Harris has been tracking the reproducibility crisis for years as a reporter for National Public Radio. His book highlights the urgency of the problem, which he says threatens to erode public trust in science.

Most worryingly, Harris reports, an untold number of people have participated in drug trials on the basis of demonstrably false findings, putting their own lives at risk.

He highlights an analysis of nine drugs that showed promise for treating amyotrophic lateral sclerosis (ALS) in animal studies. Eight of the drugs failed to slow the deadly neurodegenerative disease in people2.

When scientists at the ALS Therapy Development Institute in Cambridge, Massachusetts, explored why the trials were a bust, the problem turned out to be worse than the known issue that animal models don’t faithfully reproduce human ALS. The scientists found a host of flaws in the animal studies themselves. Some studies included too few animals to detect a statistically valid effect; others failed to account for sex differences. Male mice in the ALS model die earlier than females, and in some of the studies, researchers included more females in the treatment group than in the control group, which could make a drug look like it’s working even if it isn’t.

When the Cambridge scientists redid the studies with more rigorous methods, not one of the candidate drugs showed a benefit. The clinical trials had been destined to fail, at a cost of hundreds of millions of dollars.

Publish or perish:

Most scientists don’t intentionally conduct shoddy experiments, Harris notes. They want to produce findings that benefit society. But science has evolved in ways that make it difficult for them to follow that dream. Funding is scarce and the pressure to publish in high-impact journals is intense, he writes. As a result, scientists prioritize studies that are likely to make a splash over less sexy but perhaps more rigorous work.

Through interviews with researchers and journal editors, Harris learns that the pressure to publish and secure precious grant money drives scientists to engage in behaviors that undermine the reliability of their work. These include ignoring data that don’t fit with their theories, massaging statistics and overhyping results. Some scientists fabricate data outright.

Once a flawed study is published, there is no incentive for other scientists to attempt to replicate its results or point out its flaws, because they must spend their time and money developing and publishing their own research if they wish to advance their careers. And there is no way for scientists to admit their mistakes without damaging their reputations.

Watchdogs and U.S. lawmakers have become aware of the problem, spurring funding agencies to address the issue. The NIH, for instance, has spelled out expectations for how grantees should ensure rigor in their research. The rules include authenticating materials such as antibodies and cell lines, and accounting for sex as a biological variable. Dutch authorities announced last year that they would invest 3 million euros to replicate studies.

Digital badges:

For their part, scores of major journals, including Nature, Science and Cell, have agreed to use publishing practices that promote reproducibility. These journals are requiring authors to fully describe their methods so that others can replicate their experiments, for example. Still, Harris notes that most funders and publishers continue to reward novelty over tenacity.

Non-academic science institutions, on the other hand, are highly motivated to push for solid science. Pharmaceutical companies loathe spending money on drugs that don’t work. And patient-funded groups such as the ALS Therapy Development Institute need to focus their meager funds on research that will lead to new treatments. More and more private funding organizations are requiring researchers to share data, hoping to push scientists toward greater transparency and rigor.

The Center for Open Science, a group dedicated to promoting rigorous research, has created digital ‘badges’ that journals can post on the online version of papers whose authors agree to share data or materials. The center receives much of its support from philanthropic sources such as the Laura and John Arnold Foundation, based in Houston, Texas. Harris suggests that privately funded groups will play an increasing role in keeping science on track.

Clearly, there is no single solution to the wide-reaching problem. But Harris’ book should spur every scientist to scrutinize her experimental design and encourage other scientists to try to reproduce findings. In an age of funding cuts and anti-science political rhetoric, researchers simply can’t afford to be sloppy.

References:

Recommended reading

Expediting clinical trials for profound autism: Q&A with Matthew State

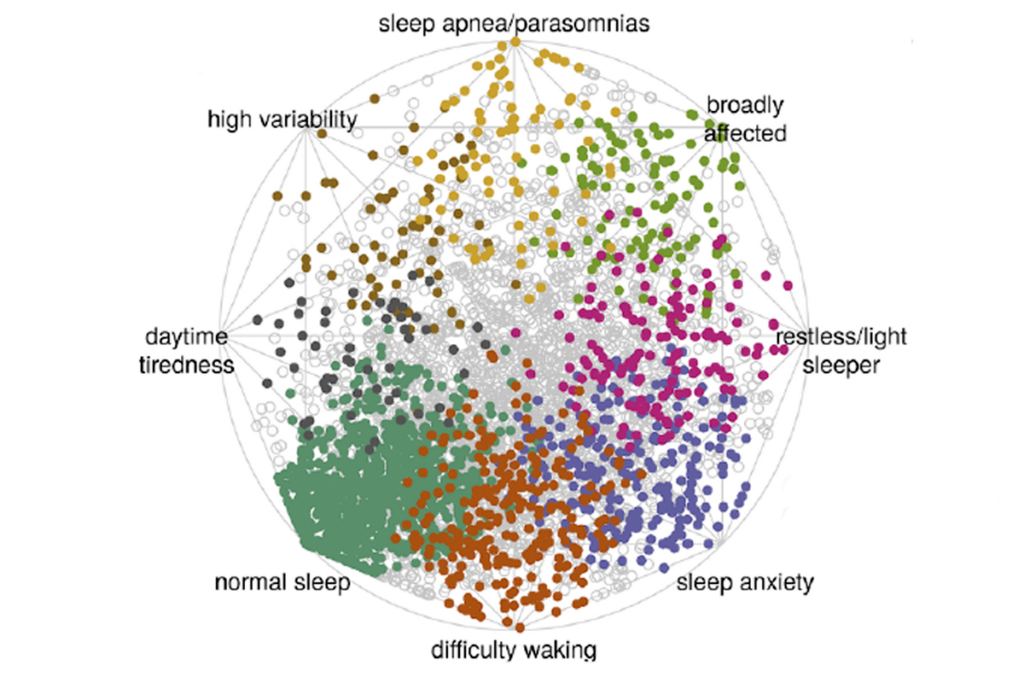

Too much or too little brain synchrony may underlie autism subtypes

Explore more from The Transmitter

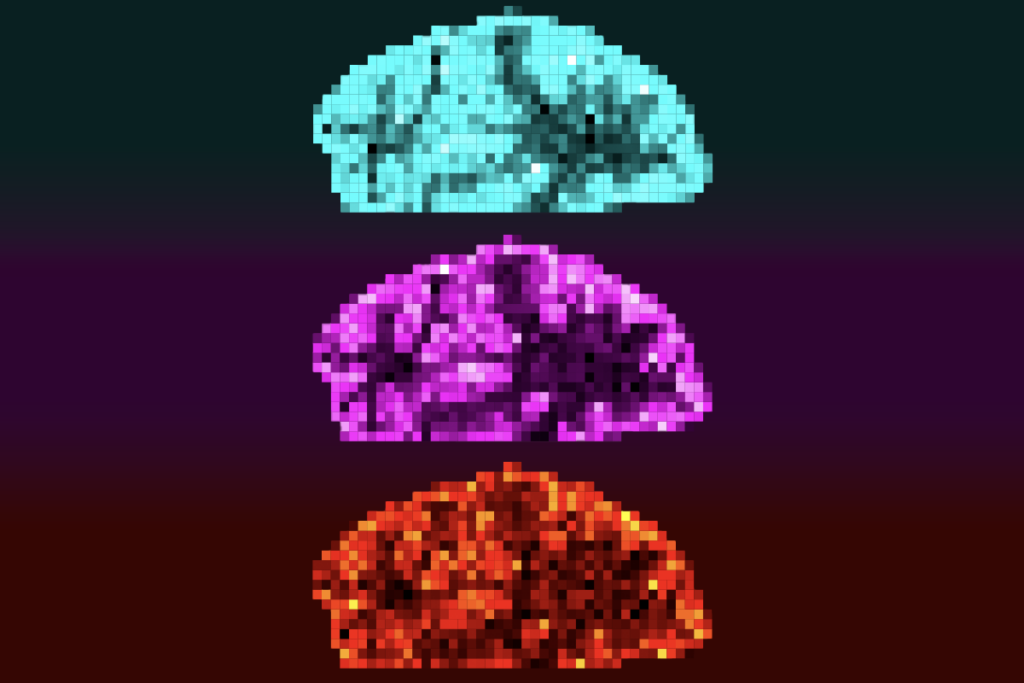

Mitochondrial ‘landscape’ shifts across human brain