A scientist at University College London is correcting two of her published papers after anonymous commenters publicly raised questions about her team’s statistical analyses.

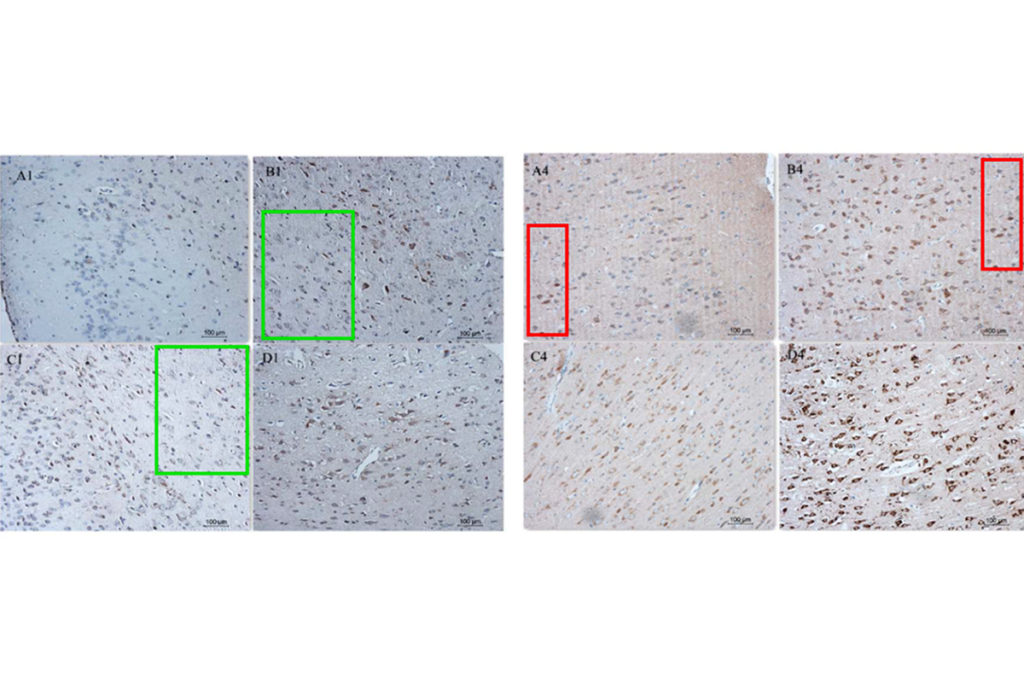

One of the papers was published in Nature Neuroscience in February 2023, and the other appeared in The EMBO Journal last August. Both examined what drives microglia to engulf synapses in mouse models of Alzheimer’s disease. They were co-led by Soyon Hong, group leader at the university’s U.K. Dementia Research Institute.

In November 2023, anonymous commenters on the online discussion forum PubPeer started questioning the data in both papers. Hong wrote on the forum last month that both journals will publish corrections addressing some of the issues “in the near future.”

Nature Neuroscience applied an editor’s note to Hong’s paper on 12 February to alert readers “that concerns have been raised about the data and statistical analysis reported in this article. Further editorial action will be taken once this matter has been resolved,” the note says.

Meanwhile, The EMBO Journal posted in the PubPeer discussion of that paper that it was assessing the matter.

In an email to The Transmitter, Hong said that making the corrections available as soon as possible is a priority for her team and the journals.

The PubPeer commenters raised three main types of concerns about both papers. First, they said Hong and her colleagues erroneously contrasted p-values across some of their experiments to arrive at their conclusions.

For instance, in the Nature Neuroscience paper, the researchers reported a loss of synapses in Alzheimer’s model mice compared with wildtype mice, a result that yielded a p-value of less than 0.05. In a second experiment, the team removed the protein SPP1 in both Alzheimer’s model and wildtype mice; the slight difference in synapse loss between those two groups yielded a p-value greater than 0.05, so the team concluded that synapse loss in the Alzheimer’s mice is partially driven by SPP1.

But the difference between a significant p-value and a non-significant one is not necessarily significant, says Sander Nieuwenhuis, professor of cognitive neuroscience at Leiden University. Instead, Hong’s team should have performed a statistical test to compare the difference found in the first experiment with the difference found in the second one, he says.
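To make Nieuwenhuis’s point concrete, here is a minimal Python sketch using made-up numbers, not data from Hong’s papers. It assumes each experiment is summarized by an effect estimate (synapse loss in Alzheimer’s model mice minus wildtype) with a standard error, that the two experiments use independent groups of mice, and that the estimates are roughly normally distributed. The helper names are hypothetical, and the simple z-test on the difference between the two effects stands in for the interaction test that a full analysis, such as a two-way ANOVA, would run.

```python
import math


def two_sided_p(z: float) -> float:
    """Two-sided p-value for a standard normal test statistic."""
    # P(|Z| >= |z|) = 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2))
    return math.erfc(abs(z) / math.sqrt(2))


def z_test(effect: float, se: float) -> float:
    """p-value for a single effect estimate tested against zero."""
    return two_sided_p(effect / se)


def difference_test(effect_a: float, se_a: float,
                    effect_b: float, se_b: float) -> tuple[float, float]:
    """Test whether two independent effects differ from each other."""
    # The effects come from separate groups of animals, so the standard
    # error of their difference combines both standard errors.
    diff = effect_a - effect_b
    se_diff = math.sqrt(se_a ** 2 + se_b ** 2)
    return diff, two_sided_p(diff / se_diff)


# Hypothetical summaries: percent synapse loss, Alzheimer's model minus wildtype.
p_with_spp1 = z_test(25.0, 10.0)     # SPP1 present: about 0.012
p_without_spp1 = z_test(15.0, 10.0)  # SPP1 removed: about 0.13
diff, p_diff = difference_test(25.0, 10.0, 15.0, 10.0)  # p about 0.48
```

With these numbers, the first effect is significant and the second is not, yet the direct test of their difference gives a p-value near 0.48. On their own, such data would not show that removing SPP1 changed the effect.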

Thirteen years ago, Nieuwenhuis realized many of the neuroscience papers he had to review made this same mistake, which led him to write a paper about it. Back then, he found the problem in nearly half of 157 neuroscience papers he examined.

“It seems such an obvious problem, but apparently, especially in neuroscience, people are not very much aware of it,” he says.

Some of the studies’ statistical analyses “could be improved,” Hong wrote to The Transmitter. Both papers will be corrected to address those issues, she added.

PubPeer commenters also said that some of Hong’s experiments had a statistical error called “pseudoreplication,” meaning that the team analyzed multiple cells from the same mouse, or multiple spines in neuronal cultures, as if they were independent samples. “It’s such an obvious violation of the assumption of independency,” Nieuwenhuis says.

Instead, the analyses should have used either the mean or the median of the regions of interest, says Stanley Lazic, a neuroscientist who owns a probabilistic predictive modeling company and has written about pseudoreplication. Some of the experiments’ conclusions wouldn’t change, but they would likely have bigger error margins, he says.
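The difference between the two approaches can be sketched in a few lines of Python. This is a minimal illustration with hypothetical readings, not data from either paper: it assumes each measurement is tagged with the mouse it came from, collapses the cells to one mean per mouse (a median could be swapped in), and compares the resulting standard error with the one obtained by treating every cell as an independent sample. The function names are illustrative only.

```python
import statistics
from collections import defaultdict


def standard_error(values: list[float]) -> float:
    """Standard error of the mean: sample SD divided by sqrt(n)."""
    return statistics.stdev(values) / len(values) ** 0.5


def per_animal_means(measurements: list[tuple[str, float]]) -> dict[str, float]:
    """Collapse per-cell measurements into one mean value per mouse."""
    by_mouse: dict[str, list[float]] = defaultdict(list)
    for mouse_id, value in measurements:
        by_mouse[mouse_id].append(value)
    return {mouse: statistics.mean(vals) for mouse, vals in by_mouse.items()}


# Hypothetical per-cell readings as (mouse_id, value). Cells from the same
# mouse resemble each other more than cells from different mice.
cells = [
    ("m1", 10.0), ("m1", 11.0), ("m1", 12.0),
    ("m2", 14.0), ("m2", 15.0), ("m2", 16.0),
    ("m3", 18.0), ("m3", 19.0), ("m3", 20.0),
]

# Pseudoreplicated: all 9 cells treated as independent samples (n = 9).
naive_se = standard_error([value for _, value in cells])

# Per-animal: one summary value per mouse, so the sample size is n = 3.
mouse_means = per_animal_means(cells)
animal_se = standard_error(list(mouse_means.values()))
```

Here the per-cell analysis gives a standard error of about 1.19, while the per-mouse analysis gives about 2.31. The mean is unchanged, but the error margin roughly doubles, which is the kind of shift Lazic describes.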

“The comments led us to realize that we were not clear enough on our scientific rationale on what is considered a fair biological unit,” Hong wrote in her email. One of the papers displays data analyzed on a per-spine basis, and the other displays microglia as a biological unit. But the team routinely performs per-mouse analyses as well, according to Hong. She says she plans to correct the papers to display both types of analysis, showing that the results do not change.

Finally, PubPeer commenters pointed out that some of the source data in the Nature Neuroscience paper did not seem to correspond with what was plotted in the paper.

“Wrong files were erroneously uploaded as source data files for the final figures,” Hong wrote in her email. She added that she and her colleagues immediately alerted the journal and are working to correct the files.

Despite the corrections, the major findings of both papers still stand, according to Hong. “There is no change in the outcome or conclusions in either paper,” she wrote.