Eyes provide insight into autism’s origins

The eyes, so goes the ancient proverb, are the window to the soul. Sophisticated machines that track vision suggest that eyes may also be the window to autism.

In the fall of 2002, clinicians at the Yale Child Study Center were testing 15-month-old Helen*** for developmental disorders. For most of her life, Helen had developed normally: smiling, then crawling, then walking, even saying a handful of words. But when she was about a year old, she stopped speaking. She stopped bringing things to her parents, and ignored new people.

Her parents were especially worried because Helen’s 3-year-old brother had been diagnosed with autism a year earlier. Their fears for their daughter were confirmed: after a battery of cognitive and behavioral tests, the specialists at Yale found that Helen, too, is autistic.

In a way, Helen’s visit was “serendipitous,” says Ami Klin, a psychologist at the center. At the time, clinicians rarely saw an autistic child younger than age 2 or 3, so Helen provided a rare research opportunity.

Klin and his colleague, Warren Jones, began observing the way Helen looked at people. In one experiment [1], they used infrared cameras mounted on a baseball cap to track her precise eye movements as she watched a video of a cooing woman’s face. Unlike typical babies, Helen focused much more on the woman’s mouth than on her eyes.

“That [experiment] generated the hypothesis that this girl could actually be watching somebody’s face, but not necessarily experiencing that face as a person,” Klin says.

Helen’s case study has led to several follow-up experiments with larger groups of children, which have so far produced similar results. Several research groups are using eye-gazing differences to probe how autism might be linked to social cognition.

Because the technology provides one of the cheapest, most quantifiable measures of social cognition, it could routinely be used to diagnose autism, some experts say.

“Eye tracking is one of the few affordable and easily implemented technologies that gives us a real quantifiable way of measuring social cognition,” says Michael Spezio, a social neuroscientist at Scripps College in California. The machines needed are much cheaper than those for EEG, fMRI or MEG, but more expensive and labor-intensive than the current gold standard of behavioral tests.

“People are really just feeling out what existing [eye-tracking] paradigms would be appropriate to use on children of various ages, and then how children with autism will respond,” says Sally Rogers, a developmental psychologist at the University of California, Davis. “We’re still at the beginnings of this kind of work.”

Eye tracking has long been used in human perception experiments. In 1977, psychologists at the University of Durham in England tracked the eye movements of three healthy adults as they looked at black-and-white photographs of human faces. They found that people tend to gaze mostly at ‘core features’ of the face: the nose, the mouth, and especially the eyes [2].

This focus on the eyes “is well-programmed in us,” Klin says. Decades of research have shown that even in the first few days of life, babies prefer to look at their mothers [3], and mothers prefer to look at their babies [4].

“Out of this mutually reinforcing choreography, a lot that is important in human development seems to emerge,” Klin says. Because impaired social development is a characteristic feature of autism, it’s logical to study eye movements in people with autism, he adds.

In 2002, researchers at the University of North Carolina published the first eye-tracking study targeting individuals with autism [5]. They tracked the eye movements of five adults with autism looking at photographs of facial expressions, and found that they spent a smaller fraction of time looking at the nose and eyes than did controls.

But still photographs lack the rapid social cues that occur in real social interactions, Klin notes. “Nothing is more challenging for individuals with autism than naturalistic situations,” he says. “In the lab, we should be interested in how they can function in the real world.”

In 2002, Klin and Jones published a study that used highly emotional scenes from the film Who’s Afraid of Virginia Woolf? instead of photos [6]. They confirmed that individuals with autism gaze at the actors’ mouths, or even at movements far in the periphery of the scene, as opposed to watching the actors’ eyes.

These eye-tracking studies all involved adults or adolescents with autism ― until Helen.

Connecting the dots:

Helen was tested on two eye-tracking paradigms. In the first, she watched a video of a woman making friendly cooing noises. Unlike typical babies, Helen focused much more on the woman’s mouth than on her eyes.

The second paradigm tested Helen’s interest in biological motion by using ‘point-light animations’ of body movement that Klin and Jones had created at an animatronics studio in California. In the studio, they attached small lights to the major joints on an actor’s body. Then the actor was taped making a variety of movements ― such as playing patty-cake or waving excitedly ― in the dark.

The resulting animations, white dots on a black background, look like moving constellations. Normal babies ― even as young as two days old ― can connect the dots and recognize the animations as human figures.

And typically developing babies in Klin’s lab preferred to look at the waving human figure rather than at the same animation presented upside-down. “Our typically developing kids saw those displays as people. They’re imposing social meaning onto these white dots,” says Jones.

But Helen didn’t make this social connection.

She showed a preference for the right-side-up version of the patty-cake animation only when it was accompanied by clapping noises each time the actor’s hands came together. “She was acutely sensitive to this physical contingency,” Klin says, meaning that she had no trouble recognizing the physical synchrony between movement and sound.

This idea of “physical contingency” might explain why many previous experiments showed that autistic individuals seem to gaze at the mouth, rather than the eyes. The mouth moves more than the eyes do, and produces sounds that are synchronous with these movements.

Klin and his group have expanded their studies to two groups of 2-year-old autistic children. Their results are currently in press. “Let’s just say that whatever you read about the 15-month-old stands for these larger groups of children,” Klin says.

Center of emotion:

Knowing where children with autism are looking, however, doesn’t necessarily show whether and how their brains use this visual information.

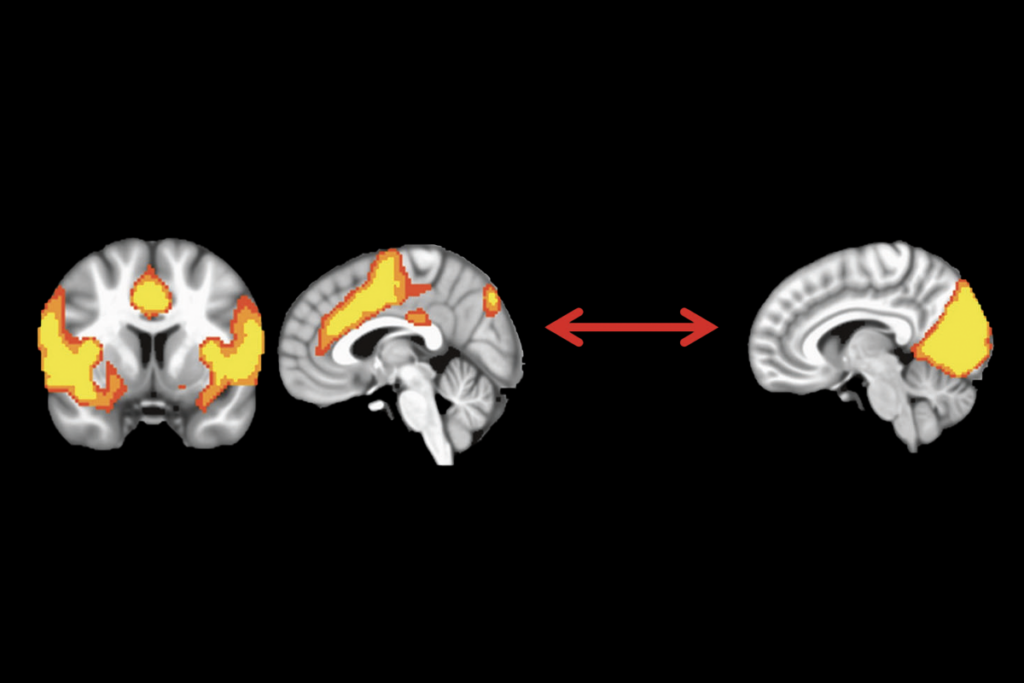

In 2005, researchers at the California Institute of Technology found that, like people with autism, a patient with unusual lesions to the amygdala (a small region in the temporal lobe that’s thought to be the center of emotion) focused less on the area around the eyes when judging emotions [7]. The observation suggested that autism might stem from abnormal function of the amygdala.

Last year, Spezio reported that when people with autism are shown random parts of a face expressing fear or happiness, they rely on the mouths rather than on the eyes to correctly identify the emotion [8].

Even when they were shown photos of eyes, they didn’t make use of that information to make emotional judgments. From this, Spezio concluded that “the brains of people with autism treat facial information differently.”

“What’s really interesting is how an autistic person looks at the face as it unfolds over a minute or two of conversation,” Spezio says. These longer “gaze paths”, he adds, are likely to show distinct differences between autistic and normal individuals.

In unpublished studies, Klin and Jones are finding abnormal gaze patterns in babies younger than a year old who later develop autism.

But another ongoing study suggests that eye gazing patterns may change drastically during early development.

In 2006, Rogers and her colleagues at the University of California, Davis, tested 6-month-old siblings of children with autism as they interacted with their mothers in real time through a TV monitor [9]. Using a “still-face paradigm,” the researchers measured 31 babies’ eye movements just after the mothers suddenly froze their faces. They found, just as Klin’s group had, that these siblings of autistic kids spent more time looking at their mothers’ mouths than their eyes.

As the researchers followed these babies, however, they found that the ones who had gazed longer at their mothers’ mouths did not necessarily go on to develop autism.

“The three children who did develop autism, in fact, all had shown a preference for their mothers’ eyes,” says Rogers. Her group is still analyzing the results but, she says, “The lesson here is that we have to be very careful when extracting a hypothesis from adult studies and taking it down to infancy.”

Before using eye tracking as a diagnostic tool, “We’d have to have some reliable markers using eye-tracking that are better than behavioral markers,” Rogers says. “It’s an exciting time right now, we’re all testing new paradigms and new kinds of stimuli. But we’re not there yet.”

*** Name has been changed for privacy reasons.

References:

1. Klin A. and Jones W. Dev. Sci. 11, 40-46 (2008)

2. Walker-Smith G.J. et al. Perception 6, 313-326 (1977)

3. Field T.M. et al. Infant Behav. Dev. 7, 19-25 (1984)

4. Lewis M. and Feiring C. Child Dev. 60, 831-837 (1989)

5. Pelphrey K.A. et al. J. Autism Dev. Disord. 32, 249-261 (2002)

6. Klin A. et al. Arch. Gen. Psychiatry 59, 809-816 (2002)

7. Adolphs R. et al. Nature 433, 68-72 (2005)

8. Spezio M. et al. J. Autism Dev. Disord. 37, 929-939 (2007)

9. Merin N. et al. J. Autism Dev. Disord. 37, 108-121 (2007)

Recommended reading

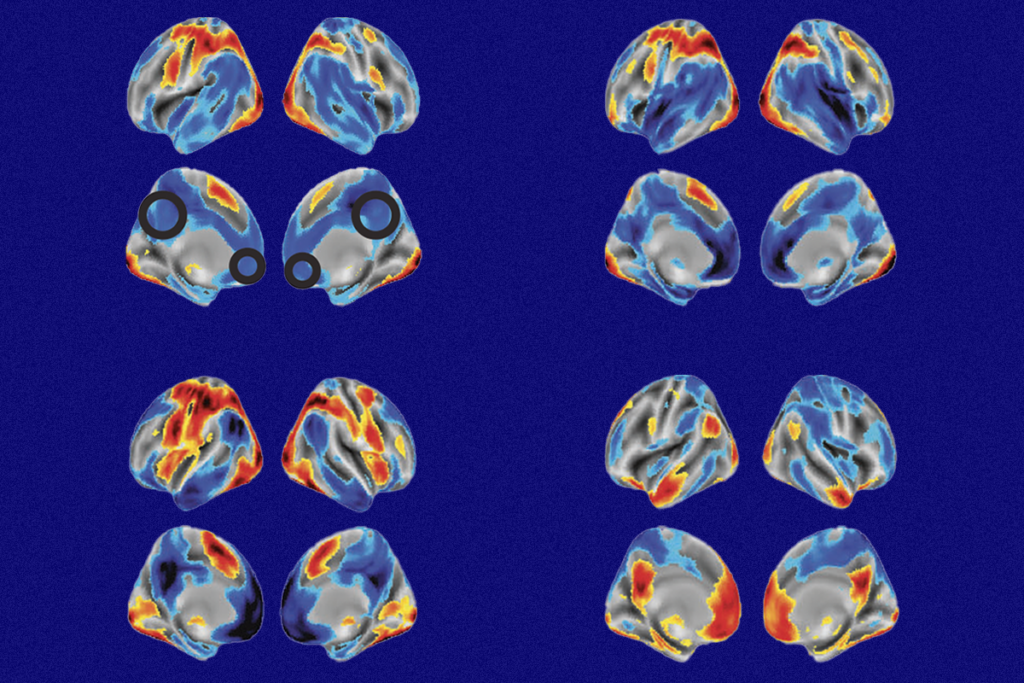

Too much or too little brain synchrony may underlie autism subtypes

Developmental delay patterns differ with diagnosis; and more

Split gene therapy delivers promise in mice modeling Dravet syndrome

Explore more from The Transmitter

During decision-making, brain shows multiple distinct subtypes of activity

Basic pain research ‘is not working’: Q&A with Steven Prescott and Stéphanie Ratté