Machine-learning models can predict a neuron’s location based on recorded bursts of activity, a new preprint suggests. The findings may provide novel insights into how the brain integrates signals from different regions, the researchers say.

The algorithm, trained on electrode recordings of neurons in mice, appeared to learn a cell's whereabouts from its interspike intervals, the sequence of delays between successive blips of activity. And after deciphering the spike pattern from one mouse, the tool predicted neuronal locations based on recordings from another rodent.

That conservation between animals suggests the information serves some useful brain function, or at least doesn’t get in the way, says lead investigator Keith Hengen, assistant professor of biology at Washington University in St. Louis.

Although more research is needed, the anatomical information embedded in interspike intervals could—in theory—provide contextual information for neuronal computations. For example, the brain might process signals from thalamic neurons differently from those in the hippocampus, says study investigator Aidan Schneider, a graduate student in Hengen’s lab.

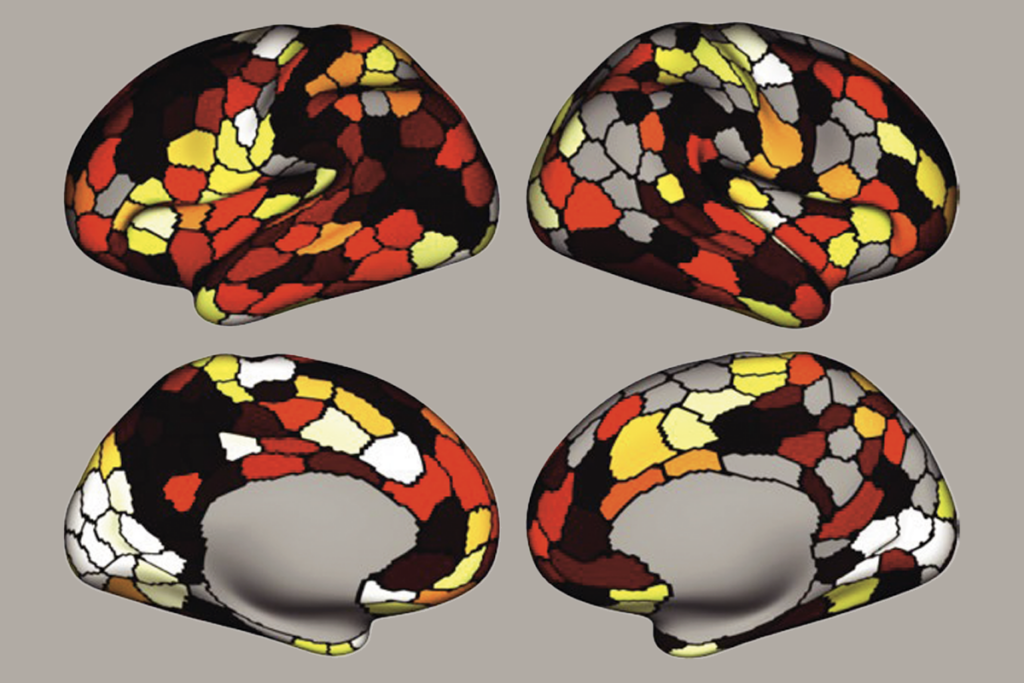

Schneider and his colleagues trained the model using tens of thousands of Neuropixels probe recordings from 58 awake mice, published by the Allen Institute. When Schneider's team presented the algorithm with data it had not seen during training, it correctly identified whether a given neuron resided in the hippocampus, midbrain, thalamus or visual cortex 89 percent of the time, once the team had removed noise from the data. (With four possible regions, random guesses would be correct 25 percent of the time.) But the tool was less accurate at pinpointing specific substructures within those regions.

It’s a great example of the kinds of insights that labs poring over huge datasets can produce, says Drew Headley, assistant professor of molecular and behavioral neuroscience at Rutgers University, who was not involved in the study. But the findings may simply echo published reports of variations in spiking activity across different brain regions, he says.

By manipulating the spiking pattern, the group was able to identify which of its features conveys a neuron's location. Shuffling and adjusting the time gaps between spikes blunted the model's predictions, suggesting that the sequence of spike intervals holds anatomical information. The findings were posted on bioRxiv in July.

The tool could be used to ensure electrodes are recording from the correct location during experiments, Hengen and his colleagues say.
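To make the idea of a shuffle control concrete, here is a minimal Python sketch. It is not the study's code. The spike times are synthetic, the burst pattern (three short gaps followed by a long pause) is invented for illustration, and the lag-1 correlation between consecutive intervals is just one hypothetical order-sensitive statistic. Shuffling keeps every delay but scrambles their order, so anything that depends only on the set of delays (such as the mean interval) is unchanged, while measures that depend on which delay follows which can change.

```python
import math
import random


def interspike_intervals(spike_times):
    """Return the delays between successive spikes (same units as the input)."""
    times = sorted(spike_times)
    return [later - earlier for earlier, later in zip(times, times[1:])]


def shuffled_intervals(intervals, seed=0):
    """Return a randomly reordered copy of the intervals.

    The collection of delays is unchanged, but their sequence
    (which delay follows which) is destroyed. The input is not modified.
    """
    rng = random.Random(seed)
    copy = list(intervals)
    rng.shuffle(copy)
    return copy


def lag1_correlation(intervals):
    """Pearson correlation between each interval and the one that follows it.

    A hypothetical example of a statistic that depends on interval order.
    """
    xs, ys = intervals[:-1], intervals[1:]
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


if __name__ == "__main__":
    # Synthetic "bursting" neuron: three 5 ms gaps, then a 100 ms pause.
    spike_times = [0]
    for gap in [5, 5, 5, 100] * 25:
        spike_times.append(spike_times[-1] + gap)

    isis = interspike_intervals(spike_times)
    shuffled = shuffled_intervals(isis, seed=0)

    # Mean interval is identical before and after shuffling...
    print("mean ISI (original, shuffled):",
          sum(isis) / len(isis), sum(shuffled) / len(shuffled))
    # ...but the order-dependent statistic generally differs.
    print("lag-1 correlation (original):", round(lag1_correlation(isis), 3))
    print("lag-1 correlation (shuffled):", round(lag1_correlation(shuffled), 3))
```

A model that relied only on the distribution of delays would be unaffected by this kind of shuffle; a drop in accuracy after shuffling is what points to the order of intervals carrying information.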

That might be useful when working with rodents, but the brain structures recorded in the mice—including the thalamus and hippocampus—aren’t accessible for recording neurons in people, says Angelique Paulk, instructor in neurology at Harvard Medical School, who wasn’t involved in the study. More reachable areas, such as the prefrontal cortex, evolved more recently and might not behave in the same way as the regions found in the back of the brain, Paulk says. “How generalizable is it really, if you’re focused on these [back] structures?”

And the tool might not be accurate enough to provide the detailed information that neuroscientists need. It’s relatively easy to position electrodes in the right brain region, but there’s less certainty around cortical layers, says Tim Harris, senior fellow and director of the applied physics and instrumentation group at the Howard Hughes Medical Institute’s Janelia Research Campus, who wasn’t involved in the work. “The data doesn’t allow you to draw those fine lines,” he says.

Hengen and his colleagues dispute that point. In the visual cortex, the algorithm performed little better than chance at identifying substructures, but within the hippocampus it could pinpoint a neuron's substructure with 51 percent accuracy. "It's not a coordinate that we're predicting, but we can pull out those substructures," Schneider says.

And as the models are fed with increasing volumes of data, they should be able to pinpoint specific structures more accurately, says study investigator Gemechu Tolossa, a graduate student in Hengen’s lab.

Whether or not the model is used in the lab, building on the work with explainable artificial intelligence—tools that untangle the predictions made by algorithms—could help scientists better understand how the brain encodes and uses anatomical information, Headley says. “That could be a substantial contribution to neurobiology.”