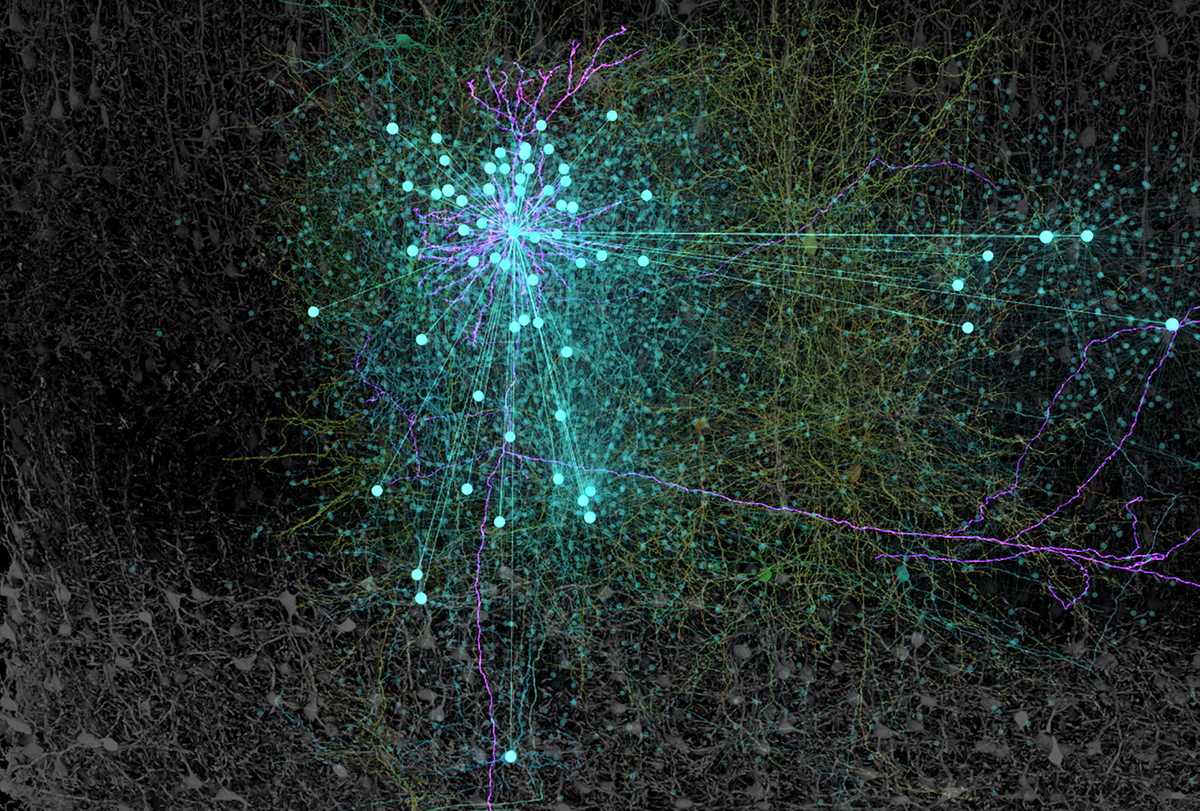

Over the past 10 years, systems neuroscience has put substantial emphasis on the inhibitory cells and connections of the cortex. But about 80 percent of cortical neurons are excitatory, each receiving thousands of excitatory synaptic inputs. A substantial fraction of those inputs—half or more, depending on the estimate—come from other neurons within just a few hundred microns’ distance. These excitatory-excitatory recurrent connections extend across layers but also form dense local networks within each cortical layer. In each cubic millimeter of the cortex, there are as many as half a billion excitatory recurrent connections. What are these recurrent synapses doing?

Because they are so numerous and widespread, excitatory recurrent synapses are metabolically costly to build and maintain, which suggests they play a vital role in brain function. Yet studying these networks has been challenging, in large part because of a lack of experimental tools, so we don't yet know exactly what recurrent synapses do. That is changing, thanks to new tools for targeting specific neurons, along with theoretical advances in computational neuroscience and insights about recurrent networks from artificial intelligence.

Scientists have traditionally studied the role of longer-range connections in the brain by perturbing those connections with genetic tools designed to target specific cell types. Optogenetics has been particularly helpful in understanding the roles of cell classes, enabling researchers to modify neural activity in genetically defined cells with millisecond precision. Because inhibitory cell classes can be identified and tagged by molecular markers, such as parvalbumin or somatostatin expression, they have been much easier to study than excitatory cells. To date, only a few genetic markers have been found that sort the tens of thousands of excitatory cells in a typical layer 2/3 cortical column into distinct groups, which makes their roles in the brain hard to study.

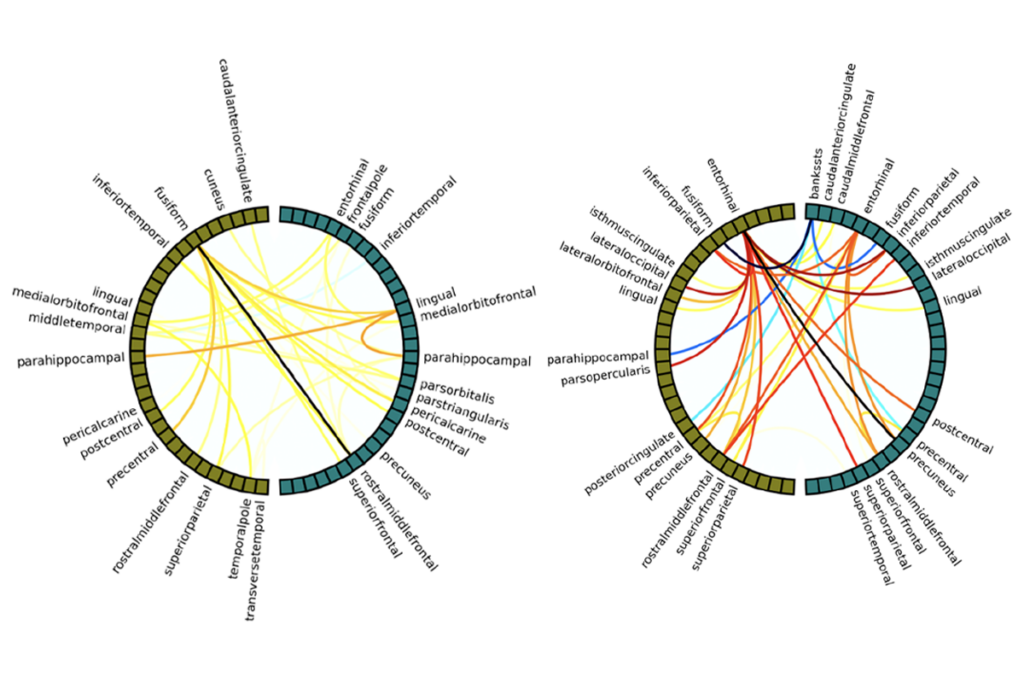

Recurrent networks are also difficult to study by virtue of their very connectedness. Individual cortical excitatory neurons receive hundreds or thousands of inputs, many of which are strongly correlated. When we see a person’s face, for example, neurons in the brain’s face regions receive long-range, feedforward and feedback inputs from other visual regions. They also receive local synapses from other face neurons. This connectivity creates a cortical network filled with hidden influences: Neurons’ activities are correlated, both with other local neurons and with common inputs.

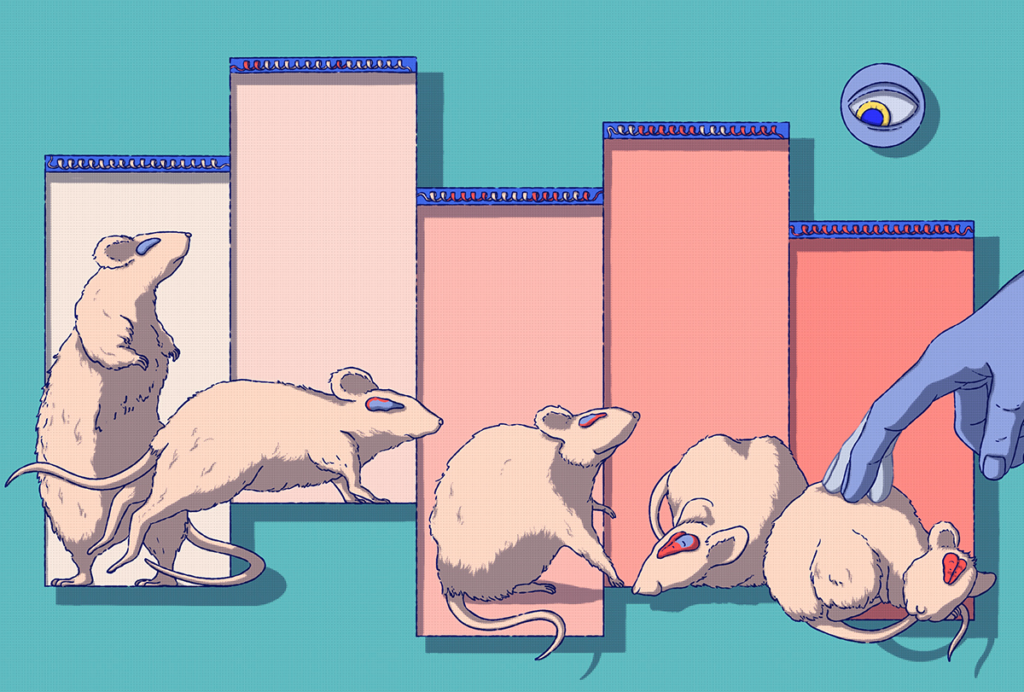

It's nearly impossible with recording methods alone to separate recurrent from long-range effects, owing to the difficulty of estimating those hidden factors. Instead, researchers need causal approaches: the ability to perturb neural activity in specific cells or populations of cells independently of the rest of the network. Newer stimulation approaches make that possible, even in the absence of genetic labels. Scientists can group cells by their activity profile and target them with two-photon optogenetics, which can stimulate chosen groups of neurons with near single-cell resolution and, combined with two-photon imaging, forms an all-optical method for both recording and controlling activity. Other useful experimental approaches combine genetics and light, yoking neural activity to gene expression that can later be used for optogenetic or chemogenetic control.
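To make the confound concrete, here is a toy sketch in Python. It is a hypothetical linear model, not a model of any real circuit: two neurons, A and B, share a hidden common input `s`, and B may also receive a recurrent synapse from A with weight `w`. Passive recording shows correlated activity whether or not that synapse exists; adding an external stimulus to A only (the perturbation) shifts B's mean activity by roughly `w` times the stimulus, which isolates the recurrent contribution. The function names and all parameter values are illustrative assumptions.

```python
import math
import random
import statistics


def simulate(w, stim, n_trials=20_000, seed=0):
    """Toy linear model: A and B share a hidden input s; B also receives w * A.

    `stim` is an external perturbation added to neuron A only.
    All parameters are illustrative assumptions, not measured values.
    """
    rng = random.Random(seed)
    a_vals, b_vals = [], []
    for _ in range(n_trials):
        s = rng.gauss(0.0, 1.0)              # hidden common input (e.g., shared visual drive)
        a = s + rng.gauss(0.0, 1.0) + stim   # A: common input + private noise + perturbation
        b = s + rng.gauss(0.0, 1.0) + w * a  # B: common input + private noise + recurrent input from A
        a_vals.append(a)
        b_vals.append(b)
    return a_vals, b_vals


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    for w in (0.0, 0.3):
        a, b = simulate(w, stim=0.0, seed=0)       # passive recording, no perturbation
        _, b_pert = simulate(w, stim=2.0, seed=1)  # stimulate A only
        effect = statistics.fmean(b_pert) - statistics.fmean(b)
        print(f"w={w}: corr(A, B)={pearson(a, b):.2f}, "
              f"effect of stimulating A on B={effect:.2f}")
```

Under these assumptions the correlation between A and B should come out near 0.5 even with `w = 0`, while the perturbation effect on B is near zero; with `w = 0.3` the perturbation effect should be near 0.6 (`w` times the stimulus of 2). Correlation alone cannot tell the two cases apart as cleanly as the perturbation does.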

The ability to perturb groups or populations of cells has turned out to be particularly important for studying local excitatory networks. Most individual excitatory cortical synapses are weak, so stimulating just one cell in a cortical area has a minimal effect on the network. But when many neurons are stimulated, their local effects sum to produce strong influences in other cells. Such population input also more closely mirrors natural conditions, in which many presynaptic neurons are active together.
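A back-of-the-envelope sketch shows why population stimulation matters. The numbers below (a 10 percent chance that a stimulated cell contacts a given neighbor, 0.5 mV of depolarization per contact, 15 mV from rest to spike threshold) are illustrative assumptions rather than figures from this article, and the hypothetical `fraction_driven` function sums inputs linearly while ignoring inhibition, which in real cortex would strongly shape the outcome.

```python
import random


def fraction_driven(n_stim, n_post=1_000, p_connect=0.1, epsp_mv=0.5,
                    threshold_mv=15.0, seed=0):
    """Fraction of postsynaptic cells pushed past threshold by n_stim stimulated cells.

    Hypothetical parameters: each stimulated cell contacts a given postsynaptic
    cell with probability p_connect, each contact adds epsp_mv of depolarization,
    and inputs sum linearly. Inhibition and dendritic nonlinearities are ignored.
    """
    rng = random.Random(seed)
    driven = 0
    for _ in range(n_post):
        # Count how many of the stimulated cells synapse onto this postsynaptic cell
        n_contacts = sum(1 for _ in range(n_stim) if rng.random() < p_connect)
        depolarization = n_contacts * epsp_mv  # linear summation of weak inputs
        if depolarization >= threshold_mv:
            driven += 1
    return driven / n_post


if __name__ == "__main__":
    for n in (1, 10, 100, 500):
        print(f"{n:>4} stimulated cells -> {fraction_driven(n):.1%} of targets driven")
```

Under these assumptions, one stimulated cell adds on average only about 0.05 mV (1 × 0.1 × 0.5 mV) to a given neighbor and drives none of them to threshold, whereas stimulating several hundred cells brings nearly every neighbor past threshold.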

T

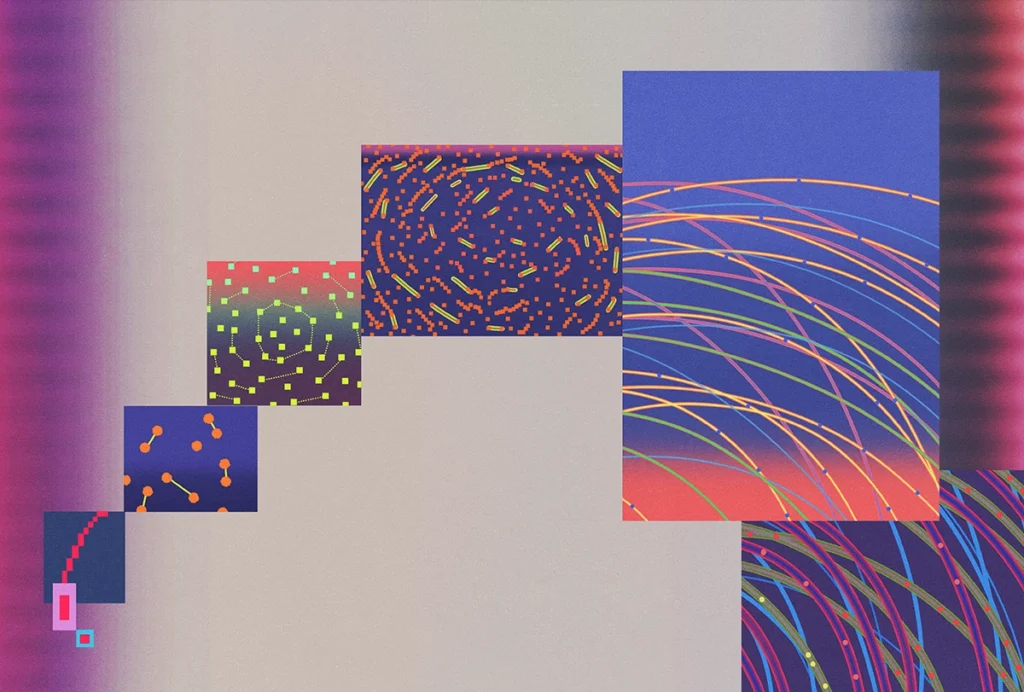

hese experimental advances have put us in a position to explore ideas coming from two different theoretical domains: computational neuroscience and AI. Both fields have developed recurrent neural network (RNN) models but with different features and goals. Both offer insight into what recurrent connections can do, as well as the opportunity to test theories for how these circuits operate in the brain.RNNs from computational and theoretical neuroscience more closely resemble biology—they include networks with spiking neurons, inhibitory neurons, and neurons with complex ion channels and other features. From these efforts, we know that recurrent networks with a brain-like structure can do network computations of many sorts, from pattern completion to decision-making via network dynamics. Because these models use real neural features, they can be refined and used to direct future experiments. Ring attractor networks offer a clear example. Computational neuroscientists theorized that these structures might underlie the brain’s representation of head direction, which was later confirmed experimentally in flies and mice. The recurrent connections in the network help shape the bump of activity that represents head direction.
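A ring attractor can be sketched in a few dozen lines. The version below is a generic rate-model sketch, not the specific fly or mouse circuit: units are arranged on a ring by preferred angle, weights combine uniform inhibition (`j0`) with cosine-shaped excitation between similarly tuned units (`j1`), and a brief cue sets where the bump forms. The function names and all parameter values are assumptions chosen so that a bump appears; after the cue is removed, the recurrent connections alone hold the bump in place.

```python
import math


def simulate_ring(cue_angle, n=48, j0=-2.0, j1=6.0, baseline=0.1,
                  cue_strength=0.5, t_cue=5.0, t_total=30.0, dt=0.1, tau=1.0):
    """Rate-based ring attractor sketch; returns (preferred angles, final rates).

    Parameter values are illustrative assumptions. The cue is applied only
    for t < t_cue; afterwards the bump must be held by recurrent weights alone.
    """
    angles = [2 * math.pi * i / n for i in range(n)]
    # Weights depend only on the angular difference: uniform inhibition (j0 < 0)
    # plus excitation between units with similar preferred angles (j1 > 0).
    weights = [[(j0 + j1 * math.cos(a_i - a_j)) / n for a_j in angles]
               for a_i in angles]
    rates = [0.0] * n
    for step in range(int(round(t_total / dt))):
        cue_on = step * dt < t_cue
        new_rates = []
        for i in range(n):
            drive = baseline + sum(w_ij * r_j for w_ij, r_j in zip(weights[i], rates))
            if cue_on:
                drive += cue_strength * math.cos(angles[i] - cue_angle)
            target = min(1.0, max(0.0, drive))  # threshold-linear, saturating at 1
            new_rates.append(rates[i] + (dt / tau) * (target - rates[i]))  # Euler step
        rates = new_rates
    return angles, rates


def decode_angle(angles, rates):
    """Population-vector estimate of the bump's center, in [0, 2*pi)."""
    x = sum(r * math.cos(a) for a, r in zip(angles, rates))
    y = sum(r * math.sin(a) for a, r in zip(angles, rates))
    return math.atan2(y, x) % (2 * math.pi)


if __name__ == "__main__":
    angles, rates = simulate_ring(cue_angle=math.pi / 2)
    print(f"decoded heading after cue removed: {decode_angle(angles, rates):.3f} rad")
    print(f"peak rate {max(rates):.2f}, minimum rate {min(rates):.2f}")
```

Because the weights depend only on the difference between preferred angles, the bump can sit at any position on the ring; the cue picks the position, and recurrence holds it. Lowering `j1` below 2 in this normalization removes the recurrent amplification that sustains the bump, so in this sketch activity relaxes back toward a flat profile once the cue ends.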

RNNs from AI, by contrast, use simple units and are built using training procedures that are not guided by biology. AI models have been more complex and powerful than their computational neuroscience counterparts, and researchers have used them to explore the limits of what recurrent networks can do. Though some of the general principles of recurrent computation seen in AI models do not apply to the brain, they have inspired interesting hypotheses. For example, artificial RNNs are often used to perform time-based sequence learning, and perhaps brain recurrent networks also perform this function.

The interaction among these fields offers great potential for making progress in understanding cortical recurrent networks—by taking biologically inspired principles from theoretical and computational neuroscience, exploring rules of RNN operation from AI, and testing resulting hypotheses via population-level experimental tools.

The 2024 Nobel Prize in Physics, awarded to Geoffrey Hinton and John Hopfield in part for their work on recurrent network models, hints at that promise. Those models were inspired by brain structure and ultimately led to modern, large-scale AI systems. Decades later, insight is poised to flow in the other direction: Using principles of recurrent networks derived from AI systems will help us understand biological brains.