At any social gathering of neuroscientists, the conversation can turn to what’s holding back the field. “Funders,” some grumble, lamenting the amount of money thrown at microbiology instead. “Journals,” others chip in, grousing at the gatekeeping editors who stop new ideas from flourishing. “The tyranny of old-timers,” some newly minted principal investigator often mutters, cursing the difficulty of challenging entrenched ideas.

“Averaging,” I say, and everyone moves slightly farther away from me down the bar. But really, it is a problem: Averaging is ubiquitous—and hides from us how the brain works.

A major goal of neuroscience is to understand the signals sent by neurons. Those signals—their spikes—underpin the brain’s functions, whether they be seeing, deciding or moving. To understand, say, how we make a decision, many argue we need to understand the spikes that underlie it: how they convey information about the options available, how they convey the process of a decision between those options, and how they convey that decision into action.

Averaging is everywhere in our attempts to understand the spiking of neurons. A good example is that fundamental workhorse of sensory neuroscience, the tuning curve. Imagine that you want to know what frequency of sound a neuron most prefers. You play that neuron tones in a range of frequencies and count the number of spikes it emits for each. Repeat that a bunch of times, average the number of spikes emitted for each frequency, and voila, you’ve built yourself a tuning curve: a plot that tells you the average number of spikes the neuron sends for each frequency. It may well show you that the neuron sends more spikes, on average, to 100 hertz tones than to 10 or 1,000 hertz tones, suggesting the neuron is tuned to that specific frequency of sound.
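To make the recipe concrete, here is a minimal sketch of building a tuning curve from simulated data. Everything in it is an assumption for illustration: the five tone frequencies, the 50 repeats, the Poisson variability and the made-up "true" tuning that peaks at 100 hertz. None of it comes from a real recording.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical experiment: five tone frequencies (Hz), each played n_trials times.
frequencies = np.array([10, 30, 100, 300, 1000])
n_trials = 50

# Assumed ground truth, used only to simulate data: mean spike count peaks at 100 Hz.
true_mean = 5.0 * np.exp(-0.5 * (np.log10(frequencies / 100.0) / 0.5) ** 2)

# Simulated spike counts, shape (n_trials, n_frequencies).
# Poisson trial-to-trial variability is an assumption of this sketch.
spike_counts = rng.poisson(true_mean, size=(n_trials, len(frequencies)))

# The tuning curve: average spike count per frequency, taken over repeats.
tuning_curve = spike_counts.mean(axis=0)

# The "preferred" frequency is wherever that average is largest.
preferred_frequency = frequencies[np.argmax(tuning_curve)]
```

Note what the last two lines throw away: `spike_counts` holds a different number on every repeat, and `tuning_curve` keeps only one number per frequency.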

The natural extension of this idea is to look across time, creating a “peri-stimulus time histogram.” This shows nothing more than the average number of spikes a neuron sends just before and after some significant event. When an animal repeatedly gets a reward, for example, we look at how a neuron responds to that reward by seeing how many more or fewer spikes it sends, on average, after a reward than before it.
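A peri-stimulus time histogram can be sketched in a few lines. The function name `psth`, the window, the 50-millisecond bin width and the toy spike times below are all hypothetical choices for illustration, with the event placed at time zero on every trial.

```python
import numpy as np

def psth(spike_times_per_trial, window=(-0.5, 1.0), bin_width=0.05):
    """Trial-averaged firing rate (spikes/s) in time bins around an event at t = 0.

    spike_times_per_trial: list of 1-D arrays, spike times in seconds
    relative to the event, one array per trial.
    """
    n_bins = int(round((window[1] - window[0]) / bin_width))
    edges = np.linspace(window[0], window[1], n_bins + 1)
    counts = np.zeros(n_bins)
    for spikes in spike_times_per_trial:
        # Count this trial's spikes in each bin and add them to the running total.
        counts += np.histogram(spikes, bins=edges)[0]
    # Divide by the number of trials and the bin width to get an average rate.
    rate = counts / (len(spike_times_per_trial) * bin_width)
    return edges, rate

# Toy data: three trials, a handful of spikes each (made-up numbers).
trials = [
    np.array([-0.3, 0.1, 0.15]),
    np.array([0.12, 0.4]),
    np.array([-0.1, 0.11, 0.13, 0.6]),
]
edges, rate = psth(trials)
```

With so few spikes per trial, no single trial shows much of anything; the response only appears once the counts are pooled.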

Neuroscientists use these kinds of averaging for good reasons. A neuron typically emits just a few spikes around significant events, so only by averaging over many repeats of a tone, reward or movement can we have enough spikes to pull the signal out and see what event the neuron responds to most reliably. Neurons also typically emit different numbers of spikes to the same event, so only by averaging over many repeats of the same event can we seemingly extract the reliable signal the neuron uses to encode it. Averaging undoubtedly helps us to wrap our heads around the complexity of the brain’s activity; we even use the average response of neurons as the starting point for some of our most sophisticated analyses of population activity, such as the many variants of principal component analysis.

But neurons don’t take averages. All a neuron gets to work with is the moment-to-moment spikes sent by its inputs. At any given moment, those inputs carry few, if any, spikes. Each input likely varies its response to the same significant event. Averaging over time hides what a neuron actually sees, what it actually gets to compute with.

The way averaging hides the actual moment-to-moment signals of neurons becomes a problem when we consider how much deep theory is based on averaged neural activity. A striking example is the theory for how neurons represent evidence used to make decisions. The theory says there exist cortical neurons, especially those in the lateral intraparietal area, that act as evidence accumulators, ramping up their spiking in proportion to how much incoming sensory information supports the option they represent. One option wins out when this ramping activity reaches a threshold.

This theory was based on a body of work that looked at recordings from individual cortical neurons as monkeys watched dots moving randomly on a screen and reported if the majority were moving left or right. Cortical neurons that favored, say, the leftward option increased their spiking when the majority of dots were moving left. They ramped up their activity in proportion to the ramping up of evidence for their option, looking just like they were accumulating that evidence. The problem, of course, is that these ramps were averages: averaged over trials and averaged over neurons.

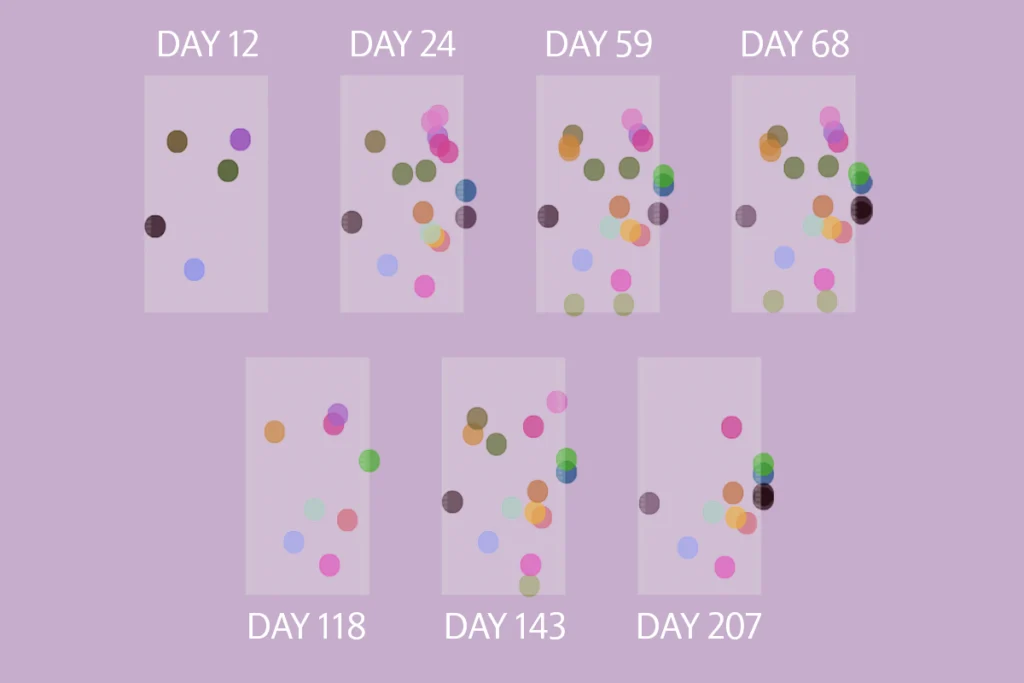

When researchers used more advanced statistical tools to take a closer look at single cortical neurons, they found that most didn’t ramp their activity while the dots were moving: Some increased their activity at seemingly arbitrary moments; others seemed to decrease their activity, even though the dots were moving in those neurons’ apparently preferred direction.

When some of the same team took a closer look at single trials of the moving dots, they reported that even the neurons that did increase their activity seemed to step up their activity at some point while the monkeys were dot-watching, not gradually increase it. With just a handful of spikes on each trial, distinguishing a step from a ramp in spiking requires some fancy computational models that try to infer the underlying implied firing rate. An argument raged back and forth about how well we can tell during a single trial if a single neuron steps rather than gradually increases its spikes.

The final answer eludes us: On single trials, some neurons’ spikes seem more step-like, other neurons’ spikes more ramp-like. But irrespective of how many neurons increased their rates or how many stepped or ramped in single trials, the problem of averaging is clear: Many single neurons do not look like the average “ramping” response and do not fit theory based on it.
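A toy simulation shows why an averaged ramp cannot settle the question. In the sketch below, every trial is a pure step, yet the average over trials rises gradually. The rates, bin size and uniformly random step times are assumptions chosen for illustration; this is not the analysis used in the studies described above.

```python
import numpy as np

rng = np.random.default_rng(1)

n_trials, n_bins = 200, 100      # 100 bins of 10 ms: one second of dot-watching (assumed)
dt = 0.01                        # bin width in seconds
low_rate, high_rate = 5.0, 40.0  # spikes/s, arbitrary illustrative values

# On each trial the firing rate jumps from low to high at one random bin.
# Uniformly distributed step times are an assumption of this sketch.
step_bins = rng.integers(10, 90, size=n_trials)
time_index = np.arange(n_bins)
rates = np.where(time_index[None, :] >= step_bins[:, None], high_rate, low_rate)

# Simulated spike counts per bin on each trial (Poisson, again an assumption).
spikes = rng.poisson(rates * dt)

# Every single-trial rate takes exactly two values: it is a step.
values_per_trial = [np.unique(r).size for r in rates]

# The trial-averaged rate passes through many intermediate values: it is a ramp.
mean_rate = rates.mean(axis=0)

# The same average estimated from the spikes, as a histogram would show it.
trial_avg_psth = spikes.mean(axis=0) / dt
```

Plotting `mean_rate` gives a smooth climb from 5 to 40 spikes per second, even though no simulated neuron on any simulated trial ever fired at an intermediate rate.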

Does that matter? After all, if the aggregate signal across those cortical neurons in the current moment is accumulating evidence, then maybe that’s enough. Maybe that’s all the brain cares about. The problem is that we don’t know.

Whenever most of us average neural activity over trials, over neurons or both, we are implicitly assuming that the resulting signal is what the rest of the brain sees. We’re hoping that all the variation of individual neurons is distributed across the whole population of neurons in such a way that their aggregate moment-to-moment signal is approximately the same as the signal we get by averaging the output of each neuron.
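That hope can be written down as a check on simultaneously recorded data. The sketch below is one hypothetical way to do it: the function `single_trial_match`, the simulator and all its parameters are invented for illustration. It compares each trial's population signal with the grand average in two simulated worlds, one where stepping neurons step independently of one another and one where they all step together.

```python
import numpy as np

def simulate(shared_step, n_trials=20, n_neurons=200, n_bins=50, seed=0):
    """Simulated spike counts, shape (n_trials, n_neurons, n_bins).

    Every neuron steps from a low to a high rate once per trial. If shared_step
    is True, all neurons in a trial step at the same bin; otherwise each neuron
    steps at its own random bin. All numbers here are arbitrary assumptions.
    """
    rng = np.random.default_rng(seed)
    if shared_step:
        step_bins = np.repeat(rng.integers(5, 45, size=(n_trials, 1)), n_neurons, axis=1)
    else:
        step_bins = rng.integers(5, 45, size=(n_trials, n_neurons))
    stepped = np.arange(n_bins)[None, None, :] >= step_bins[:, :, None]
    expected_counts = np.where(stepped, 0.8, 0.1)  # mean spikes per bin
    return rng.poisson(expected_counts)

def single_trial_match(spike_counts):
    """Per-trial correlation between the population's moment-to-moment signal
    and the average over both trials and neurons."""
    population_per_trial = spike_counts.mean(axis=1)   # (n_trials, n_bins)
    grand_average = population_per_trial.mean(axis=0)  # (n_bins,)
    return np.array([np.corrcoef(trial, grand_average)[0, 1]
                     for trial in population_per_trial])

independent = single_trial_match(simulate(shared_step=False))
shared = single_trial_match(simulate(shared_step=True))
```

In the independent world the variation is spread across neurons, so each trial's population signal closely tracks the average. In the shared world it does not, and the average describes no single moment the rest of the brain ever saw. Which world real cortex resembles is the open question.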

It’s weird that we don’t know if this assumption is true. Averaging is ubiquitous, central to our tools and part of the language. But we also know in our hearts that it is a matter of convenience, an easier way to think about the brain. Indeed, averaging spikes may just be an accident of history rather than a rational approach to understanding the brain, an artifact from the days when just getting a different response from a neuron to lines of different orientations was an amazing insight into how the brain worked. Before computer-generated graphics existed, a simple histogram of a neuron’s averaged response was the simplest thing to draw.

We now have the tools we need to find out if averaging is showing us something about the brain’s signals or is a misleading historical accident. With recordings of spikes from hundreds of neurons at the same time, we can ask whether the aggregate moment-to-moment signal of these neurons looks like the signal we get when we average over trials, neurons or both. Then we will know quite how much averaging is a convenient fiction of neuroscience, holding back our understanding of the brain.