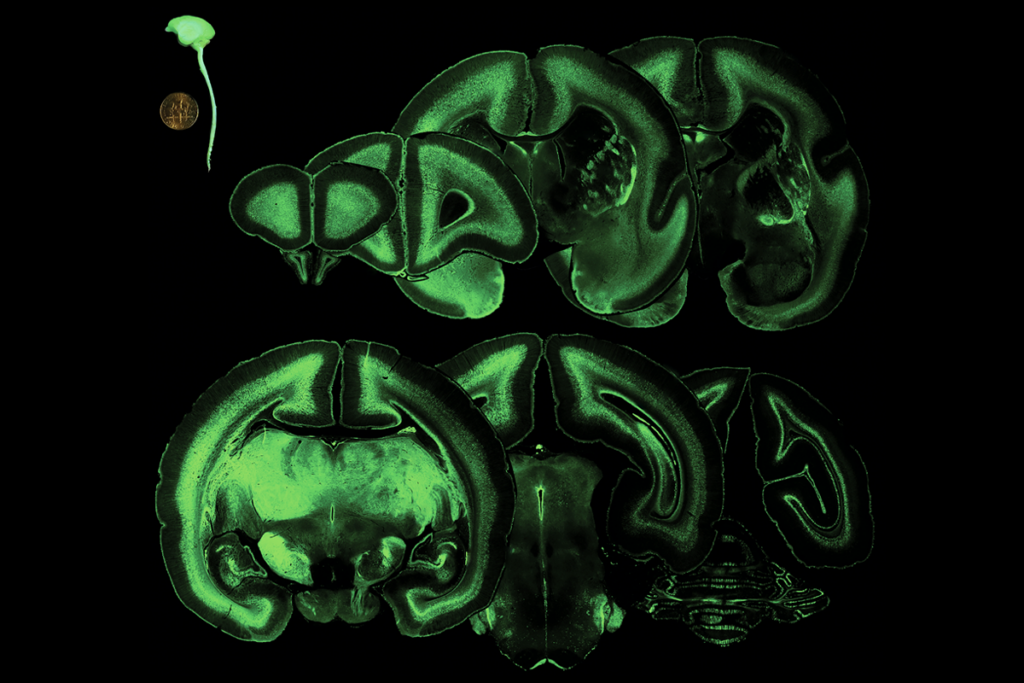

Enny van Beest wanted to watch how the representation of spatial information in a single neuron changes in different environments and conditions across the span of two weeks. So, as a test run, she recorded 10 days of neural data from Neuropixels probes implanted in a mouse. She set a computer to crunch the data and waited an entire weekend for the analysis to finish. Then her computer crashed. She tried analyzing the data over and over again, and every time, her computer crashed.

The algorithms she used “just couldn’t keep up” with the amount of data she had collected, says van Beest, a postdoctoral researcher in Matteo Carandini’s lab at University College London. “It was pretty clear we needed something else.”

Extended, or chronic, recordings are key to understanding how brain function changes over time, says Loren Frank, professor of physiology at the University of California, San Francisco. And averaging activity from cell populations may hide important features about the signals, so it is important to look at single neurons, van Beest says.

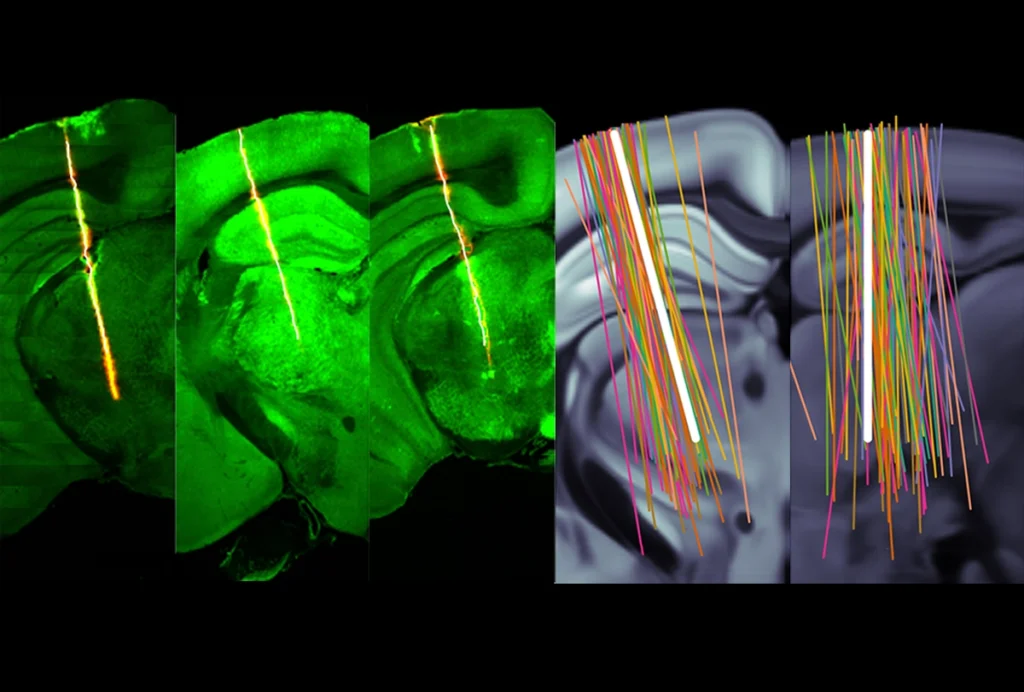

Researchers have efficiently recorded from single neurons for several weeks in primates and mice. But as van Beest and others have increasingly used high-density probes to simultaneously measure hundreds of single neurons in multiple brain areas over weeks or even months, the size of the resulting datasets has grown beyond what traditional analysis methods can handle, Carandini says. And tracking neurons during these longer recordings is difficult, as the probes can move around and lose contact with the cells, a problem called drift. “All these methods essentially fail when you have Neuropixels probes,” he says.

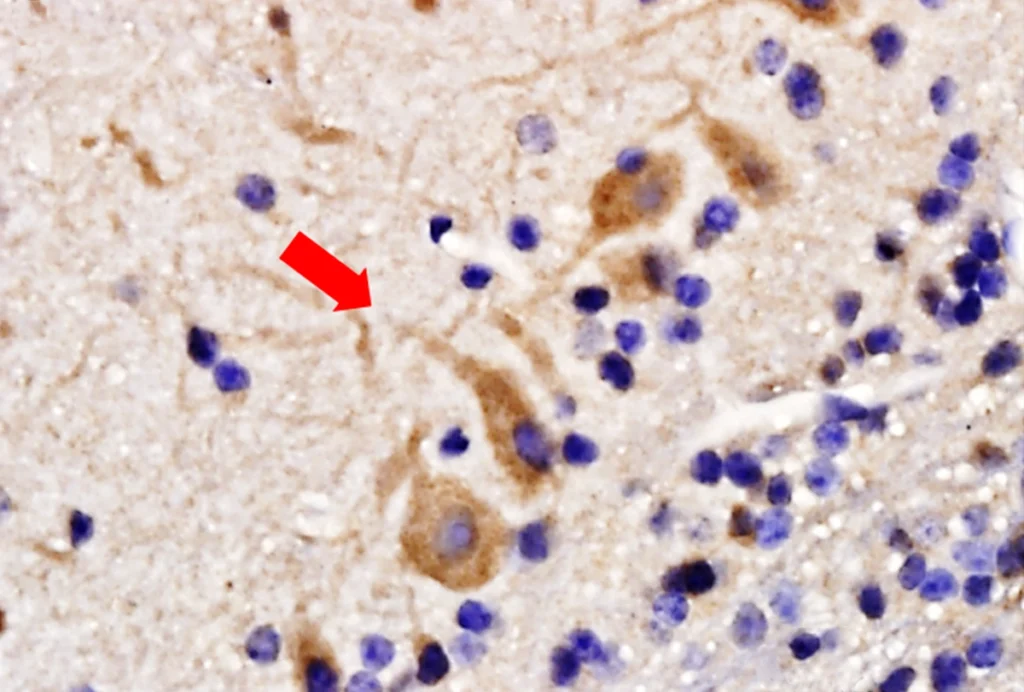

Traditional analyses start by pooling all the data files, which is computationally demanding. So Carandini, van Beest and their colleagues developed an algorithm that analyzes individual data files first and then combines the results, a method they describe in a new study published in September in Nature Methods. The algorithm, called UnitMatch, identifies neurons by combining each neuron’s unique electrical signature, its spike waveform shape, with the location of the signal on the probe. Matches can then be validated by stable functional properties, such as the inter-spike interval, or the time between consecutive action potentials.
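To make the matching idea concrete, here is a minimal Python sketch of scoring candidate neuron pairs across two sessions. It is not UnitMatch’s implementation: the `Unit` record, the `match_score` formula (waveform correlation weighted by an exponential falloff with distance along the probe), the 20-micrometer length scale, the 0.5 threshold and the greedy one-to-one assignment are all illustrative assumptions. The last two functions show one way to compare inter-spike-interval histograms as a validation check.

```python
import math
from dataclasses import dataclass


@dataclass
class Unit:
    # Hypothetical per-unit summary from one spike-sorted session.
    waveform: list[float]  # mean spike waveform (single channel, for simplicity)
    depth_um: float        # estimated position of the signal along the probe


def pearson(a: list[float], b: list[float]) -> float:
    """Pearson correlation of two equal-length sequences (0.0 if either is flat)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return 0.0 if sa == 0 or sb == 0 else cov / (sa * sb)


def match_score(u: Unit, v: Unit, depth_scale_um: float = 20.0) -> float:
    """Illustrative score in [0, 1]: similar shape AND nearby location score high."""
    shape = (pearson(u.waveform, v.waveform) + 1) / 2  # rescale [-1, 1] to [0, 1]
    proximity = math.exp(-abs(u.depth_um - v.depth_um) / depth_scale_um)
    return shape * proximity


def match_units(day1: list[Unit], day2: list[Unit], threshold: float = 0.5) -> dict[int, int]:
    """Greedy one-to-one matching of units across two sessions by descending score."""
    candidates = sorted(
        ((match_score(a, b), i, j) for i, a in enumerate(day1) for j, b in enumerate(day2)),
        reverse=True,
    )
    matches: dict[int, int] = {}
    used_j: set[int] = set()
    for score, i, j in candidates:
        if score < threshold:
            break  # remaining candidates score even lower
        if i in matches or j in used_j:
            continue  # each unit may be matched at most once
        matches[i] = j
        used_j.add(j)
    return matches


def isi_histogram(spike_times_s: list[float], edges_s: list[float]) -> list[int]:
    """Count inter-spike intervals falling into each [edge_k, edge_k+1) bin."""
    counts = [0] * (len(edges_s) - 1)
    for t0, t1 in zip(spike_times_s, spike_times_s[1:]):
        isi = t1 - t0
        for k in range(len(counts)):
            if edges_s[k] <= isi < edges_s[k + 1]:
                counts[k] += 1
                break
    return counts


def isi_similarity(times_a: list[float], times_b: list[float], edges_s: list[float]) -> float:
    """Validation check: correlate the ISI histograms of two putative matches."""
    return pearson(isi_histogram(times_a, edges_s), isi_histogram(times_b, edges_s))
```

In this toy scoring, a unit whose waveform keeps its shape but shifts a few micrometers between days scores well above the threshold, while a unit hundreds of micrometers away scores near zero regardless of shape. Multiplying the two terms means both cues must agree, which is one simple way to guard against drift producing false matches.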

UnitMatch could help researchers better understand how neural representations change over long periods of time, says Ilana Witten, professor of psychology and neuroscience at Princeton University, who has started using the approach in her own lab. And for cases in which a study does not yield enough trials or data per day, the new approach could simplify the combining of multiple days of data, she adds. “So far, we’ve been really impressed.”

B

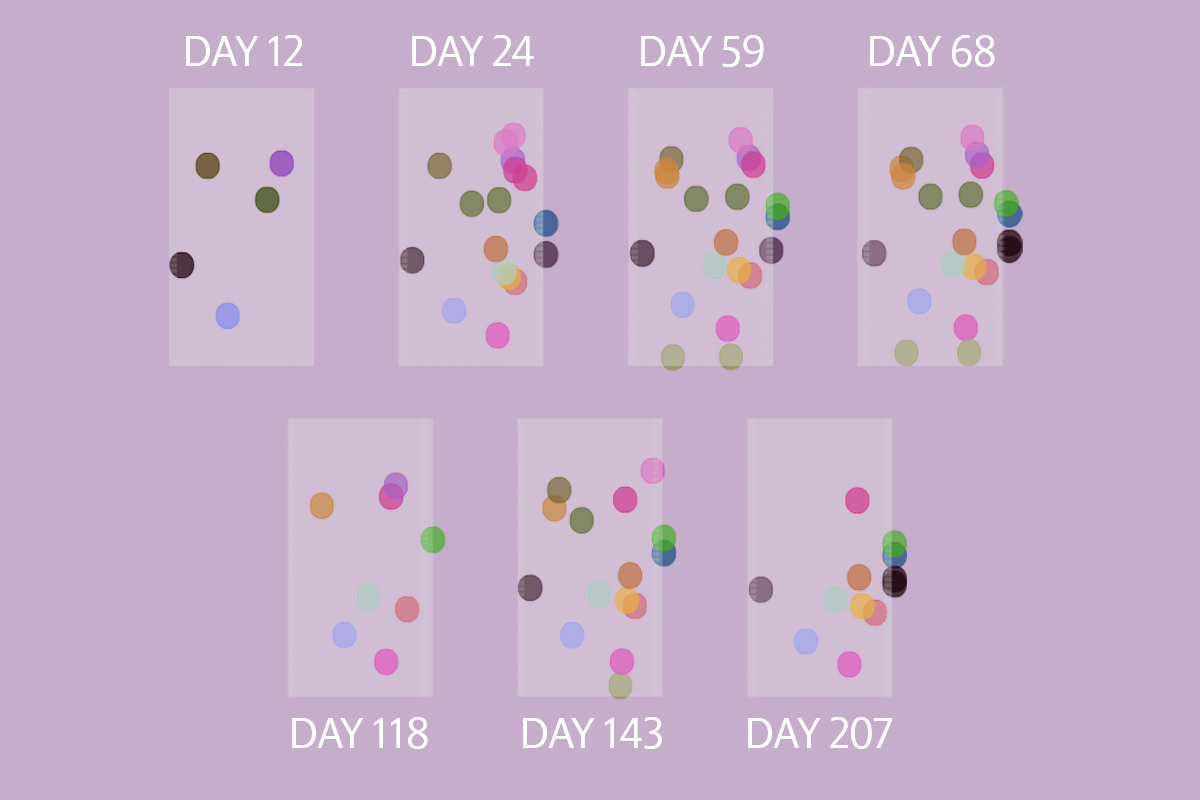

efore UnitMatch, van Beest had been trying to combine multiple days of recording data into one large dataset and then track neurons using an approach called Kilosort. “Kilosort is great at spike sorting individual sessions but is not intended for tracking neurons,” she says. “That was the start of trying to make a new algorithm to solve this problem more efficiently.”Using UnitMatch, she and Carandini successfully tracked up to 30 percent of their initial single neurons for more than 100 days. One neuron they tested in the visual cortex responded consistently to a visual stimulus of natural images across days. And in a visuomotor learning paradigm, neurons revealed a variety of responses: Some responded strongly to the two visual stimuli, whereas others responded to only one, highlighting how the approach can help researchers identify unique neuronal characteristics across long recording periods.

Because UnitMatch processes all recordings from the same recording site at the same time, it can go through data faster. For example, it successfully analyzed 22 recordings across five mice in 25 minutes—a task that took another algorithm, Earth Mover’s Distance, eight hours, the team found. And thanks to its one-stop processing, UnitMatch can also track neurons that disappear for one or a few recordings and then reappear, which would be impossible for serial tracking methods such as Earth Mover’s Distance.
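Why processing all sessions together helps can be illustrated with a small union-find sketch. It assumes pairwise unit matches are already available for every pair of sessions, however they were obtained; the `pair_matches` layout and `build_tracks` function are hypothetical, not UnitMatch’s code. Merging all-pairs matches links a neuron seen in sessions 0 and 2 even though it was missed in session 1, whereas consecutive-session matches alone, as in serial tracking, never form that link.

```python
Node = tuple[int, int]  # (session index, unit index within that session)


def build_tracks(pair_matches: dict[tuple[int, int], dict[int, int]]) -> list[list[Node]]:
    """Merge pairwise unit matches into tracks with a union-find structure.

    pair_matches maps (session_a, session_b) -> {unit_in_a: unit_in_b}.
    Returns one sorted list of (session, unit) nodes per tracked neuron.
    """
    parent: dict[Node, Node] = {}

    def find(x: Node) -> Node:
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    def union(x: Node, y: Node) -> None:
        rx, ry = find(x), find(y)
        if rx != ry:
            parent[ry] = rx

    for (sa, sb), matches in pair_matches.items():
        for ua, ub in matches.items():
            union((sa, ua), (sb, ub))

    tracks: dict[Node, list[Node]] = {}
    for node in list(parent):
        tracks.setdefault(find(node), []).append(node)
    return [sorted(t) for t in tracks.values()]


# Neuron X is unit 0 in session 0, undetected in session 1, unit 2 in session 2.
all_pairs = {(0, 1): {1: 0}, (1, 2): {0: 1}, (0, 2): {0: 2, 1: 1}}
serial_only = {(0, 1): {1: 0}, (1, 2): {0: 1}}

print(sorted(build_tracks(all_pairs)))    # → [[(0, 0), (2, 2)], [(0, 1), (1, 0), (2, 1)]]
print(sorted(build_tracks(serial_only)))  # → [[(0, 1), (1, 0), (2, 1)]]
```

With only consecutive-session links, neuron X’s two sightings are never joined, which is the gap the all-sessions approach closes.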

One limitation of UnitMatch is that it assumes that a neuron’s properties remain stable, Frank says. “We don’t know for sure that [those features] can’t change as neurons evolve,” he says. “If those assumptions are right, great; if they’re not, maybe one gets into trouble.”

For example, a neuron’s firing rate might change in the context of learning, which would make it an unreliable variable to track cells, says Tim Harris, professor of biomedical engineering at Johns Hopkins University and a pioneer of Neuropixels systems.

Ultimately, the problem is that there is no ground truth, says Sebastian Haesler, professor of neuroscience at KU Leuven, who was not involved in the new work. With imaging technologies, researchers can visualize a neuron and confirm that it’s the same one throughout an experiment, but that is, as of yet, impossible to do with just electrophysiological recordings, he says.

As the field continues to collect more and more data, however, new problems will arise—and scientists will need to develop new tools to address them. A team recently established benchmarks to standardize pooled Neuropixels datasets, for example. Anne Churchland, professor of neurobiology at the University of California, Los Angeles, who led that effort, says she plans to use UnitMatch on the data collected from a new device her lab has been working on that can track multi-area recordings for up to 300 days.

The real advances will come with comparing how well these different approaches perform, Haesler says.

“We need to throw all our data at this stuff, then analyze it with different methods and see where the differences and similarities are,” he says. “It’s not about right or wrong, or winner or loser. But it’s actually about understanding what are the parameters and reasons why one works better. So from this, we will actually learn a lot about the ground truth.”