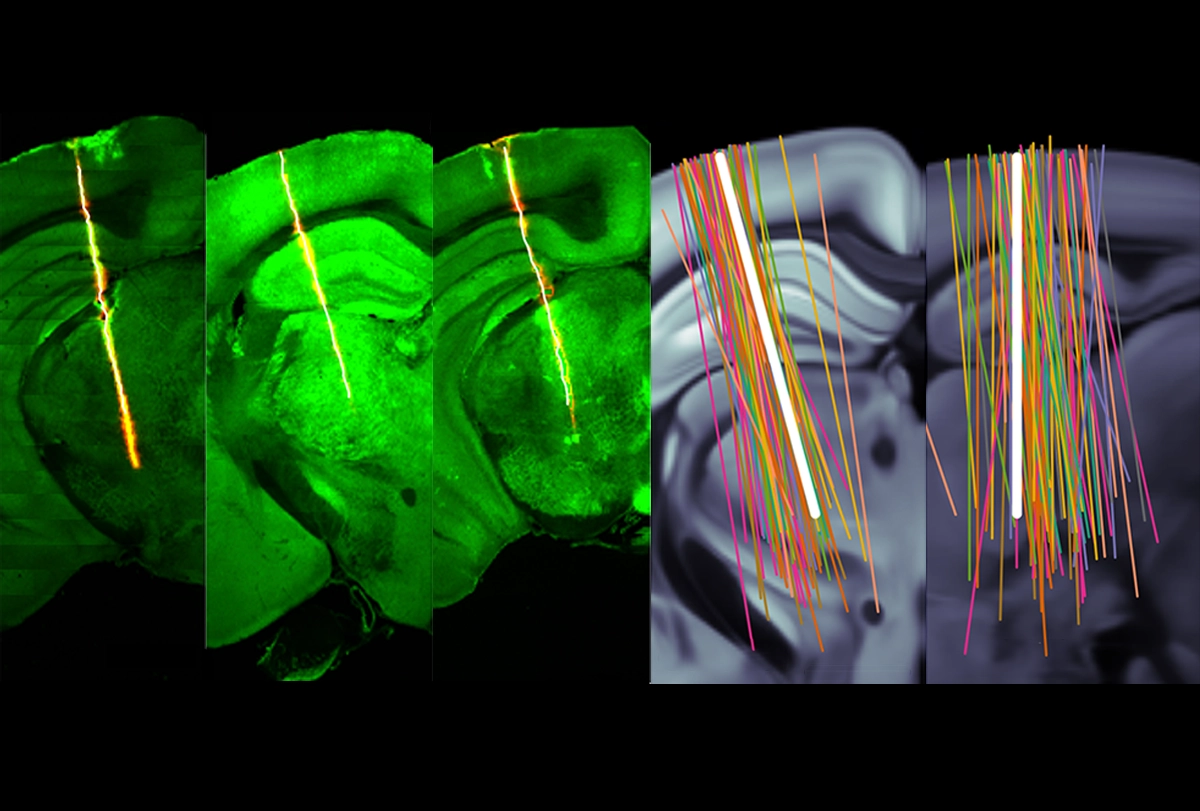

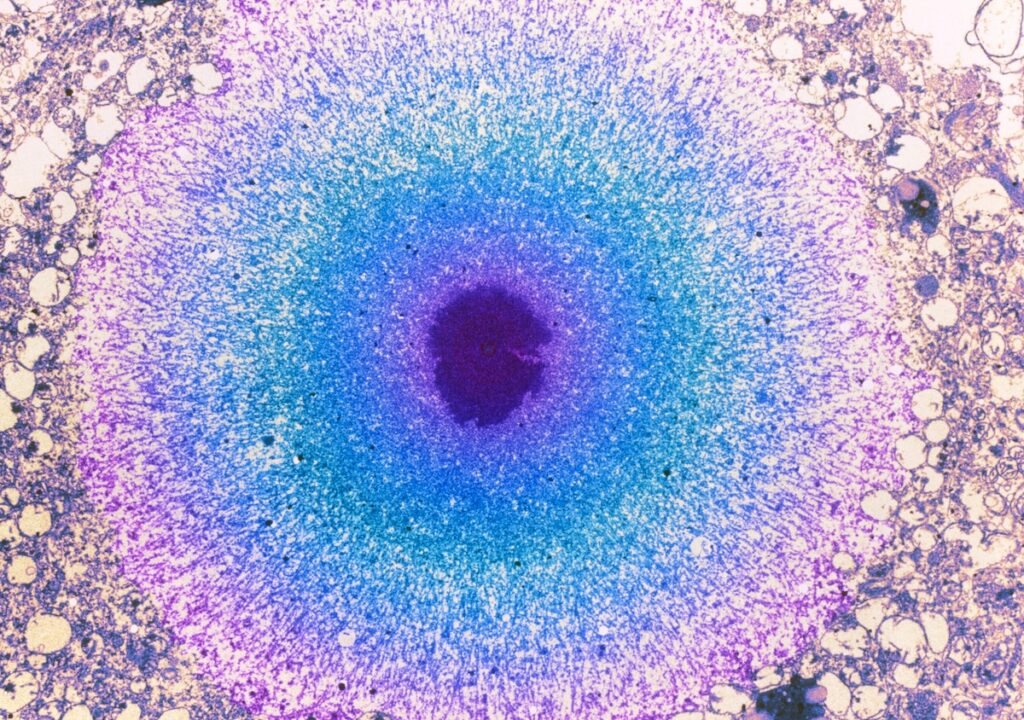

Five years ago, Anne Churchland and her colleagues in 10 other labs set themselves an ambitious task. They wanted to create a vast, pooled dataset of electrophysiology recordings from the same brain location in head-fixed mice performing a visual decision-making task. To try to standardize their efforts, they all used Neuropixels probes and identical surgical techniques and animal-training protocols.

But as the data flowed in, the question arose: “How can we really make sure that it’s going to be okay to pool the data in the way that we plan to do?” says Churchland, professor of neurobiology at the University of California, Los Angeles.

So they introduced a baseline set of metrics across all the labs to analyze their data and, as that pool increased, continued to refine and add to the criteria. Those revisions ultimately gave birth to a master list of 10 new quality-control standards for electrophysiology recordings, detailed in a preprint posted to bioRxiv last month.

The standards, called Recording Inclusion Guidelines for Optimizing Reproducibility (RIGOR), can increase lab-to-lab data reproducibility by helping researchers determine whether and how to analyze data from single neurons or an entire recording session, according to the preprint. And the standards apply to all such data, regardless of the type of recording array, animal model or recording location used, Churchland says.

The teams behind the metrics participate in the International Brain Laboratory, an open-science collaboration that aims to understand and map the brain-wide circuits that support decision making. (The collaboration is funded in part by the Simons Foundation, The Transmitter’s parent organization.)

Currently, few research papers report any metrics for how electrophysiology recordings are analyzed, says Drew Headley, assistant professor of molecular and behavioral neuroscience at Rutgers University, who was not involved in the work. Now, with the new set of 10 RIGOR metrics, “everyone can hone in on the same preprocessing and standards.”

T

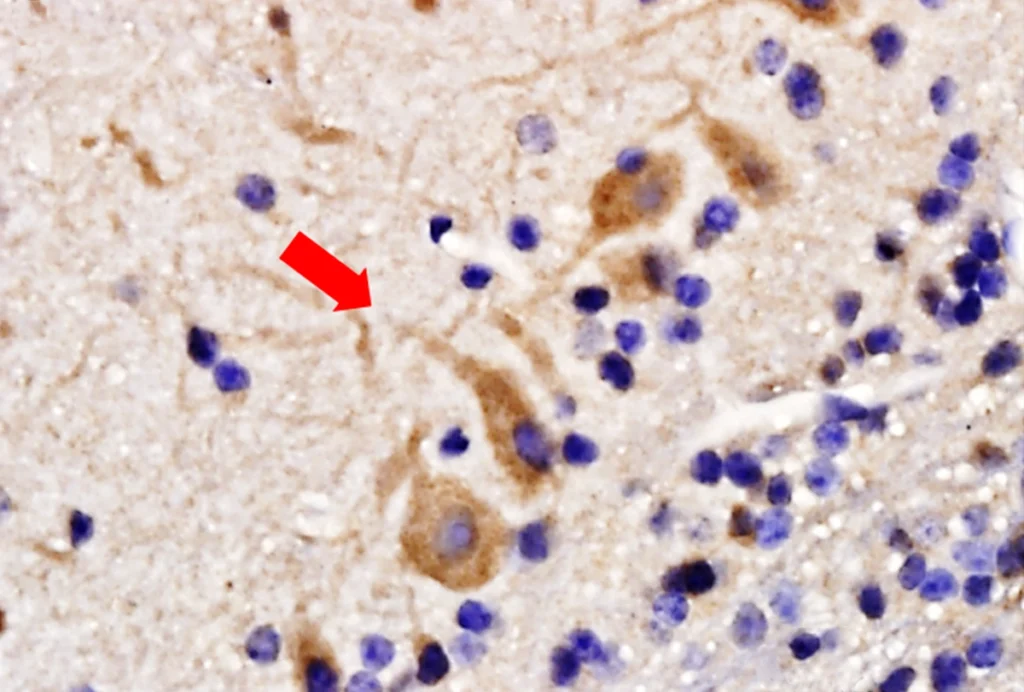

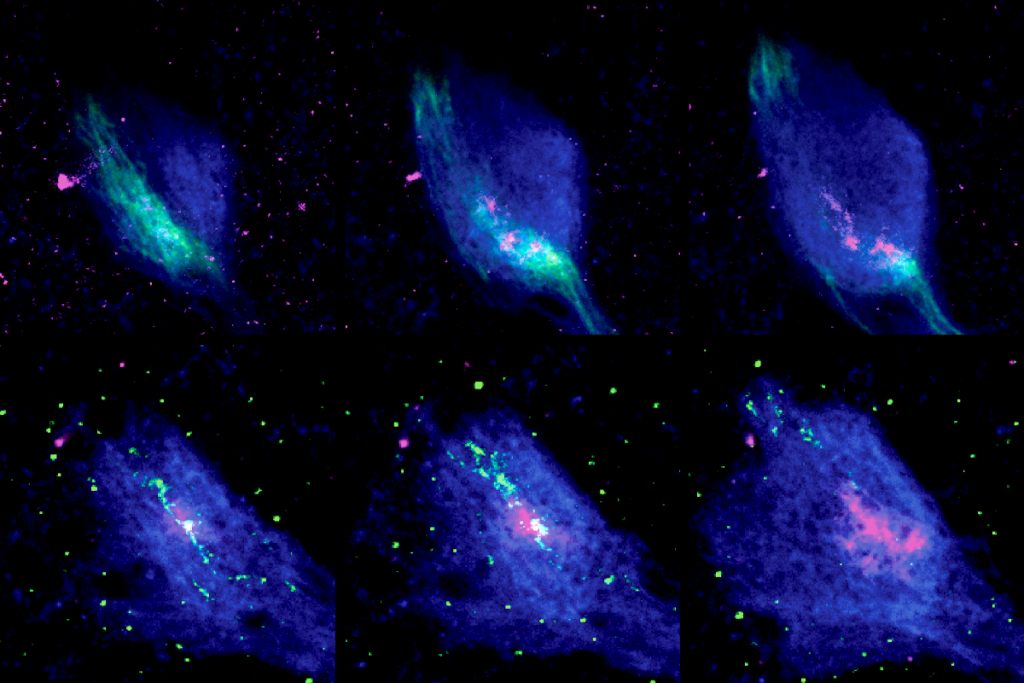

hree RIGOR metrics pertain to recordings of a single unit—the term for an action potential likely associated with a single neuron. These metrics are related to spikes and the refractory period length—the spike-free time after a neuron fires an action potential—and can reveal whether spikes from two neurons are mistakenly grouped together.The remaining 7 RIGOR metrics apply to whole recording sessions that have more than 20 electrode channels. Some of these metrics, such as yield—the number of recorded neurons per channel in each brain region—are computed, whereas others, including epileptiform activity and artifacts, can be visually assessed.
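To make the refractory-period idea concrete, here is a minimal Python sketch of one generic check. It is not the RIGOR metric itself, whose exact formula is not described here. It counts how often consecutive spikes in a putative unit fall closer together than an assumed refractory period. A single neuron should rarely produce such short intervals, so a high violation fraction hints that spikes from more than one neuron were merged. The 1.5 ms refractory period, the 1% tolerance and the function names are all hypothetical, chosen for illustration.

```python
# Hypothetical defaults for illustration only; not values from the RIGOR preprint.
REFRACTORY_PERIOD_S = 0.0015   # assumed 1.5 ms refractory period
MAX_VIOLATION_FRACTION = 0.01  # assumed tolerance: at most 1% of intervals may violate


def refractory_violation_fraction(spike_times_s, refractory_period_s=REFRACTORY_PERIOD_S):
    """Return the fraction of inter-spike intervals shorter than the refractory period.

    spike_times_s: spike times (in seconds) assigned to one putative unit.
    """
    times = sorted(spike_times_s)
    if len(times) < 2:
        return 0.0  # no intervals, so nothing can violate
    intervals = [later - earlier for earlier, later in zip(times, times[1:])]
    violations = sum(1 for isi in intervals if isi < refractory_period_s)
    return violations / len(intervals)


def passes_refractory_check(spike_times_s,
                            refractory_period_s=REFRACTORY_PERIOD_S,
                            max_violation_fraction=MAX_VIOLATION_FRACTION):
    """True if the unit's violation fraction is within the (assumed) tolerance."""
    fraction = refractory_violation_fraction(spike_times_s, refractory_period_s)
    return fraction <= max_violation_fraction


if __name__ == "__main__":
    clean_unit = [0.000, 0.010, 0.025, 0.040]
    merged_unit = [0.000, 0.0005, 0.010, 0.0105, 0.020]  # two near-coincident pairs
    print(refractory_violation_fraction(clean_unit))   # 0.0
    print(refractory_violation_fraction(merged_unit))  # 0.5
    print(passes_refractory_check(merged_unit))        # False
```

In the merged example, two of the four intervals are 0.5 ms apart, far shorter than the assumed refractory period, which is the kind of pattern such a check is meant to flag.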

Churchland’s team applied the RIGOR metrics to 121 experimental sessions and found that 82 passed muster. Those sessions included recordings from more than 5,300 neurons.

RIGOR is meant to be a set of benchmarks and not “written in stone,” Churchland says, although the group does attach a number to several of the criteria, including yield. Recording sessions should be included if yield is at least 0.1, which equates to 38 neurons across the 384 recording channels on a Neuropixels probe, the study reports.
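The yield criterion comes down to simple arithmetic: neurons divided by channels, compared against a cutoff. The Python sketch below assumes a plain neurons-per-channel ratio and a `>=` comparison. The function names and the region label `region_B` are hypothetical, and the only numbers taken from the article are the 0.1 threshold and the 384 channels. Because 0.1 × 384 = 38.4, the figure of 38 neurons is a rounded value. Under the strict `>=` test used here, 38 neurons gives a yield of about 0.099, so 39 is the first count that clears the bar.

```python
# Figures cited in the article; everything else here is an illustrative assumption.
NEUROPIXELS_CHANNELS = 384
YIELD_THRESHOLD = 0.1


def session_yield(n_neurons, n_channels=NEUROPIXELS_CHANNELS):
    """Yield as neurons per recording channel."""
    if n_channels <= 0:
        raise ValueError("n_channels must be positive")
    return n_neurons / n_channels


def passes_yield(n_neurons, n_channels=NEUROPIXELS_CHANNELS, threshold=YIELD_THRESHOLD):
    """Assumed inclusion rule: keep the session if yield >= threshold."""
    return session_yield(n_neurons, n_channels) >= threshold


def yield_by_region(neurons_per_region, channels_per_region):
    """Per-region yield for regions that have at least one channel."""
    return {
        region: count / channels_per_region[region]
        for region, count in neurons_per_region.items()
        if channels_per_region.get(region, 0) > 0
    }


if __name__ == "__main__":
    print(YIELD_THRESHOLD * NEUROPIXELS_CHANNELS)  # roughly 38.4 neurons
    print(passes_yield(38))  # False: 38 / 384 is about 0.099
    print(passes_yield(39))  # True: 39 / 384 is about 0.102
    # "region_B" is a hypothetical region name used only for illustration.
    print(yield_by_region({"CA1": 12, "region_B": 5}, {"CA1": 60, "region_B": 100}))
```

Computing yield per region rather than per probe mirrors the article's definition, and it also reflects Harris's objection: a quiet region can fall below any fixed cutoff even when its few neurons are well recorded.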

But Neuropixels originator Tim Harris questions the use of a specific number for yield, or even the idea of yield at all. Some brain areas are quieter than others, says Harris, a senior fellow who leads the applied physics and instrumentation group at the Howard Hughes Medical Institute’s Janelia Research Campus. “Now, if I saw one neuron that had reasonable amplitude in that quiet area, I’d go ahead and accept that,” he says.

E

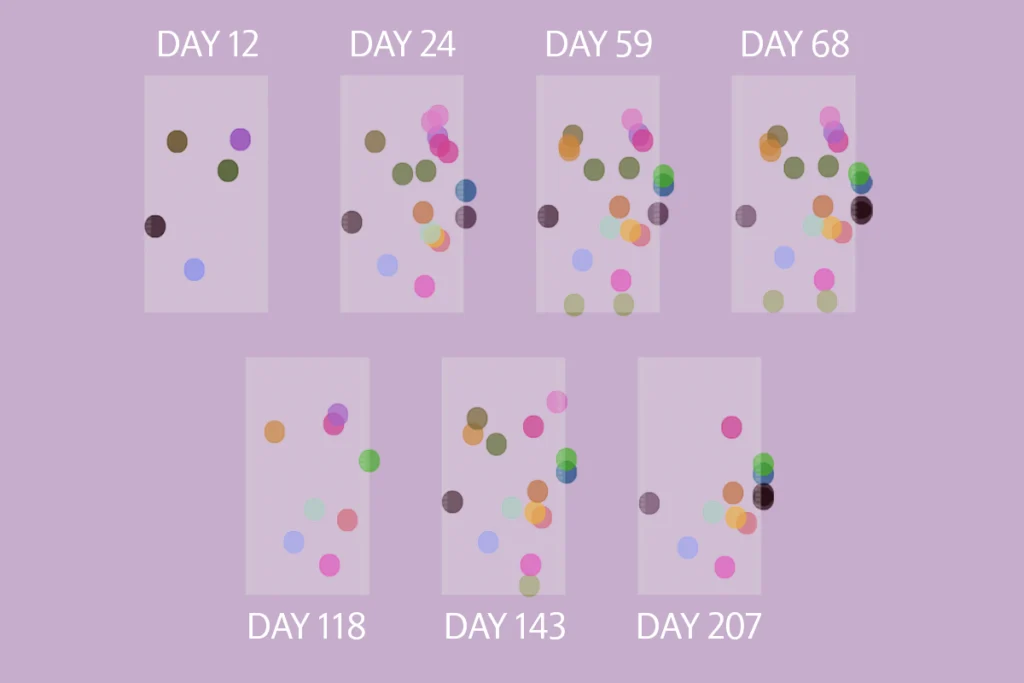

ven after applying RIGOR, other factors still introduced variability to the data Churchland and her colleagues collected, the study reports.For instance, the response of individual neurons varied across recording sessions within a single lab, which made it difficult to replicate single-neuronal results across labs. But aggregate data across labs clearly showed trends in some brain regions, such as an increase in the firing rate of neurons in the CA1 region of the hippocampus soon after an animal moves. “I don’t think it’s a hopeless enterprise,” Churchland says of single-neuron analysis, “but I think people need to collect larger data sets, and they need to be more aware of the limitations.”

The team discovered another source of variability while revising their preprint: software. A journal rejected an earlier version of the preprint in 2022 because the reported neuronal yield was lower than that reported in two previous studies that used Neuropixels in a similar experiment, Churchland says. Reviewers questioned the team’s ability to set standards for Neuropixels recordings if they were “unskilled experimenters,” she recalls.

Her team finally traced the yield issue to labs using different versions of Kilosort, an automatic spike-sorting software package, she says. When they reanalyzed the data with the same version of the tool, they got similar neuronal yields.

As the quantity of electrophysiology data continues to grow, Churchland says she hopes the RIGOR standards will appear in future papers “to provide the reviewers with reassurance that you’re tapping into an accepted set of standards that the community feels are a reasonable starting point.”