When do neural representations give rise to mental representations?

To answer this question, consider the animal’s umwelt, or what it needs to know about the world.

It is often said that “the mind is what the brain does.” Modern neuroscience has indeed shown us that mental goings-on rely on and are in some sense entailed by neural goings-on. But the truth is that we have a poor handle on the nature of that relationship. One way to bridge that divide is to try to define the relationship between neural and mental representations.

The basic premise of neuroscience is that patterns of neural activity carry some information — they are about something. But not all such patterns need be thought of as representations; many of them are just signals. Simple circuits such as the muscle stretch reflex or the eye-blink reflex, for example, are configured to respond to stimuli such as the lengthening of a muscle or a sudden bright light. But they don’t need to internally represent this information — or make that information available to other parts of the nervous system. They just need to respond to it.

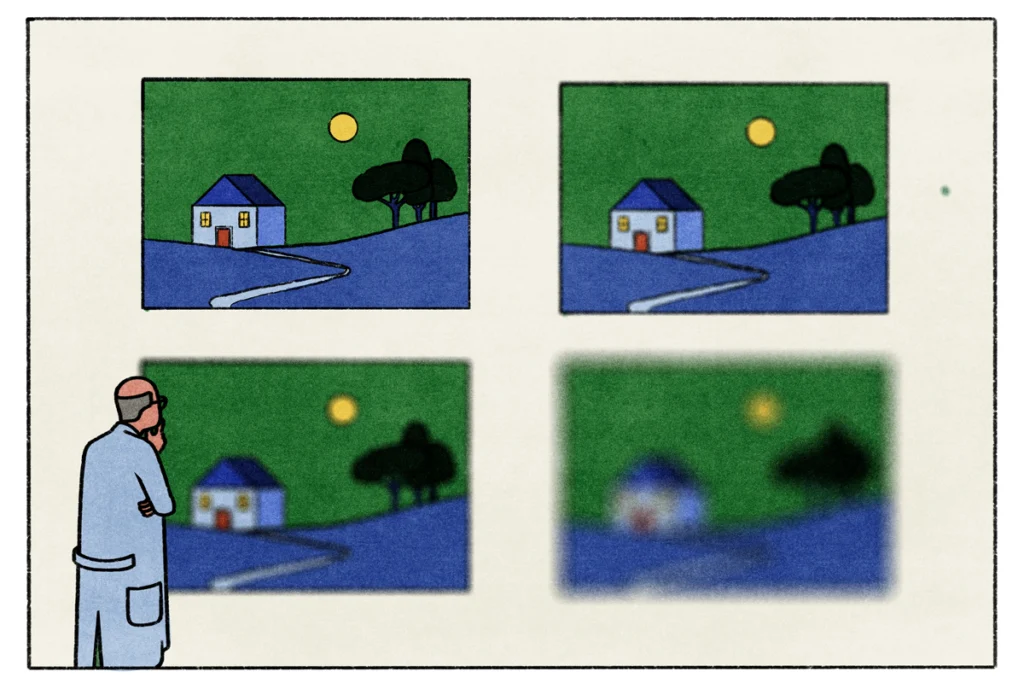

More complex information processing, by contrast, such as in our image-forming visual system, requires internal neural representation. By integrating signals from multiple photoreceptors, retinal ganglion cells carry information about patterns of light in the visual stimulus — particularly edges where the illumination changes from light to dark. This information is then made available to the thalamus and the cortical hierarchy, where additional processing goes on to extract higher- and higher-order features of the entire visual scene.

Scientists have elucidated the logic of these hierarchical systems by studying the types of stimuli to which neurons are most sensitively tuned, known as “receptive fields.” If some neuron in an early cortical area responds selectively to, say, a vertical line in a certain part of the visual field, the inference is that when such a neuron is active, that is the information that it is representing. In this case, it is making that information available to the next level of the visual system — itself just a subsystem of the brain.

Usually, patterns of neural activity across local populations represent such information. And crucially, the populations and circuits that interpret such representations are causally sensitive to the meaning of those macroscale patterns, rather than to the details of the instantaneous neural instantiations.

But does that activity of representing imply that the pattern is a representation of the type envisaged in cognitive science — i.e., a distinct cognitive object? At what point do intermediate neural representations give rise to high-level mental representations? When does distributed, devolved signal processing become centralized cognition? A good way to approach this question is to ask: When does it need to?

The point of perceptual information processing systems is ultimately to allow the organism to know what is out in the world and what the organism should do about it. Any given scenario will involve myriad factors and relationships, including dynamically changing arrays of threats and opportunities, too many to be hard-coded into reflexive responses. To guide flexible behavior, sensory information and information about internal states have to be submitted to a central cognitive system so this information can be adjudicated over in a common space. Such a system can and should operate only with some kinds of information: information at the right level.

We don’t want to think about the photons hitting our retina, even though our neural systems are detecting and processing that information. That’s not the right level of information to link to stored knowledge of the world or to combine with data from other sensory modalities. What we need to think about are the objects in the world that these photons are bouncing off of before they reach our eyeballs. We, as behaving organisms, need to cognitively work with our perceptual inferences, not our sensory data.

For any organism, we can ask two related questions: What kinds of things can it think about? And what kinds of things should it think about? Here, an ecological approach is valuable. Jakob von Uexküll introduced the concept of the “umwelt” of an organism, which might be translated as its “experienced world.” This idea begins with the specific sensorium of the organism, which will vary among species and sometimes even individuals. Different organisms can smell different chemicals, for example, or detect different wavelengths of light or frequencies of sound, while being oblivious to many other factors in their environment.

But the umwelt also crucially entails valence, salience and relevance — it is a self-centered map of things in the environment that the organism can detect and that it cares about. Perception in this view is not the neutral, passive acquisition of information. It is an active process of sense-making, which results in a highly filtered, value-laden, action-oriented landscape of affordances. If an organism is thinking at all, these are the things that it needs to think about.

Using the word “think” in this context invites all kinds of anthropomorphism, of course, and risks inflating to the level of cognition what might be better framed in cybernetic terms. But if cognition can be defined as using information to adaptively guide behavior in novel circumstances, then perhaps we can think of a continuum from simpler control systems to the more abstract cogitation that humans engage in. That is, after all, the trajectory that evolution had to follow. And if we allow that many animals have some kind of central arena where the highest-level inferences become the objects of cognition, we can ask what such inferences could be about.

That question may be difficult or even impossible to answer for animals incapable of self-report. But we may be able at least to say what various organisms can’t be thinking about. A nematode can’t be thinking about objects far away from it because it has no means to detect them. Its cognitive umwelt is consequently limited to the here and now. A lamprey can’t be thinking about types of objects because it doesn’t have enough levels of processing to abstract the requisite categorical relationships. And a human baby can’t think about next week because its cognitive horizon doesn’t extend that far.

Each animal’s potential cognitive umwelt — what it could think about — is thus limited not just by its sensory capabilities, but also by the levels of internal processing it has, as well as its capacity for long-term memory and long-term planning. But there are also active limits on what any organism does think about. All the low-level information processing gives rise to the objects of cognition, but in a highly selective, filtered fashion. High-level cognition is useful precisely because it ignores so much low-level detail — because of what’s not on your mind.

Thus, only a subset of neural representations — the meaningful elements that are processed by various neural subsystems — rise to the level of mental representations — the elements of cognition. Moreover, only a subset of those mental objects — a varying subset, depending on circumstances — may be things that we need to think about consciously. It’s thus too vague and all-inclusive to simply say, “The mind is what the brain does.” Our mental goings-on are more likely entailed by a dynamically shifting, adaptively filtered subset of neural goings-on.

Kevin Mitchell

Trinity College Dublin
