When neuroscientists study how behaviors or cognitive abilities are represented in the brain, they usually study them one by one. But that’s not how brains work. We know intuitively that learning builds on itself—you probably could not have learned algebra without first grasping arithmetic (even if you’ve forgotten it all now).

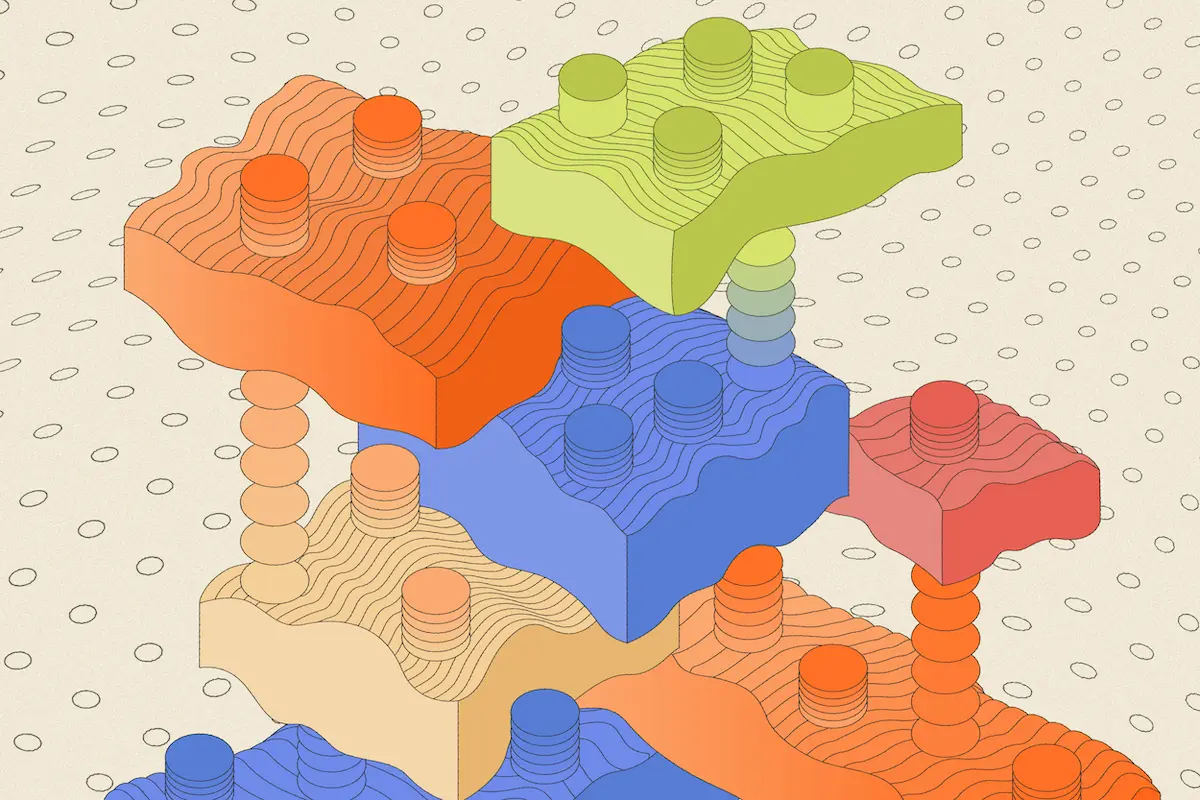

A neural network described in a new study suggests how such additive learning might happen. When the network learned multiple tasks—such as recalling something, responding to a stimulus as quickly as possible, or making a go/no-go decision—it found a strategy to break the tasks into modularized patterns of computation and then recombine them in different ways, as each task demanded.

These computational patterns, or “motifs,” essentially work like Lego bricks for learning—and they point to how neurons might form computational units within a large network, says Laura N. Driscoll. Driscoll, a senior scientist at the Allen Institute for Neural Dynamics, led the work as a postdoctoral researcher at Stanford University, supervised by David Sussillo, adjunct professor, and the late Krishna Shenoy, professor, both of electrical engineering.

“The fact that you are able to reuse these motifs and contexts basically starts to allow us to predict how animals might perform a task they have never seen before, based on tasks they have previously learned,” Driscoll says.

One of the brain’s key features is its ability to adapt its computations to a changing environment in real time. “I really think this [paper] is an absolutely crucial stepping stone toward the ultimate goal, which is figuring out how the brain actually performs dynamic computation,” says Mac Shine, associate professor of computational systems neurobiology at the University of Sydney who was not involved in the work. “This is a deeply hard problem—both for our brain to solve and for us to understand.”

D

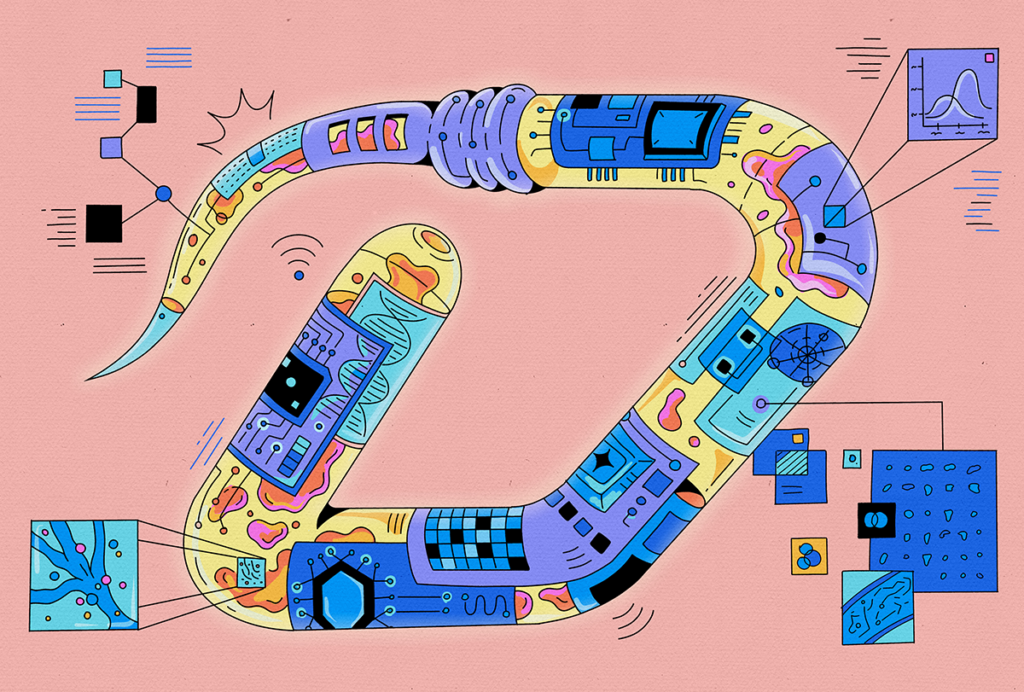

riscoll and their colleagues used a type of neural network called a recurrent neural network (RNN), in which a node’s outputs don’t just flow unidirectionally but can feed back into its inputs. The work builds on a 2019 study from another lab that trained an RNN to perform 20 related tasks.That study mapped a bird’s-eye view of these tasks’ relationships to each other, identifying clusters of nodes in the neural network that had computationally similar activity patterns. It showed that the network’s representations of similar aspects of different tasks remained similar. The current work instead set out to dissect exactly how the network learns these types of tasks. By analyzing the network as a system that changes as it learns—a so-called dynamical system—the researchers were able to identify the computations that were reused across tasks.
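To make the idea of feedback concrete, here is a minimal sketch of a standard “vanilla” RNN update in plain Python. It assumes a tanh nonlinearity and small hand-picked weights; all values are illustrative, not taken from the study. The hidden state computed at one time step is fed back in at the next, so the network’s activity depends on the history of its inputs, not just the current one.

```python
import math

def rnn_step(h, u, W_rec, W_in, b):
    """One update of a vanilla RNN: h_new = tanh(W_rec @ h + W_in @ u + b).

    h: previous hidden state (list of floats), fed back as input
    u: current external input (list of floats)
    """
    n = len(h)
    h_new = []
    for i in range(n):
        total = b[i]
        total += sum(W_rec[i][j] * h[j] for j in range(n))      # recurrent feedback
        total += sum(W_in[i][k] * u[k] for k in range(len(u)))  # external input
        h_new.append(math.tanh(total))
    return h_new

def run_rnn(inputs, W_rec, W_in, b, h0):
    """Run the network over a sequence of inputs, returning every hidden state."""
    h = h0
    states = []
    for u in inputs:
        h = rnn_step(h, u, W_rec, W_in, b)
        states.append(h)
    return states

# Illustrative 2-unit network with a single input channel (hypothetical weights).
W_rec = [[0.5, -0.3], [0.2, 0.4]]
W_in = [[1.0], [0.5]]
b = [0.0, 0.0]

# A brief input pulse followed by silence: the state keeps evolving after the
# pulse ends, because each step feeds the previous hidden state back in.
states = run_rnn([[1.0], [0.0], [0.0]], W_rec, W_in, b, h0=[0.0, 0.0])
```

Setting `W_rec` to all zeros turns this into a purely feedforward map: the state then drops back to zero as soon as the pulse ends.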

An RNN’s activity is in constant flux, but fixed points, states in which that activity would stop changing, act like the peaks and valleys that outline this dynamic landscape. The researchers mapped the fixed points that emerged as the network was trained on 15 tasks simultaneously and found that sets of fixed points, which they call motifs, emerged to carry out the computations needed for individual tasks. Some of these motifs were shared by tasks that had similarities.
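One widely used way to locate fixed points, an approach Sussillo helped popularize (though not necessarily the exact procedure of this study), is to treat them as the minima of a “speed” function q(h) = ½‖F(h) − h‖², where F is the network’s one-step update under a constant input. The sketch below runs plain gradient descent on q for a hypothetical 2-unit network; the weights, starting point, learning rate, and step count are assumptions chosen so that it converges.

```python
import math

# Hypothetical 2-unit network held under a constant input; the input's
# contribution is folded into the bias b.
W = [[0.5, -0.2], [0.1, 0.4]]
b = [0.3, -0.1]

def F(h):
    """One-step update: F(h) = tanh(W h + b)."""
    return [math.tanh(sum(W[i][j] * h[j] for j in range(2)) + b[i]) for i in range(2)]

def speed(h):
    """q(h) = 0.5 * ||F(h) - h||^2; it is zero exactly at a fixed point."""
    r = [f - x for f, x in zip(F(h), h)]
    return 0.5 * sum(ri * ri for ri in r)

def grad_speed(h):
    """Gradient of q: dq/dh_j = sum_i r_i * (dF_i/dh_j - delta_ij),
    using dF_i/dh_j = (1 - F_i^2) * W_ij for the tanh update."""
    f = F(h)
    r = [fi - x for fi, x in zip(f, h)]
    g = []
    for j in range(2):
        gj = sum(r[i] * (1.0 - f[i] ** 2) * W[i][j] for i in range(2)) - r[j]
        g.append(gj)
    return g

def find_fixed_point(h0, lr=0.5, steps=2000):
    """Plain gradient descent on q, starting from the state h0."""
    h = list(h0)
    for _ in range(steps):
        g = grad_speed(h)
        h = [x - lr * gx for x, gx in zip(h, g)]
    return h

h_star = find_fixed_point([1.0, -1.0])
```

Because this toy network is contracting, gradient descent finds its single stable fixed point. In a trained RNN, one would typically launch the search from many states visited during a task, which can also turn up unstable (saddle) points that simply running the network forward would never settle on.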

After the network had learned multiple tasks, it was able to reuse and recombine these motifs to accomplish seemingly similar new tasks later on, assembling the computational elements in an additive manner. For example, if two different tasks required working memory, the network would reuse a specific motif that stored the memory needed in both of them, Driscoll explains.

Additionally, when the team deleted specific clusters of nodes in a network trained on multiple tasks, it affected only the tasks that relied on motifs encoded by those clusters.
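The logic of that lesion experiment can be written down as simple bookkeeping. In the hypothetical sketch below, each task is listed with the motifs it relies on, and each motif with the cluster of units that encodes it; the task names, motif names, and cluster labels are invented for illustration and are not taken from the study. Removing a cluster is predicted to impair exactly the tasks that use a motif housed in that cluster.

```python
# Hypothetical mapping from tasks to the motifs they reuse.
TASK_MOTIFS = {
    "delayed_go": {"memory", "respond"},
    "reaction_time": {"respond"},
    "delayed_match": {"memory", "compare"},
}

# Hypothetical mapping from motifs to the clusters of units that encode them.
MOTIF_CLUSTER = {
    "memory": "cluster_A",
    "respond": "cluster_B",
    "compare": "cluster_C",
}

def predicted_impairments(lesioned_clusters):
    """Return the sorted list of tasks expected to suffer when clusters are removed.

    A task is affected if any motif it relies on lives in a lesioned cluster.
    """
    lesioned = set(lesioned_clusters)
    affected = [
        task
        for task, motifs in TASK_MOTIFS.items()
        if any(MOTIF_CLUSTER[m] in lesioned for m in motifs)
    ]
    return sorted(affected)
```

For example, `predicted_impairments(["cluster_A"])` returns `["delayed_go", "delayed_match"]` and leaves `reaction_time` intact, because only the first two tasks rely on the shared memory motif.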

The idea that different tasks can co-opt or co-use the same fixed points in neural networks or the same groups of neurons in the brain reflects an emerging concept that neuroscientists call compositionality. “I don’t think that’s necessarily a surprising concept, but it’s nevertheless been hard to study both experimentally and computationally,” says Dean Buonomano, professor of neurobiology at the University of California, Los Angeles.

“Certainly, the finding of how this might occur in neural networks—and showing that it occurs in recurrent neural networks—is indeed quite novel,” he adds.

When the researchers pretrained the network on a set of tasks and then introduced a new task that varied slightly from them, the network was able to reuse the previously learned motifs for the new task. But a network without relevant pretraining struggled to learn the new task, and its learning outcomes varied. In some cases, after extended training, it was able to modify the motifs to perform the novel task.

“You are accumulating these motifs that are like building blocks of knowledge,” Driscoll says. “You can take in a new input and reconfigure your previously learned knowledge in a way that enables you to do a novel task you’ve never seen before.”

Sussillo, who is also a senior research manager at Meta’s Reality Labs, compares such motifs to kitchen items you might use to prepare a meal. Depending on what you are cooking, you might reach for a knife, a cutting board, a spatula or a measuring cup—or some combination thereof. And if you know how to flip an egg in a pan, you probably know how to flip a crepe, too.

In identifying motifs as computational features, “we ran that [notion] to ground,” Sussillo says. “What is the thing that is reused in a network? I believe for the first time we made that concrete.”

U

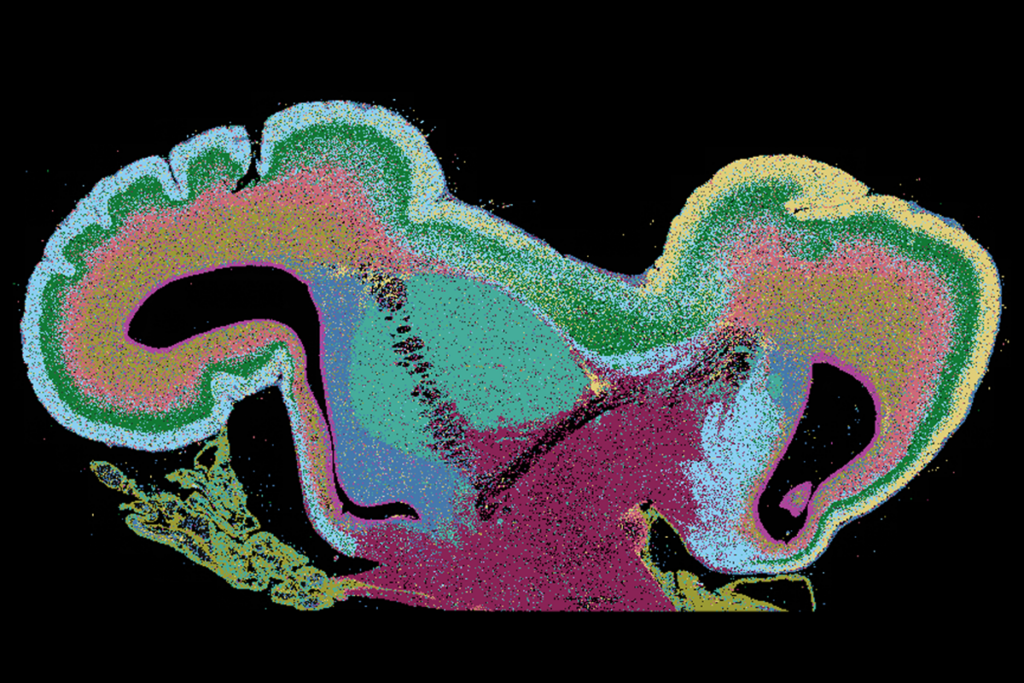

nderstanding how a neural network breaks tasks down into components may shed light on how the brain does so. “The big hypothesis is that curriculum learning happens through reuse of these motifs,” Driscoll says. That means the order in which an animal learns certain tasks would affect both the speed with which it learns and the strategies it employs to do so—both ideas Driscoll now plans to test in mice.The learning that emerges in neural networks—and in behaviors that animals display—are highly input-dependent, and how well the motifs that pop out in neural networks align with components of learning in animals remains to be seen, Shine says. But researchers can look through data on brain activity in mice performing similar cognitive tasks to see if those data hold patterns similar to those that emerged in the recurrent neural network.

“Odds are, how a mouse solves the problem is going to be far more complicated than a simple recurrent neural network,” he says. “But the fact that we even have a hint of what to look for is a huge step.”

The work raises important yet testable questions about how computations in RNNs might translate to animals’ brains, says Kanaka Rajan, associate professor of neurobiology at Harvard Medical School. Motifs develop when a network—and maybe an animal—learns specific tasks, but it’s unclear how they would come into play in a more naturalistic setting, such as when a mouse is just hanging out in its home cage.

Additionally, RNNs represent “disembodied bags of neurons,” but animals doing tasks in the lab have bodies that exist in the physical world, Rajan says. “If I put the same network in an embodied architecture, will it run a robot?”