Large language models

Recent articles

How artificial agents can help us understand social recognition

Neuroscience is chasing the complexity of social behavior, yet we have not answered the simplest question in the chain: How does a brain know “who is who”? Emerging multi-agent artificial intelligence may help accelerate our understanding of this fundamental computation.

The BabyLM Challenge: In search of more efficient learning algorithms, researchers look to infants

A competition that trains language models on relatively small datasets of words, closer in size to what a child hears up to age 13, seeks solutions to some of the major challenges of today’s large language models.

‘Digital humans’ in a virtual world

By combining large language models with modular cognitive control architecture, Robert Yang and his collaborators have built agents that are capable of grounded reasoning at a linguistic level. Striking collective behaviors have emerged.

Are brains and AI converging?—an excerpt from ‘ChatGPT and the Future of AI: The Deep Language Revolution’

In his new book, to be published next week, computational neuroscience pioneer Terrence Sejnowski tackles debates about AI’s capacity to mirror cognitive processes.

Explore more from The Transmitter

Dendrites help neuroscientists see the forest for the trees

Dendritic arbors provide just the right scale to study how individual neurons reciprocally interact with their broader circuitry—and are our best bet to bridge cellular and systems neuroscience.

Two primate centers drop ‘primate’ from their name

The Washington and Tulane National Biomedical Research Centers—formerly called National Primate Research Centers—say they made the change to better reflect the breadth of research performed at the centers.

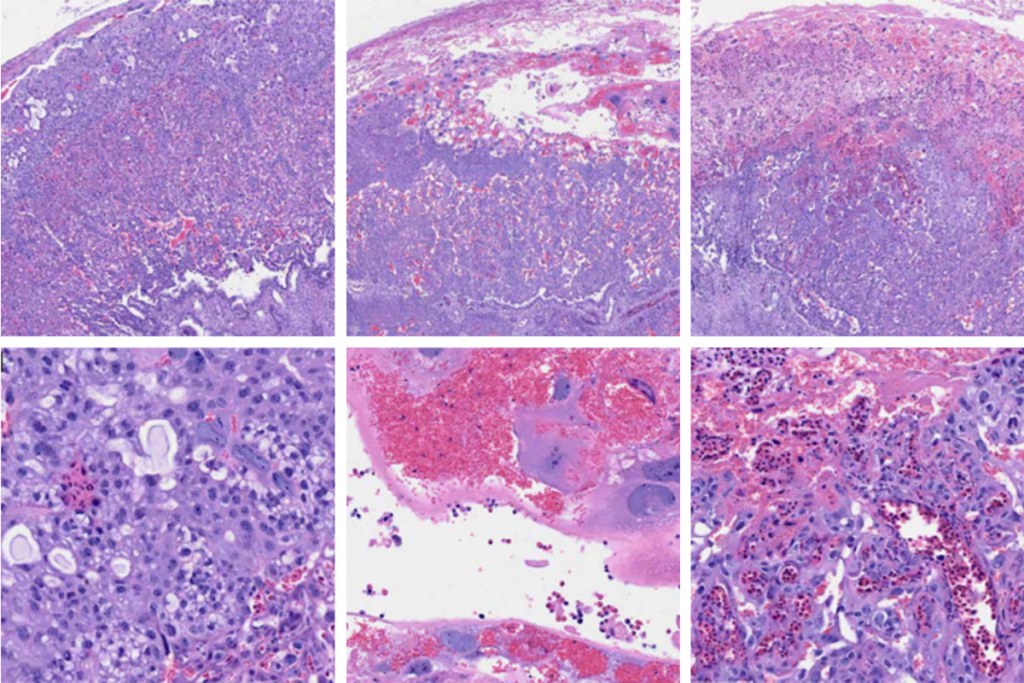

Post-infection immune conflict alters fetal development in some male mice

The immune conflict between dam and fetus could help explain sex differences in neurodevelopmental conditions.
