It is a well-known irony of academia that the job of a principal investigator (PI) requires skills professors are traditionally not trained for. From teaching and mentoring to project management and budgeting, PIs are required to know many things that don’t come up during the pure research they do as Ph.D. students and postdoctoral researchers. To some extent, these are “known unknowns,” meaning skills an incoming professor knows they’ll need to learn, even if they haven’t had much experience in them yet. I can say from personal experience, however, that one part of the job caught me off guard: my role as an evaluator.

In just over a year and a half as a new professor, I have read about 400 research statements, including applications for scholarships, Ph.D. programs, postdoctoral fellowships, faculty positions and grants, written by everyone from undergraduate students to full professors. These statements represent the plans and hopes of a whole crop of budding scientists, and my job is to help decide whether those plans will play out.

On what basis are such decisions to be made? Most postdocs have some experience evaluating research, notably from serving as a reviewer on a paper. For journal reviews, the task is constrained, the standards are somewhat clear, and the outcome is a full report detailing any concerns, which is returned to the authors and an editor. Evaluating research proposals and other types of future work, however, is quite different. Proposals often lack the level of detail needed to fully convey how rigorous the eventual work will be. There may even be uncertainty as to whether the work can be done at all, or at least whether this specific person in this specific place with these specific resources can plausibly do it. In many cases, the evaluation of the proposed work can’t be nuanced but rather needs to collapse to a thumbs up or thumbs down.

I have come to appreciate that, most importantly, evaluating planned work requires a set of scientific values. What kind of work do you want produced? In which direction do you think the field should go? What influence do you want to see more of? And how much should you let your own personal preferences and interests guide your decision? Answers to these questions require pulling from a driving vision for your field, something most new PIs haven’t had time to develop.

I

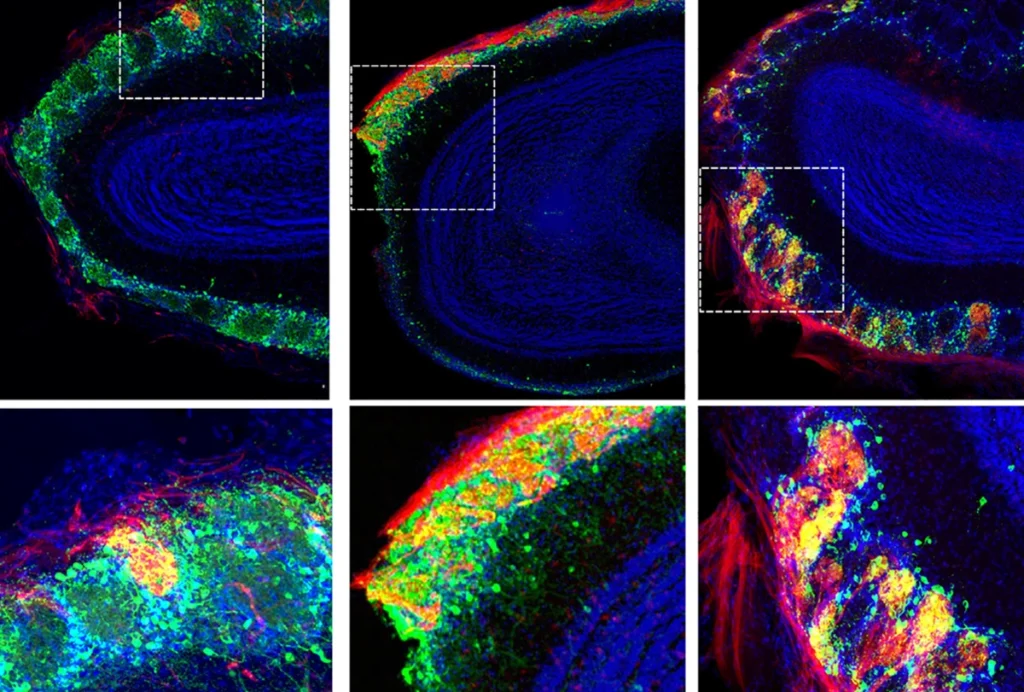

n neuroscience, these questions manifest in many ways. My work, for example, straddles the border between neuroscience and artificial intelligence, so I frequently need to consider how much I value neuro-AI proposals that focus on biological fidelity versus those that draw more from computer science topics. When judging computational work, I also need to consider what may bring bigger returns: exploring a bold new theory of brain function or hammering out the details of an existing one. Lastly, I need to weigh the value of a mathematically elegant approach against one that is “ugly” but might be able to achieve more.Because PIs are also required to judge work outside their own domain, it is important to have a rubric for assessing quality more generally. Fortunately, many issues that we consider within our own fields are also applicable more broadly. The “lumpers versus splitters” debate, for example—whether one should try to unify different examples by using only broad categories or divide them into ever smaller ones—applies across research topics at many levels, ranging from cell types to psychological capacities. Being able to assess when lumping may be more useful than splitting, or vice versa, can therefore have broad utility. The contrast between “big data”-style naturalistic experiments and smaller, tightly controlled ones is another recurring theme. Developing a sense for when exploring a large and diverse dataset can provide insight versus when more targeted designs are needed also makes these decisions easier.

Even more challenging, these evaluations cannot be made in isolation. Like a well-balanced stock portfolio, a research field needs to be diversified. As someone who studies attention, I would love to see many well-funded labs churning out great attention research suited to my scientific questions. But I know that is not the only work that should be funded. Even within my own lab, to produce the most innovative outputs I need people with a diverse set of expertise and interests. So, when evaluating research proposals, it is important to keep a bigger picture in mind: to recognize when certain research areas aren’t getting the resources they deserve, and when a given topic may be oversaturated. As students of animal behavior, we should know the importance of both exploitation and exploration, and balance our research accordingly.

Unfortunately, as is the case with so many of our academic duties, we are rarely allowed to take the time these decisions deserve. But to help accelerate your thinking, I recommend finding some time to regularly reflect on your own values, preferences and desires for the field, particularly as a new PI. This can enhance productivity in the long run, as you then have some precompiled criteria in place when decision time comes.

I also encourage postdocs who plan to become faculty to reflect on their evaluation values. What work excites you, and why? What common pitfalls have you seen? What forms of interdisciplinary interaction appear fruitful to you? What common methods do you worry are leading us astray? Not only does such reflection help prepare you for the onslaught of decisions you’ll be making, it can also help shape your own work toward what you think is most impactful.

I have guided my own work toward more complex tasks and stimuli after realizing how much I prefer these when evaluating proposed work. I’ve also pushed myself to aim for more ambitious goals and to propose more novel theories. Overall, frequent evaluation of proposed work has encouraged me to attempt science that I think will have the greatest impact.